In this, his second year presenting a “must see” Monday Short Course on Artificial Intelligence at SID Display Week, Dr. Achin Bhowmik, Intel’s VP and GM of the Perceptual Computing group, focused on image recognition and visual understanding with deep learning technologies that link directly to the human practice of, well, making mistakes. One might call it the “Oops factor”.

It can be argued that these mistakes have directly influenced biological evolution to date, and today we have the Darwin Awards (which somewhat morbidly “salute the improvement of the human genome by honoring those who accidentally remove themselves from it…”). A-I, it seems, now borrows heavily from biology, using algorithms called “artificial neurons” to model human neurons and, through them, human behavior, so read on…

“Here’s a fun exercise,” Bhowmik began. “Ask Google’s Assistant (or Amazon’s Alexa or Apple’s Siri) to show you pictures of cats.” Turns out this task, handed out in 1966 as a mere “summer project” to student (and now A-I pioneer) Gerald Sussman by his mentor Marvin Minsky, is harder than it might seem.

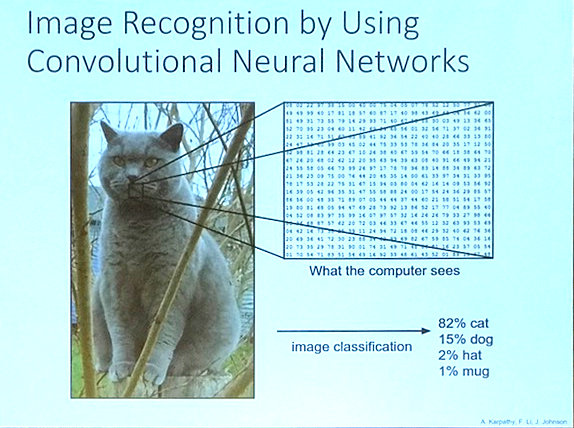

A data set of cat images is just the beginning. Source: Achin Bhowmik


As the legend goes, Minsky quipped: “Spend the summer linking a camera to a computer and get the computer to describe what it sees.” That task turned into a fifty-year-and-counting saga we now know as artificial intelligence, or A-I for short. “We’re still working on that summer project,” Bhowmik said with a faint smile.

The early beginnings of A-I: a summer project grows long in the tooth, and we are still working on this one… Source: Achin Bhowmik

Last year, you may remember, Bhowmik treated session attendees to a most unexpected discussion that began with the “Cambrian Explosion.” This geological event links directly to the evolution of the human visual system, and points to critical issues the display industry needs to consider today.

It turns out that human learning is also a major part of the A-I breakthroughs that led to Alexa’s (and Siri’s) ability to pick that cat out of a mountain of visual data and deliver it in milliseconds. This progress is not trivial, Bhowmik said, and is directly linked to sophisticated algorithms built on what we humans do best… making mistakes!

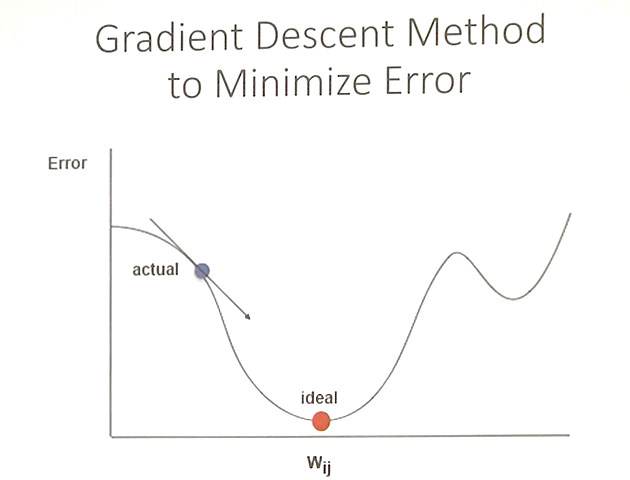

An artificial neuron keeps making mistakes and iterating until an optimum fit to the data set is found. Source: Achin Bhowmik


On the A-I front, modeling neurons with algorithms called “artificial neurons” leads to a training process based on iterative learning from a known data set. Forward propagation computes the neuron’s output, and back propagation feeds the resulting error back to adjust the weights so that the error shrinks. The process relies on a ‘gradient descent’ method that is repeated until a statistically optimal result is achieved, that is, until the output closely matches the known data set or “ground truth” data. This approach represents a significant breakthrough in A-I: the artificial neuron is trained by iteratively reducing its mistakes.
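For readers who like to see the “Oops factor” in action, here is a minimal sketch of that training loop in Python. It is not taken from Bhowmik’s course material. The single sigmoid neuron, the half squared error measure, the learning rate, the epoch count and the toy “ground truth” (a logical AND table) are all assumptions chosen purely for illustration, and the function names are hypothetical.

```python
import math


def sigmoid(z):
    """Squash a weighted sum into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(weights, bias, inputs):
    """Forward propagation: weighted sum of the inputs, then the sigmoid."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)


def mean_squared_error(weights, bias, samples):
    """Average squared mistake over the whole known data set."""
    return sum((forward(weights, bias, x) - t) ** 2 for x, t in samples) / len(samples)


def train_neuron(samples, learning_rate=0.5, epochs=5000):
    """Train one neuron by gradient descent (assumed settings, not tuned)."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            output = forward(weights, bias, inputs)  # make a guess
            error = output - target                  # measure the mistake
            # Back propagation: chain rule through the half squared error
            # 0.5 * error**2 and the sigmoid, whose slope is output * (1 - output).
            delta = error * output * (1.0 - output)
            # Gradient descent: nudge each weight against its gradient.
            weights = [w - learning_rate * delta * x for w, x in zip(weights, inputs)]
            bias -= learning_rate * delta
    return weights, bias


# Hypothetical "ground truth" data: the logical AND of two inputs.
GROUND_TRUTH = [
    ((0.0, 0.0), 0.0),
    ((0.0, 1.0), 0.0),
    ((1.0, 0.0), 0.0),
    ((1.0, 1.0), 1.0),
]

if __name__ == "__main__":
    w, b = train_neuron(GROUND_TRUTH)
    for inputs, target in GROUND_TRUTH:
        print(inputs, "target:", target, "output:", round(forward(w, b, inputs), 3))
    print("mean squared error:", mean_squared_error(w, b, GROUND_TRUTH))
```

The neuron starts out knowing nothing (every output is 0.5) and improves only by being wrong, over and over, with each pass shaving a little off the error. Real image recognition networks stack many such neurons in layers, but the learn-from-mistakes loop has the same shape.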

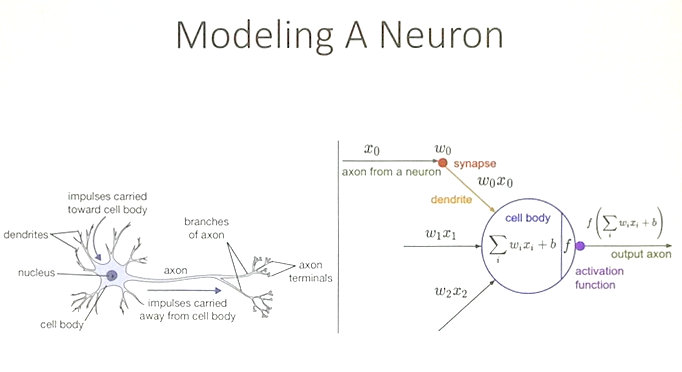

Modeling human systems gets us closer to optimal performance and efficiency through minimizing error.


So, once again, Bhowmik shows that by turning our attention to the human system as a template, we may indeed find keys to solving complex problems that our biological intelligence has already faced, the same intelligence that got us this far along (and, by the way, full circle) in the process. – Stephen Sechrist