Whenever there is a change in the world, some companies will try to ‘catch the wave’ to make the most of the particular circumstances, while others will carry on in their own way. The sudden increase in the number of messages I have had about ‘touchless touch’ since the start of the Covid-19 pandemic is an example.

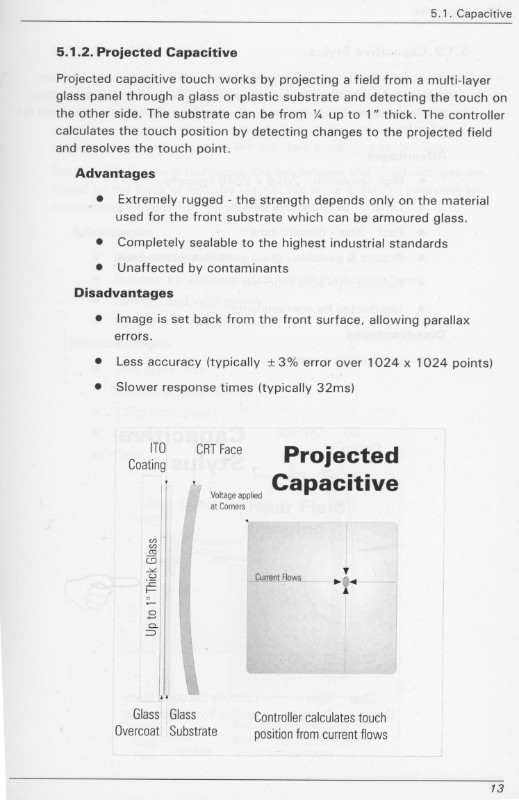

Touch has become a huge influence on the display market in general. It’s not a new technology: I wrote a booklet about touch technologies for what was Microtouch (later 3M Touch) more than 25 years ago. Much of what I wrote has remained true and useful, but the big change since then has been the rise of projected capacitive (PCap) touch. When I wrote that booklet, PCap was seen as a rather exotic touch technology, mainly for use behind very thick glass. One application envisioned at the time was in shop windows, to allow touch interaction when stores were closed.

The booklet I made in 1998 for Microtouch.

At that time, adding touch usually meant putting some kind of coating on the surface of the display. That was quite a complicated process when the dominant display was the CRT, most types of which (apart from the Trinitron, Diamondtron and FTM designs) were curved in two planes. Typically, adding a resistive or surface capacitive touch system significantly degraded the optical performance of the display.

However, Apple changed that by adopting PCap as the technology of choice for the iPhone in 2007. There was a lot of surprise at the time, as the firm was said to be paying more for the touch system than for the display itself. That was seen as completely mad. However, Apple’s development of a great multitouch technology that did not ruin image quality really changed the way that users interacted with displays. Even old display industry professionals like me have been known to swipe or tap at non-touch displays, assuming that touch is now almost mandatory.

PCap touch was then adopted in tablets, led by the iPad. My monitor analyst at the time was convinced that the iPad would fail, as the kind of EMR touch used by Microsoft in its tablet designs had cost too much, weighed too much and used too much power. He had experience of launching a tablet with that kind of technology, so “he knew”. What he underestimated was the progress made in PCap touch from 2007 to 2010. However, he also said that he thought ‘convertibles’ were a better option for corporate use, and on that he was right.

However, touch has not always been popular everywhere or for all applications. I have long held the view that touch doesn’t make sense on the desktop: if you are near enough to the screen to touch it comfortably, your ergonomics are all wrong. Touch has some benefits on notebooks, where the ergonomics are already very poor (and, more or less, illegal in Europe for people who use screens a lot in the workplace).

In Japan, where cleanliness and hygiene are taken much more seriously than elsewhere, touch has always been viewed with some suspicion, and special anti-bacterial coatings and glasses have been developed. Corning, for example, has an antimicrobial version of its Gorilla Glass.

The reason for the concern was highlighted at the end of 2018, when a survey of touchscreens in McDonald’s restaurants found ‘gut and faecal bacteria’ on the screens. That’s not good news when you are probably about to pick up your food and eat it with your hands! (Synchronicity In the Dirt). It’s even worse in a global pandemic, when the virus can survive on cold surfaces for some time.

The solution, one would hope, would be gestures, but as I pointed out in that article, there is no ‘grammar’ for gestures. It’s easy to forget that, before the launch of the iPhone, Apple ran advertising that essentially trained potential buyers in how to use the touch display. Until there is a formal or de facto standard for gestures, so that we know what we are signalling to the system, the use of gestures is likely to be limited.

I was very impressed by a demo of gesture recognition by the Israeli sensor company PrimeSense (the firm behind the Kinect technology) and its partners at CES in 2013. However, the company was acquired by Apple later that year and disappeared into the firm’s R&D empire and off our radar. I suspect that its technology may reappear when Apple eventually shows the world what it has been doing in AR. After all, glasses and goggles are not a good surface for anything more than occasional touch input.

Image:Ultraleap

The other part of the solution is feedback to confirm a gesture. I’m sure we’ve all submitted a form on a website several times because it wasn’t clear that it had been accepted. Ultrahaptics is the firm in this area that we have seen most often over the years (we have reported on it since 2013), and in 2019 it joined with Leap Motion, which had, in my view, the best finger and hand recognition technology. Together they formed Ultraleap to combine the two technologies: hand recognition and ‘mid-air haptics’ generated by ultrasound. We most recently reported on the collaboration between Ultraleap and one of my favourite VR companies, Varjo. (Varjo Announces First-Ever Mixed Reality Dimensional Interface for Professional Immersive Computing)

At the moment, nobody seems to have established their ‘grammar’ as the way to operate with gestures. Covid may provide an opportunity for someone to ‘catch the wave’. I suspect, however, that in the end it might be the automotive industry that establishes a de facto or formal standard for gestures, as gestures would make interfaces so much more convenient in a car. (BR)