On July 21st, SMPTE hosted a webinar titled “Content Acquisition Using Light Field Technology.” The content in question is cinema-grade content, not gee-whiz photos for amateurs to play with. This has been a hot topic since NAB last April, when Lytro introduced a light field camera whose resolution, bit depth and frame rate make it capable of acquiring cinema content. A back-of-the-envelope estimate I did indicates this content can then be shown by conventional 4K digital cinema projectors.

Speakers at this webinar included Peter Ludé of RealD, who was the moderator; Ryan Damm, Co-founder of Visby Cameras; Jon Karafin, Head of Light Field Video at Lytro; and David Stump, ASC, cinematographer and author, who has worked with Lytro and has actually made cinema-grade content using the Lytro Cinema camera.

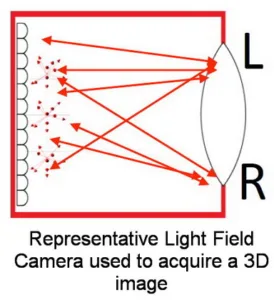

The webinar began with an introduction to light fields and light field cameras. A light field is described by a 5D plenoptic function, as shown in the image. That is, the light varies in five dimensions: three spatial (x, y, z) and two angular (θ, φ). Since light travels in straight lines (ignoring lenses and general relativity), the light carried along a given ray does not change from point to point on that ray, so one of these dimensions is redundant and the light field can be completely described by a 4D plenoptic function. This reduction by eliminating a spatial variable is considered a holographic process and is closely related to the conventional meaning of “hologram.” In this sense of “holographic,” however, diffraction is not involved.
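The drop from five dimensions to four is easiest to see with the two-plane parameterization common in the light field literature (an assumption here; the webinar did not say which parameterization Lytro uses). A ray is labelled by where it crosses two parallel planes, so describing it from any point along the same ray yields the same four numbers. This minimal sketch places the planes at hypothetical positions z = 0 and z = 1, and it cannot represent rays running parallel to those planes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ray:
    """A ray given by any point (px, py, pz) on it and a direction (dx, dy, dz)."""
    px: float
    py: float
    pz: float
    dx: float
    dy: float
    dz: float


def two_plane_coords(ray, z_uv=0.0, z_st=1.0):
    """Return 4D coordinates (u, v, s, t): where the ray crosses the plane
    z = z_uv and the plane z = z_st. The point used to describe the ray
    does not affect the result, so position along the ray drops out."""
    if ray.dz == 0:
        raise ValueError("ray is parallel to the parameterization planes")

    def crossing(z):
        lam = (z - ray.pz) / ray.dz  # ray parameter: multiples of the direction vector
        return ray.px + lam * ray.dx, ray.py + lam * ray.dy

    u, v = crossing(z_uv)
    s, t = crossing(z_st)
    return (u, v, s, t)


# Two different points on the same ray give identical 4D coordinates.
a = Ray(0.0, 0.0, 0.0, 1.0, 2.0, 4.0)
b = Ray(0.5, 1.0, 2.0, 1.0, 2.0, 4.0)  # a's point + 0.5 * direction
print(two_plane_coords(a), two_plane_coords(b))  # (0.0, 0.0, 0.25, 0.5) twice
```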

In the image, the 5D light field is generated by light reflected off a goat. Once you have reduced it to a 4D light field and evaluated this light field in all four dimensions, you no longer need the goat: if you have the light field, the image it produces is in every way like the image produced by the goat. This is the basis of light field imaging. In conventional cameras, only the 2D field corresponding to θ and φ is acquired. The x-y spatial data, that is, the distribution of light over the pupil of the camera lens, is discarded.

Of course, this is a simplification. In particular, two other important variables must also be considered in an imaging or display system: the wavelength of the light (color) and its temporal variation (moving images).
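The point that a conventional camera discards the pupil (x-y) data can be made concrete with a toy example, ignoring color and time. This sketch assumes a hypothetical layout `lf[u][v][y][x]`, where (u, v) indexes the pupil sample and (y, x) the image pixel; a conventional sensor pixel simply adds up everything arriving at it from the whole pupil.

```python
def conventional_image(lf):
    """Collapse a 4D light field lf[u][v][y][x] to a 2D image by summing every
    pupil sample (u, v) that lands on pixel (y, x), as a conventional sensor does."""
    nu, nv = len(lf), len(lf[0])
    ny, nx = len(lf[0][0]), len(lf[0][0][0])
    return [[sum(lf[u][v][y][x] for u in range(nu) for v in range(nv))
             for x in range(nx)]
            for y in range(ny)]


# Two different light fields: 2 x 1 pupil samples, 1 x 2 pixel image.
lf_a = [[[[1, 0]]], [[[0, 1]]]]  # pupil sample u=0 lights pixel 0, u=1 lights pixel 1
lf_b = [[[[0, 1]]], [[[1, 0]]]]  # the reverse
print(conventional_image(lf_a), conventional_image(lf_b))  # both [[1, 1]]
```

Both light fields produce the same 2D image, so once the pupil dimension has been summed away there is no way to tell which one the camera saw.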

One consequence of light field imaging is the immense amount of data involved. An 8-bit/color 4K 4:4:4 still image contains about 25 Mbytes of data in an x-y (really θ-φ) grid pattern. If you want to evaluate each point at a variety of angles (x-y points on the lens pupil), you have this much data for each angle. I made a back-of-the-envelope estimate based on the publicly available information on the Lytro Cinema camera and concluded that it evaluates about 49 different angles (pupil locations), an angular grid of about 7 horizontal by 7 vertical perspectives. A light field camera like the Lytro Cinema will therefore produce approximately 49 times as much data as a conventional 2D 4K camera. Since Lytro claims its cinema camera is capable of 300 frames per second and 16 stops of dynamic range, the quantity of data recorded may actually be much more than this.
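The arithmetic behind these figures can be sketched as follows. The assumptions are mine, not Lytro specifications: a DCI 4K raster of 4096 × 2160, three samples per pixel (4:4:4), no compression, the 7 × 7 view grid estimated above, and 16 bits per sample as a hypothetical stand-in for a high-dynamic-range raw format.

```python
def frame_bytes(width=4096, height=2160, channels=3, bits_per_sample=8):
    """Uncompressed size in bytes of one 2D 4:4:4 frame."""
    return width * height * channels * bits_per_sample // 8


def light_field_frame_bytes(views_h=7, views_v=7, **frame_kwargs):
    """One light field frame: a full 2D frame for every angular view."""
    return views_h * views_v * frame_bytes(**frame_kwargs)


def data_rate_gb_per_s(fps, **kwargs):
    """Uncompressed data rate in gigabytes (10**9 bytes) per second."""
    return fps * light_field_frame_bytes(**kwargs) / 1e9


print(frame_bytes() / 2**20)                        # ≈ 25.3 MiB per 2D frame
print(light_field_frame_bytes() / 1e9)              # ≈ 1.3 GB per light field frame
print(data_rate_gb_per_s(24))                       # ≈ 31.2 GB/s at 24 fps, 8 bits
print(data_rate_gb_per_s(300, bits_per_sample=16))  # ≈ 780 GB/s at 300 fps, 16 bits
```

Even before the higher frame rate and bit depth are considered, each light field frame is on the order of a gigabyte.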

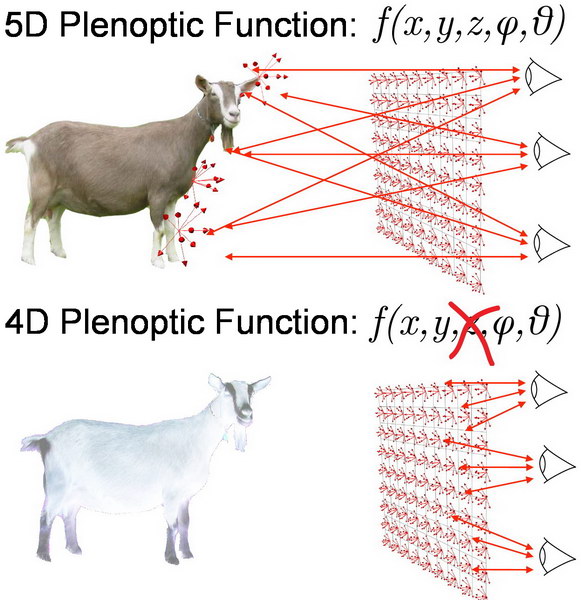

After the introduction to light fields and light field cameras, Jon Karafin, with the assistance of David Stump, explained how Lytro has set up a post-production workflow that allows studios and post-production facilities to turn the data from the Lytro light field camera into usable cinema images. The first step in the process is to upload all the light field data to servers in the cloud, so there is no need for local servers with enough capacity to process the huge amount of data produced by the Lytro camera. The local server associated with the camera does only some simple pre-processing and then uploads the data to the cloud server.

Lytro has produced plug-ins for normal cinema content editing software that allow the editors working on the content to use familiar tools. The overall goal of the editing process is to produce a conventional Digital Intermediate (DI) that can be shown on conventional cinema projectors or other displays. Light field displays are not required to take advantage of light field cameras.

The editors see proxy images of the light field data, not the light field data itself. This allows them to apply various editing techniques and see how the results would look in the final cinema image. Available tools include adjusting focus, adjusting depth of field and converting the light field data into stereoscopic 3D images. All these effects can be applied to the whole image or to just part of it. The interpupillary distance available when converting light field data into stereoscopic 3D images is limited by the diameter of the camera's objective lens. Since the Lytro Cinema camera has a very large diameter objective lens, this should not be an issue for stereoscopic 3D cinema.
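One textbook way to adjust focus after capture is shift-and-add refocusing from the light field research literature. It is shown here only to illustrate the principle; it is not a description of Lytro's plug-ins. Each sub-aperture view (one pupil position) is shifted in proportion to its offset from the pupil centre and the views are averaged, so objects whose disparity matches the chosen shift come out sharp. This sketch uses the same hypothetical `lf[u][v][y][x]` layout, whole-pixel shifts and no interpolation.

```python
def refocus(lf, shift_per_view):
    """Shift-and-add refocusing of a light field lf[u][v][y][x].

    Each view (u, v) is sampled at an offset of shift_per_view pixels per
    view step away from the pupil centre (rounded to whole pixels), and all
    views are averaged. Points whose disparity between adjacent views equals
    shift_per_view line up and come out sharp; others are blurred. Samples
    that fall outside the frame are clamped to the nearest edge pixel."""
    nu, nv = len(lf), len(lf[0])
    ny, nx = len(lf[0][0]), len(lf[0][0][0])
    cu, cv = (nu - 1) / 2, (nv - 1) / 2
    out = [[0.0] * nx for _ in range(ny)]
    for u in range(nu):
        for v in range(nv):
            dy = round(shift_per_view * (u - cu))
            dx = round(shift_per_view * (v - cv))
            view = lf[u][v]
            for y in range(ny):
                sy = min(max(y + dy, 0), ny - 1)
                for x in range(nx):
                    sx = min(max(x + dx, 0), nx - 1)
                    out[y][x] += view[sy][sx]
    n = nu * nv
    return [[p / n for p in row] for row in out]


# A single bright point seen by a 1 x 3 row of views; it moves one pixel
# to the right per view step (disparity 1).
views = []
for v in range(3):
    row = [0.0] * 7
    row[2 + v] = 1.0
    views.append([row])
lf = [views]  # shape: 1 x 3 x 1 x 7

print(refocus(lf, 0))  # point smeared over three pixels at 1/3 each
print(refocus(lf, 1))  # point sharp: 1.0 at x = 3
```

Averaging only the views nearest the pupil centre would act like a smaller aperture and extend depth of field, which is the idea behind adjusting depth of field in post.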

Lytro has introduced locks that allow the creatives on set, e.g., the director, director of photography and cinematographer, to choose and lock adjustable parameters such as focus, depth of field and shutter angle. Of course, there are also tools that allow these locks to be overridden in post-production. If focus and depth of field were irreversibly locked on set, there would hardly be any need for a light field camera; you might as well use a conventional 2D camera.
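The lock-and-override idea can be modelled as a small data structure. Everything here is hypothetical, including the class name and the setting names; it only illustrates the behavior described above: an on-set value stays fixed unless post-production deliberately overrides it.

```python
from dataclasses import dataclass, field


@dataclass
class ShotSettings:
    """Hypothetical per-shot render settings for a light field clip.

    Values chosen on set can be locked; a locked value changes only when
    post-production explicitly overrides the lock."""
    values: dict = field(default_factory=dict)
    locked: set = field(default_factory=set)

    def lock(self, name, value):
        """Record an on-set decision and lock it."""
        self.values[name] = value
        self.locked.add(name)

    def adjust(self, name, value, override=False):
        """Change a setting; locked settings require override=True."""
        if name in self.locked and not override:
            raise PermissionError(f"{name!r} was locked on set; pass override=True")
        self.values[name] = value


shot = ShotSettings()
shot.lock("focus_distance_m", 3.0)        # chosen on set
shot.adjust("shutter_angle_deg", 180)     # unlocked: changes freely
try:
    shot.adjust("focus_distance_m", 5.0)  # refused: still locked
except PermissionError as err:
    print(err)
shot.adjust("focus_distance_m", 5.0, override=True)  # deliberate override in post
print(shot.values)
```

In this sketch an override changes the value but leaves the lock in place, so later accidental edits are still refused.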

The light field data can be reprocessed many times for different display types, repurposing the content for applications besides digital cinema. For example, the interpupillary distance can be adjusted in post for various display sizes and distances from the viewer.
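Adjusting the interpupillary distance in post amounts to choosing which two sub-aperture views become the left and right eyes. This sketch assumes a single horizontal row of views that evenly divide the aperture, so the widest achievable baseline is the spacing between the outermost views, which is slightly less than the aperture itself. The 7-view count follows the estimate earlier in this article; the 70 mm aperture is a hypothetical figure, not a Lytro specification.

```python
def stereo_pair(num_views, aperture_mm, baseline_mm):
    """Pick left/right view indices from a horizontal row of num_views
    sub-aperture views to approximate a requested stereo baseline.

    Assumes the views evenly divide an aperture of width aperture_mm, so
    adjacent view centres are aperture_mm / num_views apart. Requests wider
    than the outermost views allow are clamped."""
    pitch = aperture_mm / num_views
    steps = min(round(baseline_mm / pitch), num_views - 1)
    left = (num_views - 1 - steps) // 2  # keep the pair roughly centred
    right = left + steps
    return left, right, steps * pitch


print(stereo_pair(7, 70.0, 30.0))   # (1, 4, 30.0)
print(stereo_pair(7, 70.0, 100.0))  # (0, 6, 60.0): clamped to the outermost views
```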

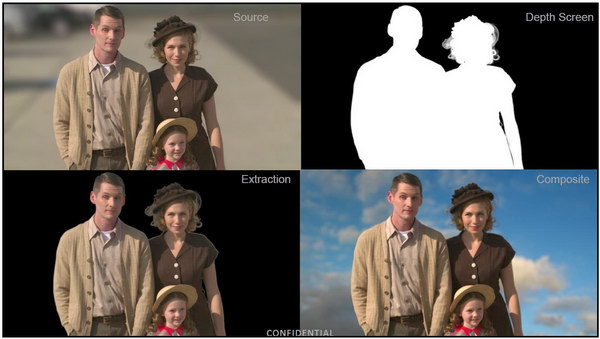

One important thing that can be done with light field data is depth screening. With a conventional 2D cinema camera, if the director wants to superimpose the actors on a background that would be impossible, dangerous or excessively expensive to use in real life, the normal technique is to film the actors in front of a green or blue screen. In post-production, the actors are separated from the background by color and the desired background is placed behind them. With a light field camera, it is possible to use any background and separate the actors from it by depth, as shown in the image.
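Depth screening can be sketched as a matte computed from depth rather than color. This minimal example assumes a per-pixel depth map has already been estimated from the light field (that step is not shown) and uses a hard in-or-out matte; a production key would need soft edges around hair, motion blur and so on. All values are made up for illustration.

```python
def depth_matte(depth, near_m, far_m):
    """Hard matte: 1.0 where the per-pixel depth lies within [near_m, far_m]."""
    return [[1.0 if near_m <= d <= far_m else 0.0 for d in row] for row in depth]


def composite(fg, bg, matte):
    """Keep fg where the matte is 1 and bg where it is 0 (linear blend in between)."""
    return [[m * f + (1.0 - m) * b for f, b, m in zip(f_row, b_row, m_row)]
            for f_row, b_row, m_row in zip(fg, bg, matte)]


depth = [[2.0, 2.5, 10.0]]   # metres: two actor pixels, one wall pixel
shot = [[5.0, 6.0, 7.0]]     # captured image
plate = [[0.0, 0.0, 9.0]]    # replacement background
matte = depth_matte(depth, 1.0, 4.0)
print(composite(shot, plate, matte))  # [[5.0, 6.0, 9.0]]
```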

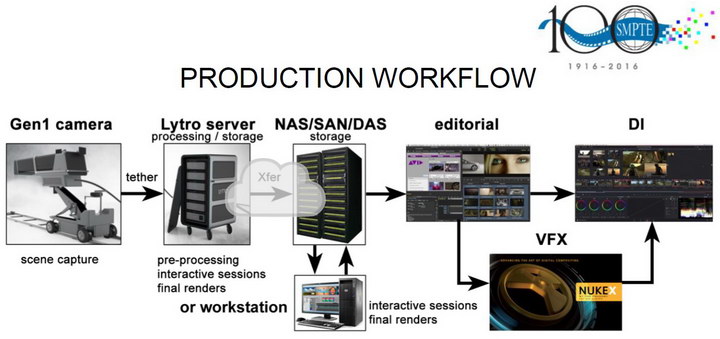

After the webinar, I had questions about the main imaging lens used in a light field camera. This lens is normally depicted as a singlet lens in block diagrams, such as the one shown above, but I suspected it was not a singlet in practice. So I e-mailed Ryan Damm at Visby with my questions. His response was:

“Thanks for reaching out. Briefly, the main (or objective) lens in these systems could theoretically have any properties. In practice, because the ray bundles have non-zero extent, there are benefits to controlling aberrations, and the objective lens is not a singlet.

“I should point out right now that I have no particular knowledge of designing these systems; I’m speaking from knowledge of optics, lens design, and light fields in the abstract… Please don’t construe this as inside information on what Lytro – or anyone else – is specifically doing. They don’t discuss these things with me in any case.

“Some aberrations are more-suited for light field correction than others. Field curvature is the most obviously correctable; distortion is likewise easily fixed, including adjusting or adding the desired distortion in post. Chromatic aberration can be helped to a degree, though because it operates across all wavelengths, and a digital camera only samples the spectrum in three wavelength bands, you have to do some interpolation to fully correct it. Lateral chromatic aberration is, I think, easier to deal with than axial chromatic aberration. Spherical and third-order aberrations like coma can probably be corrected if they’re not convolved with large amounts of higher-order aberrations. And in all cases, your ability to correct the lenses is a strict function of your 4D light field ‘resolution’ – you have to have accurate enough ray data, and ray bundles that are sufficiently narrow, to allow you to pick apart the effects you want.

“So, to summarize: each aberration requires separate handling, but in theory can be corrected or interpolated away if you have high enough light field capture resolution.

“A zoom lens would require calibrating the lens for each zoom position or having some explicit model of how the aberrations and ray directions vary with focal length. In practice, you can perform an optically-correct ‘digital zoom’ in post, so it’s unlikely to be worthwhile. That is, it’s not just cropping; you can get depth-of-field effects etc. by synthesizing what a zoom lens would have seen.

“Going forward, one benefit of microlens-based light field systems is the ability to allow certain optical constraints to be relaxed, and using optical designs for the taking lens that would be unsuitable for a traditional camera, e.g., allowing less-constrained spherical aberration, field flatness and distortion. I don’t know if Lytro or anyone else is doing that yet. (I’m not even sure it’s practical, to be honest, but it feels right.)

“Oh, and one thing Lytro has touted is the ability to model different lenses; loading a profile for a specific vintage lens, for example. This means, in practice, adding back in aberrations to match that particular glass. The tradeoffs between aberrations are a major lens design factor, and ultimately a major contributor to the ‘feel’ of a particular lens, especially the vintage lenses that are currently in vogue.”
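Distortion, which Damm singles out as easily fixed or deliberately added in post, is often described with a radial polynomial such as the Brown-Conrady model. The sketch below is a 2D simplification on normalized image coordinates, not the ray-level correction a light field system would perform. The coefficients standing in for a "vintage lens" profile are hypothetical.

```python
def radial_scale(x, y, k1, k2):
    """Radial distortion factor 1 + k1*r^2 + k2*r^4 at normalized point (x, y)."""
    r2 = x * x + y * y
    return 1.0 + k1 * r2 + k2 * r2 * r2


def distort(x, y, k1, k2):
    """Apply radial distortion to normalized image coordinates (x, y),
    measured from the optical axis. k1 < 0 gives barrel, k1 > 0 pincushion."""
    s = radial_scale(x, y, k1, k2)
    return x * s, y * s


def undistort(xd, yd, k1, k2, iterations=20):
    """Invert distort() by fixed-point iteration (converges for mild distortion)."""
    x, y = xd, yd
    for _ in range(iterations):
        s = radial_scale(x, y, k1, k2)
        x, y = xd / s, yd / s
    return x, y


K1, K2 = -0.1, 0.01   # hypothetical "vintage lens" profile
xd, yd = distort(0.3, 0.4, K1, K2)
print(xd, yd)                     # point pulled slightly toward the centre
print(undistort(xd, yd, K1, K2))  # back to approximately (0.3, 0.4)
```

Running `distort` adds a lens character back in; running `undistort` removes it.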

After the webinar was over, SMPTE surveyed the viewers. My comment? “I wish it had lasted longer so they could have gone into more detail on the workflow! Alternatively, SMPTE could run a second webinar that skips the introduction to light field science and technology and devotes the full hour to the workflow aspects of the Lytro Cinema camera.” Perhaps the same comment applies to this Display Daily article.

SMPTE recorded this webcast and plans to post it online at https://www.smpte.org/education/on-demand-webcasts. As of this writing, the recorded version is not yet available.

-Matthew Brennesholtz