The Ultra HD Forum used NAB 2016 to release the first phase of its industry guidelines on end-to-end workflows for creating and delivering live and pre-recorded UHD content. This first release lets the Forum’s members target deployments in 2016. It addresses both technical and commercial challenges, such as production, distribution and consumer decoding of UHD programming with both SDR and HDR content, and it takes the production workflow into account in the face of challenging backward compatibility constraints. A future release will target 2017 and beyond.

We had a chance to hear about these guidelines at a press event and to talk with numerous industry partners about them over the course of the show. We think these guidelines will be well respected and seriously considered for actual rollouts of UHD services.

The UHD Forum currently has 46 members composed of content providers, broadcasters, service providers, professional equipment manufacturers, technology solution providers, CDNs, chip-makers and device manufacturers. They are also working closely with other organizations including MPEG, DVB, DASH-IF, ATSC, SCTE, SMPTE, CableLabs, NAB and the UHD Alliance.

To be clear, the Ultra HD Forum is a global organization that is focused on the infrastructure to produce and deliver UHD content to the home. Their mission is to define industry best practices.

The UHD Forum is also working with the UHD Alliance, but the Alliance is more focused on content creation guidelines and display device guidelines, along with a certification program. There are no certifications associated with the UHD Forum.

The full guidelines document is available only to Ultra HD Forum members. However, a summary description will be posted to http://ultrahdforum.org/resources/phasea-guidelines-description/.

The initial focus of the guidelines is on technologies and practices that support a commercially deployable Ultra HD real-time linear service with live and pre-recorded content in 2016, which is termed a “UHD Phase A” service, as defined in the table below.

| Parameter | UHD Phase A |
|---|---|
| Spatial Resolution | 1080p* or 2160p |
| Color Gamut | BT.709, BT.2020 |
| Bit Depth | 10-bit |
| Dynamic Range | SDR, PQ, HLG |
| Frame Rate** | 24, 25, 30, 50, 60 |
| Video Codec | HEVC, Main 10, Level 5.1 |
| Audio Channels | Stereo or 5.1 multi-channel audio |
| Audio Codec | AC-3, EAC-3, HE-AAC, AAC-LC |
| Broadcast security | AES or DVB-CSA3, using a minimum key size of 128 bits |
| IPTV / OTT security | AES as defined by DVB |
| Transmission | Broadcast (TS), Multicast (IP), Unicast Live & On demand (DASH ISO BMFF) |
| Captions/Subtitles Coding (in/out formats) | CTA-608/708, ETSI 300 743, ETSI 300 472, SCTE-27, IMSC1 |

*1080p together with WCG and HDR fulfills certain use cases for UHD Phase A services and is therefore considered to be an Ultra HD format for the purposes of this document. 1080p without WCG or HDR is considered to be an HD format. The possibility of 1080i or 720p plus HDR and WCG is not considered here. HDR and WCG for multiscreen resolutions may be considered in the future.

**Fractional frame rates for 24, 30 and 60 fps are included, but not preferred. Lower frame rates may be best applied to cinematic content.
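To make the table and its footnotes concrete, here is a minimal Python sketch that checks a video format against the Phase A parameters. The `VideoFormat` type and `classify_phase_a` function are hypothetical names for illustration, not part of the guidelines. The sketch assumes progressive scan, and it reads the first footnote as requiring both BT.2020 and an HDR transfer function (PQ or HLG) for 1080p to count as Ultra HD; the full guidelines may draw that line differently.

```python
from dataclasses import dataclass
from fractions import Fraction

# Integer frame rates listed in the Phase A table.
INTEGER_RATES = {24, 25, 30, 50, 60}
# 1000/1001 variants of 24, 30 and 60 fps: included, but not preferred.
FRACTIONAL_RATES = {Fraction(n * 1000, 1001) for n in (24, 30, 60)}


@dataclass
class VideoFormat:
    height: int          # 1080 or 2160 (progressive assumed)
    gamut: str           # "BT.709" or "BT.2020"
    bit_depth: int       # Phase A specifies 10-bit
    dynamic_range: str   # "SDR", "PQ" or "HLG"
    frame_rate: Fraction


def classify_phase_a(fmt: VideoFormat) -> str:
    """Return "UHD", "HD" or "out of scope" (hypothetical helper)."""
    if fmt.bit_depth != 10:
        return "out of scope"
    if fmt.gamut not in ("BT.709", "BT.2020"):
        return "out of scope"
    if fmt.dynamic_range not in ("SDR", "PQ", "HLG"):
        return "out of scope"
    if fmt.frame_rate not in INTEGER_RATES and fmt.frame_rate not in FRACTIONAL_RATES:
        return "out of scope"
    if fmt.height == 2160:
        return "UHD"
    if fmt.height == 1080:
        # Assumption: 1080p needs both WCG and HDR to be treated as Ultra HD.
        has_wcg = fmt.gamut == "BT.2020"
        has_hdr = fmt.dynamic_range in ("PQ", "HLG")
        return "UHD" if (has_wcg and has_hdr) else "HD"
    return "out of scope"
```

For example, `classify_phase_a(VideoFormat(1080, "BT.2020", 10, "HLG", Fraction(60000, 1001)))` returns `"UHD"`, while the same format with BT.709 SDR returns `"HD"`. Fractional rates are accepted because the guidelines include them, even though they are not preferred.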

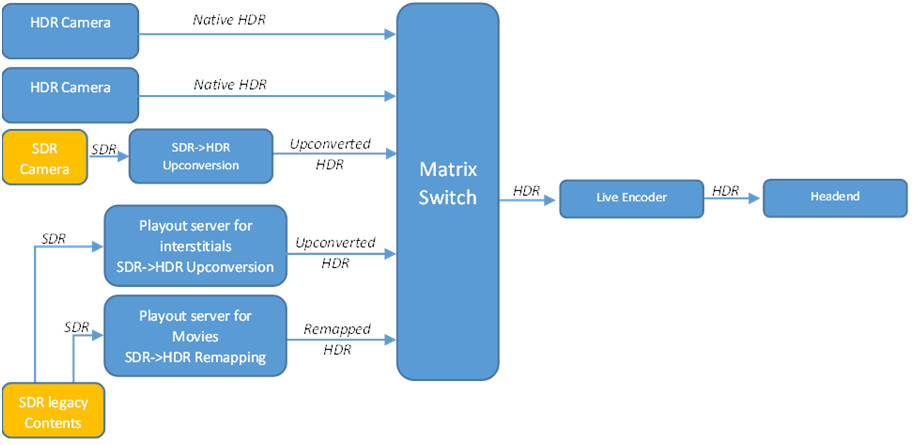

The guidelines also consider the use of mixed HDR and SDR source content in live production, e.g., live HDR camera footage overlaid with SDR graphics, as illustrated in the accompanying diagram.

For delivery of HDR content, the guidelines recommend only PQ-based and HLG-based HDR content for deployments in 2016. The main reason is metadata. Current broadcast production facilities have no way to carry metadata through the plant, so schemes that rely on metadata were not viewed as robust enough to be considered for deployments in 2016. Such metadata-based schemes include Dolby Vision and the Philips/Technicolor approach.

This is not to say that the group does not see merit in these approaches, but they believe the challenges of moving metadata through the chain need to be solved before they can recommend deployments. Typical concerns are what happens to metadata when it passes through a video switcher, goes through multiple encode/decode cycles, or passes through other equipment that may strip it out.

The group also compiled a set of definitions to aid discussion, including two new terms, PQ10 and HLG10, which are welcome additions.

- HDR10 (CTA definition)

- PQ (SMPTE ST 2084 definition)

- PQ10 (Ultra HD Forum definition: PQ EOTF plus BT.2020 color gamut and 10-bit depth)

- HLG (Draft New Rec. BT.[HDR-TV] definition)

- HLG10 (Ultra HD Forum definition: HLG OETF plus BT.2020 color gamut and 10-bit depth)
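PQ10 and HLG10 each pair a transfer function with BT.2020 and 10-bit depth. As a minimal sketch of the transfer-function part, the Python below implements the PQ EOTF and its inverse from SMPTE ST 2084, the HLG OETF as later published in ITU-R BT.2100, and quantization to 10-bit narrow-range code values (64 to 940). It does not cover BT.2020 color conversion, and the helper names are our own.

```python
import math

# SMPTE ST 2084 (PQ) constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_eotf(signal: float) -> float:
    """PQ signal in [0, 1] -> absolute display luminance in cd/m^2."""
    p = signal ** (1 / M2)
    y = (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)
    return 10000.0 * y


def pq_inverse_eotf(nits: float) -> float:
    """Display luminance in cd/m^2 (0..10000) -> PQ signal in [0, 1]."""
    y = (nits / 10000.0) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


# HLG OETF constants (ITU-R BT.2100)
A = 0.17883277
B = 1 - 4 * A
C = 0.5 - A * math.log(4 * A)


def hlg_oetf(scene_linear: float) -> float:
    """Normalized scene light in [0, 1] -> HLG signal in [0, 1]."""
    if scene_linear <= 1 / 12:
        return math.sqrt(3 * scene_linear)
    return A * math.log(12 * scene_linear - B) + C


def to_10bit_narrow(signal: float) -> int:
    """Quantize a [0, 1] non-linear signal to a 10-bit narrow-range code."""
    return round(876 * signal + 64)
```

For example, `to_10bit_narrow(pq_inverse_eotf(100.0))` gives roughly code 509, so a typical SDR peak of 100 cd/m² uses only about half of the PQ10 code range, leaving the rest for highlights.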

The full guidelines describe mechanisms used to convert or map between SDR and HDR and between HDR technologies. Use cases are offered to suggest where in the distribution chain such conversions are recommended and which conversion method to choose for a given situation. Management of peak brightness across the production chain is also documented.
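One building block of mapping SDR into HDR is placing BT.709 content into the BT.2020 container that PQ10 and HLG10 use. The Python sketch below applies the linear-light matrix published in ITU-R BT.2087, assuming a pure 2.4 power law as a stand-in for the BT.1886 display EOTF. It handles the color gamut only; mapping SDR luminance into an HDR range is a separate step, and `bt709_to_bt2020` is a hypothetical helper name.

```python
# ITU-R BT.2087 matrix: linear BT.709 RGB -> linear BT.2020 RGB
M_709_TO_2020 = (
    (0.6274, 0.3293, 0.0433),
    (0.0691, 0.9195, 0.0114),
    (0.0164, 0.0880, 0.8956),
)

# Assumption: simple power-law approximation of the BT.1886 EOTF.
GAMMA = 2.4


def bt709_to_bt2020(rgb):
    """Non-linear BT.709 R'G'B' in [0, 1] -> non-linear BT.2020 R'G'B' in [0, 1]."""
    # Linearize after clamping to the legal [0, 1] range.
    linear = [max(0.0, min(1.0, v)) ** GAMMA for v in rgb]
    # Matrix multiply in linear light (all coefficients are positive).
    converted = [sum(m * c for m, c in zip(row, linear)) for row in M_709_TO_2020]
    # Re-apply the same power law for the BT.2020 SDR signal.
    return tuple(v ** (1 / GAMMA) for v in converted)
```

Because each matrix row sums to 1, SDR white stays white. A saturated BT.709 red, by contrast, picks up nonzero green and blue components in BT.2020, since it lies well inside the wider gamut.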

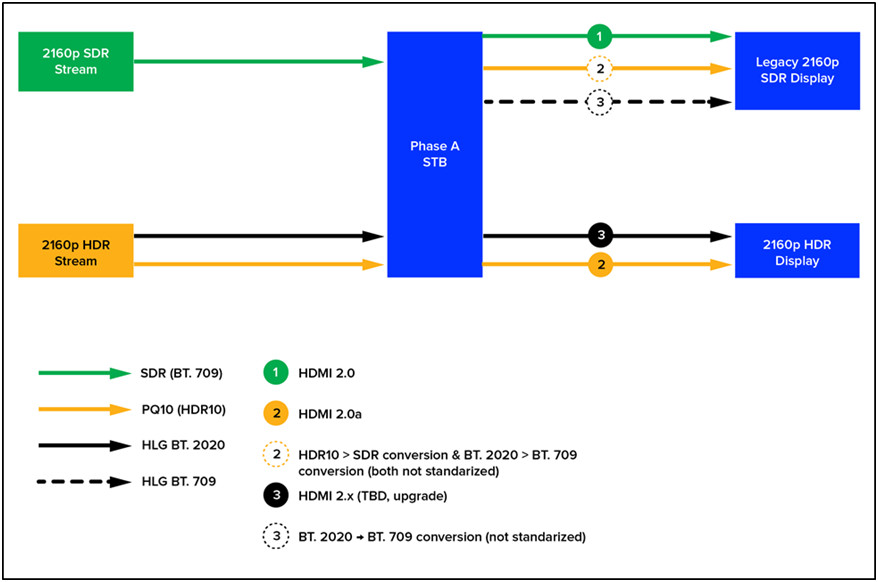

The guidelines also address decoding and rendering devices in the consumer home, covering the key parameters that devices need in order to process UHD Phase A content, including carriage of data over common interfaces such as HDMI. For example, certain Ultra HD parameters are not carried over HDMI 2.0a; the document offers insight into the timeline of standards development and equipment upgrade possibilities. This section also touches on considerations for displaying closed captions or graphic overlays on the consumer device.

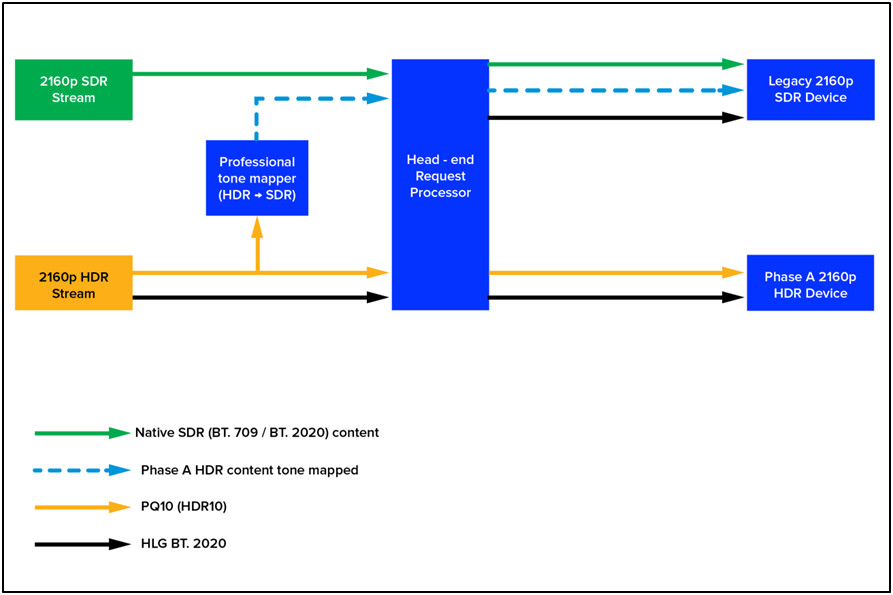

For backward compatibility, the guidelines note that SDR and HDR UHD streams will both be in use for the near future. That means the proper streams need to be delivered to compatible TVs and that conversion between these formats will be needed (between color spaces and between OETF/EOTF dynamic range solutions). In general, this conversion can be done in the set top box or at the broadcaster’s headend. The full report discusses these options, which are further illustrated in the charts below.
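Conversion between HDR formats can be sketched along the same lines. The Python example below converts HLG10 to PQ10 through display light: invert the HLG OETF, apply the HLG OOTF for an assumed 1000 cd/m² reference display (system gamma 1.2, black level taken as zero), then encode with the PQ inverse EOTF. Both signals are assumed to be BT.2020, so no gamut conversion is needed. This is one possible approach under those assumptions, not the method the guidelines prescribe, and the math is the same whether it runs in a set top box or at the headend. The function names are hypothetical.

```python
import math

# HLG constants (ITU-R BT.2100)
A = 0.17883277
B = 1 - 4 * A
C = 0.5 - A * math.log(4 * A)

# PQ constants (SMPTE ST 2084)
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32

PEAK_NITS = 1000.0   # assumption: nominal peak of the reference HLG display
SYSTEM_GAMMA = 1.2   # HLG system gamma for a 1000 cd/m^2 display


def hlg_inverse_oetf(signal: float) -> float:
    """HLG signal in [0, 1] -> normalized scene light in [0, 1]."""
    if signal <= 0.5:
        return signal * signal / 3
    return (math.exp((signal - C) / A) + B) / 12


def pq_inverse_eotf(nits: float) -> float:
    """Display luminance in cd/m^2 -> PQ signal in [0, 1]."""
    y = (max(nits, 0.0) / 10000.0) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


def hlg_to_pq(rgb):
    """HLG R'G'B' (BT.2020) -> PQ R'G'B' (BT.2020) via display light."""
    scene = [hlg_inverse_oetf(v) for v in rgb]
    # Scene luminance from BT.2020 luma coefficients.
    y_s = 0.2627 * scene[0] + 0.6780 * scene[1] + 0.0593 * scene[2]
    # HLG OOTF: the same luminance-dependent gain is applied to R, G and B.
    gain = PEAK_NITS * y_s ** (SYSTEM_GAMMA - 1) if y_s > 0 else 0.0
    display = [gain * e for e in scene]
    return tuple(pq_inverse_eotf(d) for d in display)
```

Under these assumptions, an HLG signal of 0.75 on all three components lands at about 203 cd/m², and HLG peak white lands at 1000 cd/m² (a PQ value of roughly 0.75).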

Reactions to the guidelines are mixed, as might be expected for a document that required the input of 45 companies in an emerging area. For example, one comment I heard was that the Dolby Vision and Technicolor/Philips solutions are nice, but require carriage of metadata.

The Technicolor/Philips solution adds the metadata at the “final encoder”, but critics contend there is no final encoder given the various ways that content flows and is encoded/decoded at many stages.

I also heard people asking how these solutions allow the switching of video with metadata and the insertion of graphics and advertisements that don’t contain metadata. The lack of robust answers to these and other questions is why deployments in 2016 are not recommended. Plus, some contend that you need to decide whether you are shooting for SDR or HDR production, as the lighting and camera setup will differ.

On the other hand, Technicolor was demonstrating a UHD HDR channel concept that showed all of these parts working together, so there will be further discussion, I am sure.

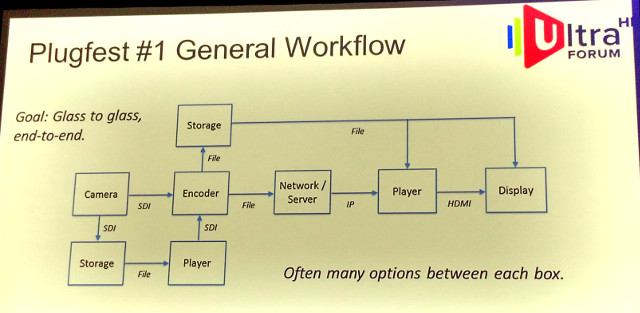

At the press event, the UHD Forum also described its plans to offer plug fests to test interoperability of various solutions on all kinds of equipment. The first plug fest was held March 29-30 in Washington, D.C., and was described by Eric Grab. It was a private, members-only workshop for testing their gear.

One conclusion Grab offered was that “seeing the picture doesn’t always mean it is correct.” That means there may be dynamic range or color conversion errors that need to be understood. More plug fests are being planned for the future. – CC