I wrote a couple of articles last year related to Light Field Displays (LFD) and called them Pixel Ray Displays (PRD). The question was: Are they Waves or Rays?

I know that “real” holograms, either digital or analog, deal with waves. On the other hand, as described in those articles, as the number of pixels increases and the pixel size decreases, we approach the mathematical limit where the number of pixels goes to infinity and the pixel size goes to zero. At that limit, by definition, we leave behind the pixel approximation and “numerical analysis” and enter the scary mathematical world of multiple integrals.

Are we in the Wave world? The answer was NO and is still NO. We are still talking about many, many pixel rays, and not wavefronts. We simply cannot resolve the pixels as their number grows and their size shrinks. That’s all. As a matter of fact, if we have bad eyesight, we reach this situation much sooner, with far fewer pixel rays. Again, we are still in the ray world by definition. Then what happens to the meaning of the term “Light Field”? I think it is as elusive as the terms “Hologram” and “Holographic”.

After reading a few more LFD articles recently in Display Daily and other publications, I started to think about this subject again, as I am always fascinated by how “light rays” are reconstructed to form 3D images viewed through stereoscopes or lenticular lenses. I followed the rays and tried to apply them to the recently developed LFDs, which in my opinion are still PRDs.

I am trying to formulate a thought experiment, imagining myself sitting in a room with LFDs covering all the walls and showing perceived images at various positions “inside and outside” the room.

Viewing a Light Field Display in a Room

I should be immersed in virtual reality, with perceived objects both close to me inside the room and far away from me outside it. The walls, which are the LFDs, should appear not to exist. At present, there are many exciting, “high” resolution perceived objects inside VR goggles, but not inside the room.

The Whale is Inside the VR Goggle, not Inside the Room

As I mentioned before, we could have an issue with focusing and convergence, as the “pixel planes” differ from the “perceived object planes”. On the other hand, if we cannot resolve the pixels because the pixel density is very high, or because we have bad eyesight, we could be focusing at the perceived object plane. At that location, the perceived pixel size will not equal the pixel size of the display: as the pixel ray propagates from the pixel plane to the perceived object plane, it diverges and forms a larger perceived pixel. The divergence of the pixel ray is easy to calculate using diffraction theory.

For a typical 65” LCD display with quad HD resolution, the pixel size is 375 µm. Assuming that a plane wave is emitted from the pixel aperture and using the simple diffraction equation, the divergence is λ/d, where λ is the wavelength and d is the pixel size. Assuming λ = 0.5 µm for this example, the divergence is 1/750. The spot size at a distance of 1 metre (1000 mm) is then the pixel size plus the diffraction spread, 0.375 mm + 1000 mm/750 ≈ 1.71 mm. This means that if one focuses at the pixel plane, the perceived pixel size is 375 µm. On the other hand, if one focuses at a perceived object plane 1 metre away from the pixel plane, the perceived pixel size is 1.71 mm, which is substantially larger than the pixel itself. If the perceived object plane is 2 metres away from the pixel plane, the perceived pixel size at the object plane grows to about 3.04 mm (0.375 mm + 2000 mm/750).
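The arithmetic above can be reproduced with a short Python sketch. It assumes a 16:9 panel and takes “quad HD” to mean 3840 horizontal pixels (four times full HD), which is the reading that yields the 375 µm pitch; the helper names are hypothetical. As in the text, the perceived pixel is modelled as the pixel width plus the λ/d divergence multiplied by the distance.

```python
import math

# Assumptions (not stated in the article): 16:9 panel, "quad HD" = 3840 pixels across.
DIAGONAL_IN = 65.0
H_PIXELS = 3840
WAVELENGTH_MM = 0.5e-3  # 0.5 µm expressed in mm


def pixel_pitch_mm(diagonal_in: float, h_pixels: int, aspect=(16, 9)) -> float:
    """Horizontal pixel pitch of a flat panel, in mm."""
    w, h = aspect
    width_in = diagonal_in * w / math.hypot(w, h)  # panel width from the diagonal
    return width_in * 25.4 / h_pixels


def spot_size_mm(pixel_mm: float, distance_mm: float,
                 wavelength_mm: float = WAVELENGTH_MM) -> float:
    """Perceived pixel size: pixel width plus diffraction spread (λ/d) × distance."""
    divergence = wavelength_mm / pixel_mm
    return pixel_mm + divergence * distance_mm


pitch = pixel_pitch_mm(DIAGONAL_IN, H_PIXELS)
print(f"pixel pitch ≈ {pitch * 1000:.0f} µm")
for d in (1000.0, 2000.0):
    print(f"perceived pixel at {d / 1000:.0f} m ≈ {spot_size_mm(pitch, d):.2f} mm")
```

Plugging in the rounded 375 µm pitch directly gives 1.71 mm at 1 m and about 3.04 mm at 2 m.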

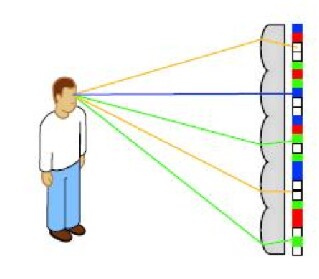

As shown in the figure, the smaller the pixel, the larger the divergence, and the larger the pixel, the smaller the divergence. As a result, there should be an optimum pixel size at which the perceived pixel size at a given distance is smallest. Here is how it goes.

Small Pixel, Large Divergence

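Let P be the pixel size, λ the wavelength, and S the perceived pixel size at a distance L from the pixel plane. Adding the diffraction spread λL/P to the pixel size gives:

$$S = \frac{\lambda L}{P} + P$$

For the smallest perceived pixel size S, the derivative dS/dP must be zero:

$$\frac{dS}{dP} = -\frac{\lambda L}{P^2} + 1 = 0$$

Solving the equation gives:

$$P = \sqrt{\lambda L}$$

$$S = 2\sqrt{\lambda L}$$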

For a wavelength of λ = 0.5 µm and a perceived pixel distance of L = 1 metre, the smallest perceived pixel size is S ≈ 1.4 mm, obtained with a display pixel size of P ≈ 0.7 mm.
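As a sanity check on the derivation, the following sketch evaluates the closed-form optimum and compares it with a brute-force scan of S(P). The function names and the scan range (0.1 mm to 3 mm in 1 µm steps) are illustrative choices, not part of the original analysis.

```python
import math


def perceived_size(p: float, lam: float, dist: float) -> float:
    """S = λL/P + P, with all lengths in the same unit (mm here)."""
    return lam * dist / p + p


def optimum(lam: float, dist: float) -> tuple[float, float]:
    """Closed form from dS/dP = 0: P = sqrt(λL) and S = 2 sqrt(λL)."""
    p = math.sqrt(lam * dist)
    return p, 2 * p


LAM_MM = 0.5e-3  # 0.5 µm
L_MM = 1000.0    # 1 m

p_opt, s_min = optimum(LAM_MM, L_MM)
print(f"closed form: P ≈ {p_opt:.2f} mm, S ≈ {s_min:.2f} mm")

# Brute-force check: scan candidate pixel sizes and keep the one with the smallest S.
grid = [0.1 + i * 0.001 for i in range(2901)]
p_best = min(grid, key=lambda p: perceived_size(p, LAM_MM, L_MM))
print(f"grid minimum at P ≈ {p_best:.3f} mm")
```

Both approaches land at P ≈ 0.71 mm and S ≈ 1.41 mm, matching the rounded 0.7 mm and 1.4 mm figures above.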

The angular resolution of the eye is about 1 arc-minute, approximately 0.017° or 0.0003 radians, so a feature can be resolved only when the viewer is closer than its size divided by 0.0003. For the eye to resolve a perceived pixel size of 1.4 mm, the viewer must be within about 4.7 metres; to resolve the original pixel size of 0.7 mm, within about 2.3 metres. If the viewer is inside the room and more than 2.3 metres from the screen, the original pixels will not be resolved. On the other hand, with the optimum pixel size of 0.7 mm producing the smallest perceived pixel size at 1 metre, the perceived pixels sit 1 metre from the screen, toward the viewer. Unless the room is very large and the viewer is more than 4.7 + 1 = 5.7 metres from the screen, the viewer will always resolve those perceived pixels. It seems that achieving high-resolution light field displays will be very challenging. A very large room is required, and the perceived objects will be relatively close to the walls, i.e. perceived objects 1 metre from the wall viewed from 5.7 metres, which is almost 20 feet away, making the VR experience non-immersive.
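The viewing-distance figures follow from dividing a feature size by the eye's angular resolution. This sketch uses the rounded 0.0003 rad value from the text (1 arc-minute is closer to 0.00029 rad, which would push the distances up by a few percent) and assumes the perceived object plane sits 1 metre in front of the wall; the function names are hypothetical.

```python
EYE_RES_RAD = 0.0003  # ~1 arc-minute, the article's rounded value
M_PER_FT = 0.3048


def max_resolving_distance_m(feature_mm: float, theta: float = EYE_RES_RAD) -> float:
    """Farthest distance (m) at which a feature of this size still subtends theta."""
    return feature_mm / 1000.0 / theta


def min_viewer_distance_from_wall_m(feature_mm: float, plane_in_front_m: float,
                                    theta: float = EYE_RES_RAD) -> float:
    """Distance from the wall beyond which features on a plane located
    plane_in_front_m in front of the wall can no longer be resolved."""
    return plane_in_front_m + max_resolving_distance_m(feature_mm, theta)


print(f"1.4 mm perceived pixel resolved within {max_resolving_distance_m(1.4):.1f} m")
print(f"0.7 mm display pixel resolved within {max_resolving_distance_m(0.7):.1f} m")
d_wall = min_viewer_distance_from_wall_m(1.4, 1.0)
print(f"viewer must be beyond {d_wall:.1f} m ({d_wall / M_PER_FT:.1f} ft) from the wall")
```

The printed values reproduce the 4.7 m, 2.3 m and 5.7 m figures in the text.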

What happens if the perceived object plane is behind the pixel plane? What will the perceived pixel size be? Honestly, I do not yet know how to approach this problem using a similar diffraction-theory approach. My first guess would be that the perceived pixel is the size of the defocused (blurred) pixel when the eye focuses at the perceived object plane. If this is the case, it will depend on the pupil size of the eye, which determines the depth of field. It would mean that the brighter the display, the smaller the pupil, the larger the depth of field, and the smaller the perceived pixel size.

On the other hand, there is a strong possibility that the eyes would tend to refocus, regardless of the convergence, so that the eye focuses on the display pixels, similar to the autofocus system of a modern camera. This refocusing scenario could also apply to the perceived objects inside the room. If this is the overriding scenario, the eye will always focus at the display pixel plane, regardless of the location of the perceived object plane.

I would welcome any inputs and suggestions regarding this scenario. Please direct any questions or comments to Kenneth Li, [email protected].