Although autonomous vehicles are grabbing most of the attention in the general press, the industry is just as obsessed with the connected car. Connectivity is required for the safest and most efficient full-automation systems, but it is also important for more limited systems and features, now and in the near future.

All of the major players, and lots of minor ones, were talking about their cloud-based technologies for collecting, pooling, and acting upon data from individual vehicles. Some systems take action and then discard the data collected. Other systems retain the data for later large-scale analysis but make the data anonymous. And yet other system owners say they will comply with applicable privacy regulations when they are instituted but will hold on to all data until then.

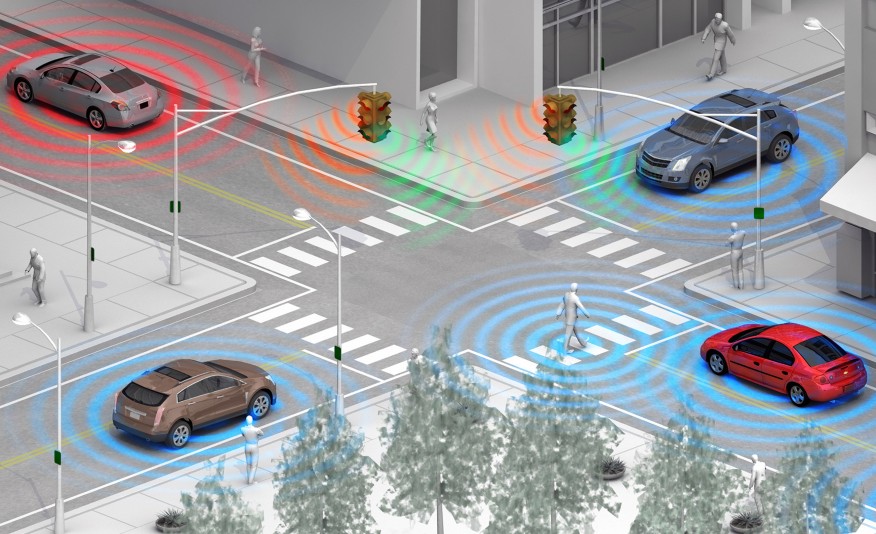

But you can do a lot without having a cloud in the sky. In December, the NHTSA issued a notice of proposed rulemaking with the intent of enabling vehicle-to-vehicle (V2V) communications on all new light-duty vehicles and “enabling a multitude of new crash-avoidance applications that, once fully deployed, could prevent hundreds of thousands of crashes every year by helping vehicles ‘talk’ to each other,” according to an NHTSA news release.

V2V Applications

What kinds of applications are possible? Here’s a simple one. If a car could transmit data about its acceleration to the car behind it, that car could more precisely maintain the vehicle-to-vehicle spacing established by its adaptive cruise control, thus reducing gas consumption, drive-train wear, and road surface wear. If cars could communicate one to the other in daisy-chain fashion, warnings of obstructions that are out of sight could be passed down the line.
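
As a rough illustration of the spacing example, the sketch below compares an adaptive cruise controller that sees only gap and relative speed with one that also receives the lead car's broadcast acceleration. The controller structure (a constant-time-gap law with a feed-forward term), the gains, and the braking scenario are all assumptions chosen for illustration; none of them comes from the NHTSA proposal or from any production system.

```python
from dataclasses import dataclass


@dataclass
class GapController:
    """Constant-time-gap spacing controller with optional V2V feed-forward.

    All parameter values are illustrative assumptions.
    """
    time_gap_s: float = 1.5    # desired headway behind the lead car
    standstill_m: float = 5.0  # desired gap at zero speed
    k_gap: float = 0.2         # gain on spacing error
    k_speed: float = 0.6       # gain on relative speed
    k_ff: float = 1.0          # weight on the lead car's broadcast acceleration

    def desired_gap(self, own_speed):
        return self.standstill_m + self.time_gap_s * own_speed

    def command(self, gap_m, own_speed, lead_speed, lead_accel=None):
        """Return the follower's acceleration command in m/s^2."""
        accel = (self.k_gap * (gap_m - self.desired_gap(own_speed))
                 + self.k_speed * (lead_speed - own_speed))
        if lead_accel is not None:  # V2V data available
            accel += self.k_ff * lead_accel
        return accel


def worst_shortfall(controller, use_v2v, dt=0.05, duration_s=20.0):
    """Lead car brakes at 3 m/s^2 for 2 s.

    Returns how far (in metres) the gap falls below the desired gap at worst.
    """
    lead_speed = own_speed = 25.0
    gap = controller.desired_gap(own_speed)
    worst = 0.0
    for step in range(int(duration_s / dt)):
        lead_accel = -3.0 if step * dt < 2.0 else 0.0
        accel = controller.command(
            gap, own_speed, lead_speed, lead_accel if use_v2v else None)
        gap += (lead_speed - own_speed) * dt
        lead_speed += lead_accel * dt
        own_speed += accel * dt
        worst = max(worst, controller.desired_gap(own_speed) - gap)
    return worst


controller = GapController()
print("shortfall without V2V:", worst_shortfall(controller, use_v2v=False))
print("shortfall with V2V:   ", worst_shortfall(controller, use_v2v=True))
```

In this toy scenario the follower with V2V data starts braking in the same instant as the lead car, so it does not dip below its desired gap, while the follower without it has to wait for a spacing or speed error to build up first.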

Covering all new vehicles with V2V and/or cloud-based systems obviously requires industry-wide standards, but systems providing particular services to a specific fleet of cars and trucks are being deployed now.

Vulog, a two-year-old company launched in Nice, provides end-to-end technology for car-sharing projects. “Car sharing” turns out to be renting a car with an app, and Vulog develops and supplies the technology that makes such a system work. This includes the in-car hardware, the mobile app, and the back-end software for fleet management, said Alex Thibault, Vulog’s VP for business development in North America.

Vulog’s system is now supporting car-sharing projects in 10 cities, with a total of 3,200 connected cars and 150,000 users. They have 1,000 cars in Vancouver and 600 in Montreal. Their latest launch was in December 2016 in Madrid with 500 cars, all of which are battery electric vehicles (BEVs). Other projects are in Nice and Copenhagen. The company initiated its U.S. business in December 2016.

There are three types of car sharing, said Thibault: (1) round trip, (2) station-based one-way, and (3) free-floating one-way. Free-floating is the most recently developed type, which permits the user to drop the car off anywhere in a “home zone.” A green LED on the dash tells the driver when he is in the home zone.
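
The home-zone indicator amounts to a geofence check. The sketch below shows one common way to do such a check, a ray-casting point-in-polygon test. Thibault did not describe how Vulog implements it, so the function names, the polygon representation, and the LED states are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y), e.g. longitude and latitude in degrees


def in_home_zone(position: Point, zone: List[Point]) -> bool:
    """Ray-casting test: True if position lies inside the polygon `zone`."""
    x, y = position
    inside = False
    for i in range(len(zone)):
        x1, y1 = zone[i]
        x2, y2 = zone[i - 1]  # previous vertex; wraps around at i == 0
        # Does this edge cross the horizontal line through the point?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:  # crossing lies to the right of the point
                inside = not inside
    return inside


def led_state(position: Point, zone: List[Point]) -> str:
    """Hypothetical dash indicator: green inside the home zone, off outside."""
    return "green" if in_home_zone(position, zone) else "off"


# Hypothetical square home zone, in arbitrary units
home_zone = [(0.0, 0.0), (4.0, 0.0), (4.0, 4.0), (0.0, 4.0)]
print(led_state((2.0, 2.0), home_zone))
print(led_state((5.0, 2.0), home_zone))
```

Treating coordinates as planar is a simplification that is only reasonable for a zone the size of a city.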

Users register to become members of the service. The Vulog app shows the member where available cars can be found. All costs, including vehicle use, depreciation, fuel, and insurance, are automatically charged to the member’s credit card on a per-mile basis. A large help button is installed beneath the dash. The button dials a help number on the member’s phone, saving the member the distraction of having to dial the phone himself.

AI Motive is a 125-person company, 120 of whom are located in Budapest. Two people maintain a U.S. office, which offers tech support, sales, and testing, and interfaces with U.S. tech companies, said investor relations representative Junting Zhao. AI Motive is an AI software company that focuses on the three key issues of self-driving vehicles: understanding the surroundings, making decisions, and plotting a safe route. Hardware has been a processing bottleneck, with the challenge being to provide high processing speed and low power consumption simultaneously. AI Motive’s IP focuses on a neural-network accelerator, which can also be used for non-automotive applications.

Although AI Motive is hardware-agnostic, they are currently using Nvidia GPUs as the hardware platform for their AI processing. New inputs are uploaded from the vehicle, decisions are made centrally, and then downloaded to the vehicle for implementation. The AI learning occurs centrally because the decision-making process must be the same for all vehicles.

Zhao went out of her way to emphasize that the software part of the autonomous vehicle problem is what AI Motive works on exclusively, which gives the company an advantage in rapid development, she said.

The larger company Mobile Eye plays on the same field as AI Motive, Zhao said, but Mobile Eye’s system is oriented toward computer vision, not AI.

The primary sensors for AI Motive are cameras (“vision first”), as is the case with Mobile Eye and Tesla. The other philosophical camp uses Lidar as the primary sensor. AI Motive focuses on vision first because it emulates human reactions. However, “Lidar system can drive places that are not yet mapped,” Zhao said. So, although video cameras are AI Motive’s primary sensor, the company also uses radar and Lidar. But, perhaps surprisingly given all the Lidar sensors on the show floor, Lidar is not available for commercial implementation yet. Putting the input from all of these sources together is called “sensor data fusion.” AI Motive’s approach fuses data early in the process.

The company’s neural networks have been trained to recognize over 100 categories of objects (such as dogs, pedestrians, vehicles), with more to come. Training occurs over millions of miles of “driving,” most of which are provided by simulated driving data.

Concept headlight cluster with integrated Quanergy Lidar sensor. Anticipated deployment: 2025. (Photo: Ken Werner)

Lidar-sensor-maker Quanergy will have sensors available for customers “perhaps by the end of this year.” When systems will be available to OEMs depends on how long it takes Quanergy’s customers to integrate their systems. Quanergy showed a concept of one of its Lidar sensors integrated into a headlamp cluster. Target introduction is 2025.

Velodyne, perhaps the largest maker of Lidar sensors, is ready to ramp up production of its Model VLP32 now. Ford plans to introduce a Lidar-based system in the 2020-21 time frame. In a large booth, Ford was showing its I.D. concept car, which is electrically powered, fully connected, and contains Velodyne Lidar sensors.

Ford developmental autonomous vehicle with Velodyne scanning Lidar sensor. (Photo: Ken Werner)

ZF was demonstrating its X2Safe cloud-based predictive AI system, which the company is developing along with vehicle OEMs and a cell-phone provider. The system tracks the positions of vehicles and (through their cell phones) pedestrians and cyclists. Objects are identified as vehicles, pedestrians, etc. by their movement patterns. Conflicting vehicles and people communicate with the cloud, not with each other. Data is acted on in the cloud and appropriate commands or warnings are sent to the conflicting objects. Then, the system deletes the data. X2Safe has been real-world tested on Los Angeles streets.
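
To make the flow concrete, here is a minimal sketch of a track, classify, check, warn, and delete loop of the kind described. It is not ZF's algorithm: the speed thresholds stand in for whatever movement-pattern classification X2Safe actually uses, the conflict check is a simple constant-velocity closest-approach calculation, and every name and parameter is hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    obj_id: str
    x: float   # position, metres
    y: float
    vx: float  # velocity, m/s
    vy: float


def classify(track):
    """Crude stand-in for movement-pattern classification (assumed thresholds)."""
    speed = math.hypot(track.vx, track.vy)
    if speed < 3.0:
        return "pedestrian"
    if speed < 9.0:
        return "cyclist"
    return "vehicle"


def closest_approach(a, b):
    """Return (time_s, distance_m) of closest approach at constant velocity."""
    rx, ry = b.x - a.x, b.y - a.y
    vx, vy = b.vx - a.vx, b.vy - a.vy
    v2 = vx * vx + vy * vy
    t = 0.0 if v2 == 0 else max(0.0, -(rx * vx + ry * vy) / v2)
    return t, math.hypot(rx + vx * t, ry + vy * t)


def find_conflicts(tracks, horizon_s=5.0, radius_m=2.0):
    """List (id_a, id_b, time_s) for pairs predicted to come within radius_m."""
    warnings = []
    for i, a in enumerate(tracks):
        for b in tracks[i + 1:]:
            t, dist = closest_approach(a, b)
            if t <= horizon_s and dist <= radius_m:
                warnings.append((a.obj_id, b.obj_id, t))
    return warnings


tracks = [
    Track("car", 0.0, 0.0, 10.0, 0.0),
    Track("ped", 30.0, -3.0, 0.0, 1.0),
    Track("bike", 100.0, 100.0, 5.0, 0.0),
]
for id_a, id_b, t in find_conflicts(tracks):
    print(f"warn {id_a} and {id_b}: predicted conflict in {t:.1f} s")
tracks.clear()  # mirror the delete-after-use step described above
```

The pairwise loop is quadratic in the number of tracked objects, which is acceptable for a sketch but would need spatial indexing at city scale.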

ZF’s X2Safe system identifies imminent collisions without local sensors. (Photo: Ken Werner)