The smart screen, an old idea, is re-emerging as an exciting new segment in consumer, industrial, and enterprise client computing. Enabled by innovations in areas such as AI, wireless connectivity, touch, video processing, and display drivers, the screen interface is evolving into a multi-functional control point that promises flexible computing (both local and tethered), strong security, and more intuitive and efficient ways to interact with a wider range of devices.

Some Definitions

Let’s start by defining terms. The new smart screens exist on a spectrum, from modest to sophisticated. At the modest end are displays that can execute a few specific apps, support WiFi connectivity, and securely dock to a nearby laptop.

At the ambitious end are systems that can run some apps locally and connect to the cloud for others. These devices are also aware of their surroundings, can identify and authenticate users through face recognition and other biometrics, and can create adaptive, immersive environments for individual users, up to and including augmented reality (AR). To see this idea in action, let’s look at some specific use cases in realistic consumer, industrial, and enterprise environments.

Some Examples in Consumer and Industrial Settings

The huge change in consumer computing behavior since the onset of the COVID-19 pandemic has swept away old notions of home computing. Today consumers work from home. They study from home. Increasingly, they play at home.

Visualize, if you will, this scenario. In that inevitable spare bedroom or corner of the dining table stands a smart screen. A member of the family sits down in front of it, and the device recognizes the individual, authenticates them via biometrics, and logs them in to their application environment both locally and in the cloud.
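The recognition step can be illustrated with one common approach to face identification: compare an embedding of the face in front of the camera against enrolled templates using cosine similarity, and accept the best match only if it clears a threshold. This is a minimal sketch under stated assumptions, not how any particular product does it. The user names, the three-element embeddings, and the 0.8 threshold are hypothetical; a real device would compute embeddings with an on-device model and add liveness checks before logging anyone in.

```python
import math
from typing import Dict, List, Optional

# Hypothetical enrolled templates: user name -> face embedding.
# On a real device these would come from an on-device face-recognition model.
ENROLLED: Dict[str, List[float]] = {
    "alice": [0.9, 0.1, 0.3],
    "bob": [0.1, 0.8, 0.5],
}

# Hypothetical threshold; in practice tuned per model to balance false accepts and rejects.
MATCH_THRESHOLD = 0.8


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two embedding vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def identify(probe: List[float]) -> Optional[str]:
    """Return the best-matching enrolled user, or None if no match clears the threshold."""
    best_user, best_score = None, -1.0
    for user, template in ENROLLED.items():
        score = cosine_similarity(probe, template)
        if score > best_score:
            best_user, best_score = user, score
    return best_user if best_score >= MATCH_THRESHOLD else None


print(identify([0.88, 0.12, 0.28]))  # prints alice
print(identify([-0.5, -0.5, 0.0]))   # prints None (nobody matches)
```

Returning None rather than the nearest user is the important design choice: an unknown person should fall through to a guest or login screen, not be logged in as whoever is closest.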

The device provides local artificial intelligence (AI) acceleration for apps. These may be for productivity (such as joining a video conference, viewing a PowerPoint presentation, or adaptive self-paced learning), multimedia (such as streaming and watching a movie), or entertainment (such as interactive gaming, or AR-based pose estimation for yoga and other sports). When the user brings a notebook PC, the smart screen recognizes it via Bluetooth and docks to it securely and wirelessly.
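One way the "recognize via Bluetooth" step might be structured is sketched here, assuming the smart screen keeps a list of notebooks the user has paired with before and treats received signal strength (RSSI) as a rough proximity cue. The device address, the -60 dBm threshold, and the three-scan debounce are hypothetical values. The secure docking itself (authentication, encryption, and the display and peripheral transport) is not modelled; the sketch only decides when to start it.

```python
from dataclasses import dataclass, field
from typing import Dict, Set

# Hypothetical: Bluetooth addresses of notebooks this user has previously paired.
TRUSTED_DEVICES: Set[str] = {"AA:BB:CC:DD:EE:01"}

RSSI_DOCK_THRESHOLD_DBM = -60   # hypothetical "close enough" signal strength
REQUIRED_CONSECUTIVE_SCANS = 3  # debounce so a laptop passing by does not trigger a dock


@dataclass
class DockController:
    """Decides when to initiate a wireless dock based on Bluetooth scan results."""
    near_counts: Dict[str, int] = field(default_factory=dict)
    docked: Set[str] = field(default_factory=set)

    def on_scan_result(self, address: str, rssi_dbm: int) -> bool:
        """Process one scan result; return True exactly when a dock should be initiated."""
        if address not in TRUSTED_DEVICES or address in self.docked:
            return False
        if rssi_dbm >= RSSI_DOCK_THRESHOLD_DBM:
            self.near_counts[address] = self.near_counts.get(address, 0) + 1
        else:
            self.near_counts[address] = 0  # device is too far away; restart the count
        if self.near_counts[address] >= REQUIRED_CONSECUTIVE_SCANS:
            self.docked.add(address)  # the secure, authenticated link setup would start here
            return True
        return False


ctrl = DockController()
for rssi in (-75, -58, -55, -52):
    print(ctrl.on_scan_result("AA:BB:CC:DD:EE:01", rssi))  # False, False, False, True
```

Requiring several consecutive strong readings trades a second or two of latency for far fewer accidental docks, which matters more on a shared family device than raw speed.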

Industrial Devices

Or consider a different setting: not the home, but a classroom, retail store, or factory. Here, the smart screen may sit on a hot desk, stand in a kiosk, be portable with WiFi or 5G connectivity, or be built into a piece of machinery. Once again, the user approaches, and the smart screen recognizes and, if appropriate, authenticates them. What happens next depends on the use case.

In an industrial application, the smart screen might, for instance, be a mobile control and service panel. A technician approaches a machine, and the smart screen recognizes the machine’s control computer via Bluetooth and docks to it wirelessly. Now the technician is holding a virtual control panel for that machine, able to observe its operation, run diagnostics and AI-guided root-cause analyses of problems, and make AR-assisted adjustments or repairs. Via 5G or WiFi, the technician may also call up a digital twin of the machine to explore the effects of changes in settings, conferencing in engineers as necessary.

Similar scenarios that leverage identification, connectivity, and personalization could play out in education, retail, and smart-city use cases.

The Technology Underpinnings of Smart Displays

Beneath all these examples lies a set of capabilities far different from the electronics in a conventional monitor. The differences fall into three areas: multimedia processing, AI inference acceleration, and wireless docking.

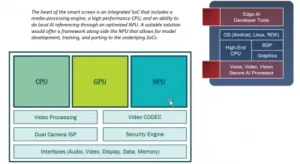

The heart of the smart screen is a media-processing engine. Far from just passing incoming video to a display, the smart screen provides a sophisticated graphics processing unit (GPU) and video decoder. But it must also accept input: from multiple microphone channels, which allow the user’s voice to be isolated from background noise, and from one or more cameras. That implies enough CPU performance for multi-channel audio processing, plus a multi-stream video processing and encoding pipeline. To stay within the cost envelope of a consumer device, these capabilities must be integrated into a single advanced SoC.
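To show why multiple microphone channels help isolate the user's voice, here is a minimal delay-and-sum beamformer, one classic technique for this job (the text does not say which method a given SoC uses). It assumes the talker's arrival delay at each microphone is already known as a whole number of samples; practical systems estimate these delays continuously, use fractional delays, and usually add adaptive filtering and noise suppression on top.

```python
from typing import List, Sequence


def delay_and_sum(channels: Sequence[Sequence[float]], delays: Sequence[int]) -> List[float]:
    """Align each microphone channel by its integer sample delay and average them.

    The talker's voice reaches each microphone at a different time. Shifting each
    channel back by that delay lines the voice up so it adds coherently, while
    sound arriving with a different delay pattern stays misaligned and is attenuated.
    """
    if len(channels) != len(delays):
        raise ValueError("need one delay per channel")
    length = min(len(ch) - d for ch, d in zip(channels, delays))
    out = []
    for n in range(length):
        total = sum(ch[n + d] for ch, d in zip(channels, delays))
        out.append(total / len(channels))
    return out


# Contrived two-microphone example: the voice reaches mic1 one sample after mic0,
# while an interferer reaches both microphones at the same time.
voice = [1.0, -1.0, 1.0, -1.0, 0.0]
interferer = [0.5, -0.5, 0.5, -0.5, 0.5]
mic0 = [v + i for v, i in zip(voice, interferer)]
mic1 = [v + i for v, i in zip([0.0] + voice[:-1], interferer)]

print(delay_and_sum([mic0, mic1], delays=[0, 1]))  # [1.0, -1.0, 1.0, -1.0]
```

The example is rigged so the interferer cancels exactly after alignment; with real noise the benefit is partial and grows with the number of microphones, which is why a multi-channel array needs the extra CPU headroom the text mentions.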

Two areas in particular deserve a closer look. The first is video processing. To support the wide range of use cases we have described, from streaming online learning video to multi-person video conferencing with both live and streamed content, the platform must decode multiple video streams up to a total budget of 4K UHD progressive scan at 120 Hz, and encode multiple streams up to a total budget of 1080p at 120 Hz. Alongside this video codec, a dual-display controller can drive two screens simultaneously, with features such as extend and mirror. Finally, to provide the memory bandwidth for this video traffic and the other use cases we have discussed, the memory interface must be wide enough (e.g., at least 64 bits).
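The decode and encode budgets are easiest to reason about as pixel rates. This sketch converts the totals named in the text into pixels per second and checks whether a given mix of concurrent streams fits; it also computes the theoretical peak bandwidth of a memory interface from its width and transfer rate. The stream mix and the 3200 MT/s transfer rate used in the usage lines are illustrative assumptions, not product specifications.

```python
from typing import Iterable, Tuple

Stream = Tuple[int, int, int]  # (width, height, frames per second)

# Totals named in the text, expressed as pixels per second.
DECODE_BUDGET = 3840 * 2160 * 120  # 4K UHD at 120 Hz = 995,328,000 px/s
ENCODE_BUDGET = 1920 * 1080 * 120  # 1080p at 120 Hz = 248,832,000 px/s


def pixel_rate(streams: Iterable[Stream]) -> int:
    """Total pixels per second across a set of concurrent streams."""
    return sum(w * h * fps for w, h, fps in streams)


def fits(streams: Iterable[Stream], budget: int) -> bool:
    """True if the combined pixel rate stays within the budget."""
    return pixel_rate(streams) <= budget


def peak_bandwidth_gb_s(bus_width_bits: int, transfers_per_s: float) -> float:
    """Theoretical peak DRAM bandwidth in GB/s: bytes per transfer x transfers per second."""
    return bus_width_bits / 8 * transfers_per_s / 1e9


# Illustrative mix: four 1080p60 conference tiles plus one 4K30 shared-content stream.
conference = [(1920, 1080, 60)] * 4 + [(3840, 2160, 30)]
print(pixel_rate(conference), fits(conference, DECODE_BUDGET))  # 746496000 True

# Assumed 64-bit interface at 3200 mega-transfers per second.
print(peak_bandwidth_gb_s(64, 3200e6))  # 25.6
```

By this measure the 4K120 decode budget is equivalent to, for example, two 4K streams at 60 Hz or eight 1080p streams at 60 Hz. Peak interface bandwidth is only an upper bound: sustained bandwidth is lower, and every video stream consumes it several times over as frames are written, read for processing, and read again for display.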

Second, and just as important, is the ability to perform local AI inference on an optimized Neural Network Processing Unit (NPU) that is small enough to be just one block on the SoC. A suitable solution would offer a framework alongside the NPU for model development, training, and porting to the underlying SoCs. Models could then be securely inserted into the video pipeline, allowing the processor to analyze camera input and post-process decoded video: for example, to identify persons or objects in front of the monitor, or to perform super-resolution scaling.
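The idea of inserting models into the video pipeline can be sketched as a chain of stages, each taking a frame plus shared per-frame metadata. Both model stages here are hypothetical stand-ins so the example runs without an NPU or SDK: `detect_objects` merely boxes bright pixels where a detection network would locate people or objects, and `upscale_2x` does nearest-neighbour upscaling where a super-resolution network would reconstruct detail. The framework the text mentions, and the security of model insertion, are not modelled.

```python
from typing import Any, Callable, Dict, List, Tuple

Frame = List[List[int]]    # grayscale frame, row-major pixel values 0-255
Metadata = Dict[str, Any]  # per-frame results shared between stages
Stage = Callable[[Frame, Metadata], Frame]


def detect_objects(frame: Frame, meta: Metadata) -> Frame:
    """Stand-in for an NPU detection model: records the bounding box of bright pixels."""
    hits = [(r, c) for r, row in enumerate(frame) for c, v in enumerate(row) if v > 128]
    if hits:
        rows = [r for r, _ in hits]
        cols = [c for _, c in hits]
        meta["bbox"] = (min(rows), min(cols), max(rows), max(cols))
    else:
        meta["bbox"] = None
    return frame  # analysis stage: the frame passes through unchanged


def upscale_2x(frame: Frame, meta: Metadata) -> Frame:
    """Stand-in for a super-resolution model: nearest-neighbour 2x upscale."""
    out: Frame = []
    for row in frame:
        wide = [v for v in row for _ in range(2)]  # duplicate each pixel horizontally
        out.append(wide)
        out.append(list(wide))                     # duplicate each row vertically
    return out


def run_pipeline(frame: Frame, stages: List[Stage]) -> Tuple[Frame, Metadata]:
    """Run a frame through each stage in order, collecting metadata along the way."""
    meta: Metadata = {}
    for stage in stages:
        frame = stage(frame, meta)
    return frame, meta


decoded = [[0, 200], [0, 0]]
out, meta = run_pipeline(decoded, [detect_objects, upscale_2x])
print(meta["bbox"])           # (0, 1, 0, 1)
print(len(out), len(out[0]))  # 4 4
```

Keeping analysis stages, which only annotate, separate from post-processing stages, which change the frame, lets the same pipeline structure serve both camera input and decoded video.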

A Full Solution

A complete smart screen platform certainly requires media processing and AI acceleration. But it also requires all the other blocks that go into a compelling implementation of the use cases we have discussed: high-performance software execution, graphics processing, peripheral controllers, and memory interfaces. And it requires the SDKs, application examples, and support to get a smart-screen vendor into the market quickly. Equally important, a full solution has security woven in from the beginning, not tacked on at the end.

The smart-screen opportunity is broad and diverse and will develop rapidly, and many companies may jump in quickly while underestimating the difficulty of a full, secure solution. In such an environment, a silicon and solutions partner already in the market with a proven SoC, application libraries, and a hardware-based security approach that has withstood the scrutiny of the demanding video content protection lobby can be an invaluable asset in gaining fast market access with a differentiated, winning product. (ZD)

Thanks to Synaptics for making this article available outside the Display Daily paywall, so that it does not count as one of your two free articles if you are not a subscriber.

Zafer DIAB – Director of Product Marketing – Edge SOC

Zafer joined Synaptics in March 2018 and serves as Director of Product Marketing for the edge AI SoC line of business. Before Synaptics, Zafer held various product marketing, business development, and sales roles at Amlogic, STMicroelectronics, and Zenverge. Before moving into business roles, he managed software engineering at Broadcom and Conexant. He received his bachelor’s and master’s degrees in electrical engineering from École Polytechnique de Montréal in Canada.