Home delivery customers of the Sunday New York Times for November 8th got a cardboard VR viewer designed to work with a smartphone. Since Meko had previously published an article for its subscription customers about the announcement of this project (Google & NYT to Give Away VR Viewers), I’ll just discuss how it worked out in practice. This demonstration was being run in conjunction with Google, who obviously had a major role in generating the content.

First, the cardboard VR viewer worked as expected with the iPhone 5C I used to test the system. The app downloaded correctly from the Apple App Store and the companion 11-minute video, “The Displaced,” downloaded from the NY Times website correctly. It was a relatively long download, however, since 360° 3D VR files are big. The video then played correctly on the iPhone. Reportedly, the NY Times distributed more than a million of these units. Presumably, on Saturday morning when most people got this viewer, there was a large number of people trying to download the app and content simultaneously, but I didn’t have any problems with an overloaded server.

Since the FOV of the left and right eyes only overlap at the center, the right portion of one image matches the left portion of the other image. This overlap area is the only region for stereoscopic 3D imaging.

Using the iPhone and the cardboard viewer, you could tip your head up and view the sky, down to view the ground and rotate your head 360° to see the complete horizon. Again, this worked as expected and there seemed to be little lag time between rotating your head and the image changing to match the direction you were now pointing. More on this later, however.

The catch phrase printed on the cardboard viewer was “Experience the future of news at nytimes.com/vr”. Well, maybe not yet. While everything worked as expected, there were two major problems with this demonstration: the VR system and the content.

The VR system worked as expected, but that’s because the expectations for VR aren’t very high. The iPhone 5C has a 4″ screen with a 640×1136 pixel array providing 326 ppi when viewed directly. This may not be the highest resolution iPhone available, but it’s still considered a retina display. Even so, the display resolution was not nearly high enough to show ‘Virtual Reality’. The image was very strongly pixelated and even the RGB sub-pixels were visible. In virtual reality, even with dedicated VR systems not based on smartphones, visible pixels seem to be the norm, but they prevent any sense of reality. A secondary problem was the fact that cardboard dust got on the iPhone screen, also interfering with any sense of immersion in the content. Like the pixels themselves, the dust was magnified by the lenses in the cardboard viewer.
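To put a rough number on that pixelation, the sketch below estimates the angular resolution of the iPhone 5C when its screen is split between two eyes. The 640×1136 pixel array is the figure quoted above; the 90° per-eye field of view and the 60 pixels-per-degree acuity benchmark (about one arcminute per pixel) are assumptions for illustration, not measurements of this viewer, and the calculation ignores lens distortion and any screen area the viewer masks off.

```python
# Rough angular-resolution estimate for a phone in a split-screen viewer.
LONG_SIDE_PX = 1136        # iPhone 5C long side in pixels (from the article)
ASSUMED_FOV_DEG = 90.0     # assumption: horizontal field of view per eye
ACUITY_PX_PER_DEG = 60.0   # assumption: about 1 arcminute per pixel


def pixels_per_degree(long_side_px: float, fov_deg: float, eyes: int = 2) -> float:
    """Average pixels per degree when the long side is shared between the eyes."""
    per_eye_px = long_side_px / eyes
    return per_eye_px / fov_deg


ppd = pixels_per_degree(LONG_SIDE_PX, ASSUMED_FOV_DEG)
shortfall = ACUITY_PX_PER_DEG / ppd

print(f"Per-eye width: {LONG_SIDE_PX // 2} px")
print(f"Average resolution: {ppd:.1f} px/degree")
print(f"Shortfall versus assumed acuity: {shortfall:.1f}x")
```

Under these assumptions, each eye gets 568 pixels spread over 90°, or roughly 6 pixels per degree, about a tenth of the assumed acuity benchmark. A different field of view changes the number but not the order of magnitude.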

Besides the cardboard VR viewer, there were two other ways to view “The Displaced.” First, you could view it directly on the phone’s screen. It seemed to run smoothly but I’m not accustomed to viewing video on a tiny screen so there was no sense of immersion. Again, as you moved the phone around, you could see the sky and what was beside and behind the camera.

The video could also be viewed on your computer screen, if you have the patience to download it. (I need to check my Internet connection speed – this might not be a universal problem.) When viewing it on your computer screen, you could use the mouse to move the image around and again see what is behind the camera. I’m a regular user of Google Street View and the conventions for moving this image were the reverse of the conventions used by Street View. Annoying, and the last thing the NY Times and Google want to do is annoy customers. Generally speaking, the subtitles were viewable on my 16:9 computer monitor, although they could be distorted.

The content also had serious problems. It was generated by a multi-camera system similar to the camera used to generate Street View. While the camera was never visible, of course, the shadow of the camera could be seen in some of the outdoor scenes and it looked like the shadow you sometimes see in Street View. In one scene, I could see a child holding what looked like a selfie stick that terminated in mid-air. The Google Street View camera can only generate 2D images, so any 3D came from 2D to 3D conversion. Producing content like this can obviously cause major problems for production, especially in an open field where there was no place for the production crew to hide. On the other hand, allowing the production crew to be visible would also interfere with the immersive, VR nature of the story.

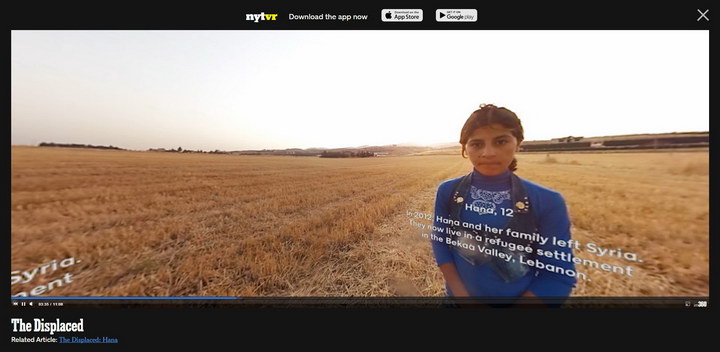

This wasn’t the worst of the problems with the content, however. “The Displaced” is the story of three children displaced by conflicts in Ukraine, Syria and South Sudan, told in their own words. Unfortunately, their words were not in English and relatively few viewers of the video speak Ukrainian, Arabic or Sudanese, let alone all three. The NY Times put in subtitles translating all three voices into English. Unfortunately, these subtitles were fixed relative to the image, so if you turned your head to the side, they went off the screen. They were repeated at intervals around the image, but you could still be looking in a direction with no subtitles so you couldn’t understand what was being said. The children’s voices appeared to be voice-over. While there was sound captured with the images, there was no translation offered for this sound.
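The gaps between repeated subtitles can be illustrated with a little geometry. The sketch below checks whether any copy of a subtitle fits entirely inside the viewer’s field of view for a given head direction. All the numbers are hypothetical: I don’t know the actual spacing of the subtitle copies, their angular width, or the viewer’s field of view, so the three copies at 120° intervals, the 40° subtitle width and the 90° field of view are assumptions chosen only to show the effect.

```python
def angular_distance(a_deg: float, b_deg: float) -> float:
    """Smallest absolute difference between two yaw angles, in degrees."""
    diff = (a_deg - b_deg) % 360.0
    return min(diff, 360.0 - diff)


def subtitle_visible(head_yaw_deg: float, subtitle_yaws_deg: list[float],
                     fov_deg: float, subtitle_width_deg: float) -> bool:
    """True if at least one subtitle copy lies fully inside the field of view."""
    # A copy fits completely only if its center is within this many degrees
    # of the direction the viewer is facing.
    half_window = (fov_deg - subtitle_width_deg) / 2.0
    return any(angular_distance(head_yaw_deg, yaw) <= half_window
               for yaw in subtitle_yaws_deg)


# Hypothetical layout: three copies spaced evenly around the 360° image.
copies = [0.0, 120.0, 240.0]
for yaw in (0.0, 60.0, 350.0):
    print(yaw, subtitle_visible(yaw, copies, fov_deg=90.0, subtitle_width_deg=40.0))
```

With this assumed layout, a viewer facing 60° sits midway between two copies and can read neither in full. Subtitles locked to the viewer’s head direction rather than to the image would avoid the gap, at the cost of floating in front of the scene.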

While any given subtitle was fixed relative to its image, a different subtitle would be in a different position relative to the 360° image. To read them, you had to rotate your head to find the next subtitle. Sometimes you couldn’t find and center the subtitle in time before it disappeared again.

Since my neck won’t rotate 360°, it was necessary to stand up and turn around to see what was behind me. With your vision obscured by the VR headset, this is not recommended for people with balance problems. Because I didn’t know where the focus of attention should be or where the next subtitle would appear, this swiveling was a requirement, not an option. Perhaps an office swivel chair would be appropriate.

While the content is potentially a powerful story, I don’t think VR added anything to it. Quite the contrary, the need for the viewer to focus on the VR aspects of the storytelling obscured the story itself. This was a nice try on the part of the New York Times and Google, but I don’t believe it was even a near miss. Presumably, the main purpose of this was a mass experiment to see how people would react to a VR news story, plus a learning experience for the Times on making live VR content of news topics. Perhaps in their next attempt, they will have learned enough so the VR will be better and less intrusive. Frankly, I wouldn’t bet on it, though. –Matthew Brennesholtz