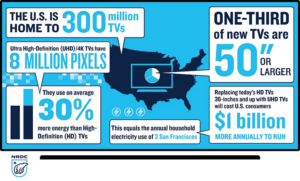

In a new report, the USA’s Natural Resources Defense Council (NRDC) has said that UltraHD TVs use, on average, 30% more energy than HD (1920 x 1080) models. The Council estimates that this could add $1 billion to US consumers’ utility bills if energy-saving improvements are not extended to all models.

Not all UltraHD TVs are equal in their energy consumption, said NRDC senior scientist Noah Horowitz. “We found an almost three-fold difference in energy consumption between the best and worst UHD TVs”, he said, “with some models using little or no more energy than their HD predecessors, proving the technology already exists to cut needless energy waste in these large televisions”.

To reach its conclusions, the NRDC and partner Ecos Research analysed public databases of UltraHD TV energy use and market share sales data. They also performed power use measurements on 21 TVs, representing a cross-section of 2014 and 2015 models. The testing focused on 55″ sets.

There are 300 million TVs installed in the USA. Without additional efficiency improvements, the NRDC estimates that switching from 36″+ HD TVs to UltraHD sets would cause the USA’s annual energy consumption to rise by 8 billion kWh. This, the report notes, is as much energy as is generated by 2.5 large (500 MW) power plants. The switch would also generate 5 million additional tons of carbon pollution.
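As a rough sanity check on these figures, the short Python sketch below works out what the power-plant comparison implies. The variable names are illustrative, and two things are assumptions rather than statements from the report: that the plants are compared on annual output over 8,760 hours, and that the $1 billion in added bills corresponds to the same 8 billion kWh.

```python
# Figures quoted in the article
extra_kwh_per_year = 8e9      # additional annual consumption, kWh
plant_count = 2.5             # "2.5 large power plants"
plant_capacity_mw = 500       # rated capacity of each plant, MW
extra_cost_usd = 1e9          # added utility bills, USD

HOURS_PER_YEAR = 365 * 24     # 8,760 h (assumption: non-leap year)

# Energy the plants would produce running flat out all year (MW -> kW)
full_output_kwh = plant_count * plant_capacity_mw * 1000 * HOURS_PER_YEAR

# Share of full output needed to match the 8 billion kWh figure
implied_capacity_factor = extra_kwh_per_year / full_output_kwh

# Electricity price implied if both headline numbers describe one scenario
implied_price_usd_per_kwh = extra_cost_usd / extra_kwh_per_year

print(f"Full-year output of the plants: {full_output_kwh / 1e9:.2f} billion kWh")
print(f"Implied capacity factor: {implied_capacity_factor:.0%}")
print(f"Implied electricity price: ${implied_price_usd_per_kwh:.3f}/kWh")
```

On these assumptions the plants would need to run at roughly 73% of their rated capacity over the year, and the implied electricity price is 12.5 cents per kWh.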

In addition, the report found that enabling high dynamic range (HDR) increases energy use: an HDR version of a film used 47% more power than the standard UltraHD version.

However, there was some good news. Ambient light sensors, which automatically adjust screen brightness, cut TV power use by 50% on average (savings vary by model, from 17% to 93%). Consumers can also cut costs by buying TVs with the Energy Star label and by not using the Quick Start feature, which wastes power in standby mode.