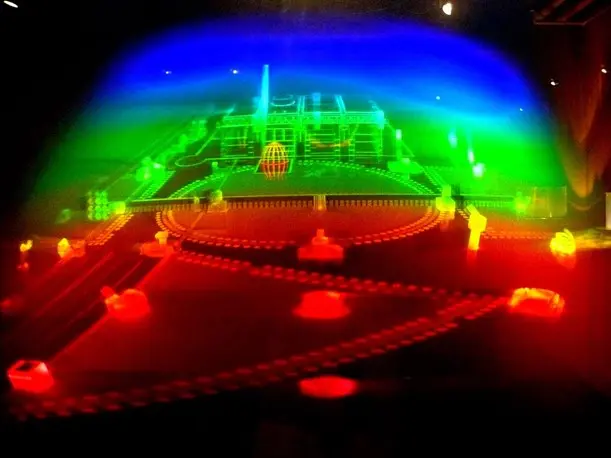

Researchers from Chiba University in Japan have developed a deep learning-based technique that can transform ordinary 2D color images into 3D holograms. The approach, described in a paper published online in *Optics and Lasers in Engineering*, uses three deep neural networks (DNNs) and does not require specialized 3D cameras.

Holograms provide immersive 3D experiences, but generating them has historically been computationally intensive, which has limited their practical use. Recently, deep learning methods have been proposed to create holograms directly from 3D data captured by RGB-D cameras, which record both color (RGB) and per-pixel depth (D).
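To see why conventional computation is expensive, consider the classic point-cloud method for computer-generated holograms. This is a textbook physics-based technique, not the Chiba team's DNN approach. The sketch below is a minimal illustration that assumes a 532 nm wavelength and an 8 µm pixel pitch, both chosen only for illustration: each pixel of an image with known depth is treated as a point light source, and every hologram pixel sums the spherical wave exp(ikr)/r arriving from every point. The function name `point_cloud_hologram` is hypothetical.

```python
import cmath
import math

# Assumed optical parameters (illustrative only, not taken from the paper).
WAVELENGTH = 532e-9            # green laser wavelength, metres
PITCH = 8e-6                   # pixel pitch of image and hologram, metres
K = 2 * math.pi / WAVELENGTH   # wavenumber

def point_cloud_hologram(intensity, depth):
    """Compute a phase-only hologram from an image and its depth map.

    Each image pixel is a point source at distance depth[y][x] (metres)
    from the hologram plane. Each hologram pixel sums the spherical waves
    a * exp(i*k*r) / r from all points. Returns (phases, evaluations),
    where evaluations counts how many wave terms were computed.
    """
    h, w = len(intensity), len(intensity[0])
    phases = [[0.0] * w for _ in range(h)]
    evaluations = 0
    for hy in range(h):
        for hx in range(w):
            field = 0j
            for py in range(h):
                for px in range(w):
                    a = intensity[py][px]
                    if a == 0:
                        continue  # dark pixels emit no light
                    dx = (hx - px) * PITCH
                    dy = (hy - py) * PITCH
                    r = math.sqrt(dx * dx + dy * dy + depth[py][px] ** 2)
                    field += a * cmath.exp(1j * K * r) / r
                    evaluations += 1
            phases[hy][hx] = cmath.phase(field)  # keep only the phase
    return phases, evaluations

# Usage: a uniform 16 x 16 image split into two depth planes (5 cm and 10 cm).
img = [[1.0] * 16 for _ in range(16)]
dep = [[0.05 if x < 8 else 0.10 for x in range(16)] for _ in range(16)]
phases, n = point_cloud_hologram(img, dep)
print(n)  # 65536 = 256 points x 256 hologram pixels
```

The work grows with the product of the number of points and the number of hologram pixels. The tiny example already needs 256 × 256 = 65,536 wave evaluations, while a 1920 × 1080 image computed the same way would need roughly 4.3 × 10^12. Faster FFT-based layer methods exist, but this scaling helps explain the appeal of learned approaches that produce a hologram in a single network pass.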

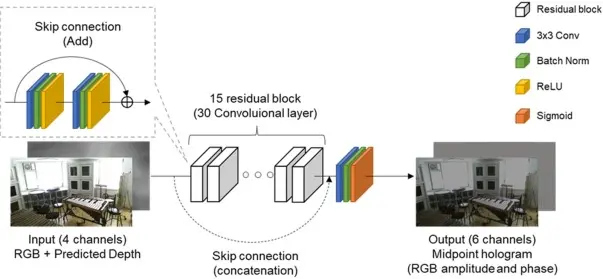

The new technique from Chiba University streamlines hologram generation further by using 2D images from conventional cameras as input. The first DNN predicts depth information from the 2D image. The second DNN then uses both the 2D image and depth map to generate a hologram. Finally, the third DNN refines the hologram for display.
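The three-stage data flow described above can be sketched as a chain of swappable functions. This is a hypothetical skeleton, not the researchers' code: `run_pipeline`, `toy_depth`, `toy_hologram`, and `toy_refine` are illustrative names, and the toy stages are crude placeholders (brightness as a depth proxy, a made-up phase mapping, and 8-bit phase quantization as an assumed display step) that only mimic the inputs and outputs of the trained networks.

```python
import math
from typing import Callable, List

Image = List[List[List[float]]]  # H x W x 3 (RGB), values in [0, 1]
Map = List[List[float]]          # H x W single-channel array

def run_pipeline(rgb: Image,
                 depth_net: Callable[[Image], Map],
                 hologram_net: Callable[[Image, Map], Map],
                 refine_net: Callable[[Map], Map]) -> Map:
    """Chain the three stages: 2D image -> depth map -> hologram -> refined hologram."""
    depth = depth_net(rgb)               # stage 1: depth predicted from the 2D image alone
    hologram = hologram_net(rgb, depth)  # stage 2: hologram from image AND depth map
    return refine_net(hologram)          # stage 3: refinement for display

def toy_depth(rgb: Image) -> Map:
    # Hypothetical placeholder: mean brightness used as a crude depth proxy.
    return [[sum(px) / 3 for px in row] for row in rgb]

def toy_hologram(rgb: Image, depth: Map) -> Map:
    # Hypothetical placeholder: maps brightness x depth to a phase in [0, 2*pi).
    return [[(2 * math.pi * (sum(px) / 3) * d) % (2 * math.pi)
             for px, d in zip(row, drow)]
            for row, drow in zip(rgb, depth)]

def toy_refine(hologram: Map, levels: int = 256) -> Map:
    # Hypothetical placeholder: quantizes each phase to one of `levels` steps,
    # as an 8-bit phase-only display might require (an assumption).
    step = 2 * math.pi / levels
    return [[(round(p / step) % levels) * step for p in row] for row in hologram]

rgb = [[[0.2, 0.4, 0.6], [1.0, 1.0, 1.0]],
       [[0.0, 0.0, 0.0], [0.5, 0.5, 0.5]]]
refined = run_pipeline(rgb, toy_depth, toy_hologram, toy_refine)
```

Passing each stage in as a function keeps the structure explicit: only the first stage sees the image alone, the second receives both the image and the predicted depth map, and trained models could replace the placeholders without changing the chain.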

In tests, the researchers report that the system generated holograms faster than a high-end graphics processing unit (GPU). The resulting holograms also reproduced natural 3D depth cues. Because the trained system needs only ordinary 2D images as input, it is less costly than methods that require depth data from RGB-D cameras.

The lead researcher, Prof. Tomoyoshi Shimobaba, said the ease of generating holograms could enable applications in medical imaging, manufacturing, and virtual reality. Near-term uses could include automotive head-up displays and augmented reality devices. The research demonstrates deep learning’s potential for simplified hologram creation.

Reference

Ishii, Y., Wang, F., Shiomi, H., Kakue, T., Ito, T., & Shimobaba, T. (2023). Multi-depth hologram generation from two-dimensional images by deep learning. Optics and Lasers in Engineering, 170, 107758. https://doi.org/10.1016/j.optlaseng.2023.107758