A few weeks ago, “Photon – Particle or Wave? Light Field – Wave or Ray?” looked at whether, in designing display optics, you really need to decide whether to work as though light is a particle or a wave.

What happens to the images from the VR, AR, and the “Light Field” displays we encounter these days? Again, are they in the ‘Wave’ or ‘Ray’ world?

I would say that they are in the ray world. The reason is very simple.

These VR, AR, and “Light Field” displays do not generate “wavefronts” of the objects; instead, they generate “rays” from the display pixels replicating the rays from the original illuminated object. For simplicity, I will call all these displays, simply, Pixel Ray Displays (PRD) in subsequent descriptions, as the term “Light Field” could be misleading. At a certain viewing distance and direction, rays are generated for the viewer such that the viewers will see the images from the multiple rays and perceive that they are real. There are also pixel rays generated over different viewing directions such that when the viewers move relative to the Pixel Ray Displays, different perspectives will be seen by the viewers.

One main issue with such displays is the mismatch between the focusing and the convergence of the eyes, as described in the previous article on this subject, “Analogue Light Field Displays are More Convincing than Digital”.

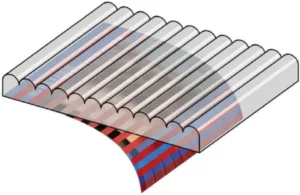

The simplest Pixel Ray Display is the common stereoscopic 3D display where two different perspectives are displayed, one for the left eye and one for the right eye. The images of the two perspectives are either printed or displayed, with alternating left and right vertical columns with certain spacing and placed underneath a linear lenticular lens with matching spacing, such that the viewer will see the left perspective columns with the left eye and the right perspective columns with the right eye. When these two perspectives are merged together in the brain, the image will be perceived as 3-dimensional.
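The column interleaving described above can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the function name `interleave_columns` is made up for this example, images are plain lists of rows, and even columns are assigned to the left eye and odd columns to the right eye. In a real print or display, which column reaches which eye depends on the lens pitch and alignment.

```python
def interleave_columns(left, right):
    """Interleave two equal-sized images column by column.

    Images are lists of rows; each row is a list of pixel values.
    Assumption: even output columns come from the left view and
    odd output columns come from the right view.
    """
    same_rows = len(left) == len(right)
    if not same_rows or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("left and right images must have the same shape")
    out = []
    for row_l, row_r in zip(left, right):
        row = []
        for x in range(len(row_l)):
            # Alternate the source view by column parity.
            row.append(row_l[x] if x % 2 == 0 else row_r[x])
        out.append(row)
    return out


# Toy images: every left pixel is "L", every right pixel is "R".
left = [["L"] * 6 for _ in range(2)]
right = [["R"] * 6 for _ in range(2)]
interleaved = interleave_columns(left, right)
print("".join(interleaved[0]))  # LRLRLR
```

Note that in this sketch each eye receives only half of the columns, so each perspective has half the horizontal resolution of the panel or print.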

In this case, the viewer focuses the eyes on the lenticular lens, which is usually laminated or attached to the front of the print or display, regardless of where the perceived object is located, whether in front of or behind the display screen. Another issue with such displays is that when the viewers move their heads slightly left and right, the same two images are still being displayed, so the viewers will not see a different perspective; instead, the 3D image will be perceived with slight distortion.
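To put rough numbers on the focusing point above, here is a minimal sketch that compares where the eyes focus (the screen) with where they converge (the perceived object). The interpupillary distance and the two distances are assumed values chosen only for illustration, and the helper names are hypothetical. The mismatch is expressed in diopters, that is, the difference of the reciprocal distances in metres.

```python
import math


def vergence_angle_deg(ipd_m, distance_m):
    """Angle between the two lines of sight when both eyes fixate a point
    straight ahead at the given distance."""
    return math.degrees(2.0 * math.atan((ipd_m / 2.0) / distance_m))


def focus_mismatch_diopters(screen_m, object_m):
    """Difference between the focusing distance (the screen) and the
    convergence distance (the perceived object), in diopters."""
    return abs(1.0 / screen_m - 1.0 / object_m)


IPD = 0.063     # assumed interpupillary distance, in metres
SCREEN = 0.50   # assumed distance to the lenticular sheet, in metres
OBJECT = 0.25   # assumed perceived object distance, in front of the screen

print(round(vergence_angle_deg(IPD, SCREEN), 2))   # convergence for the screen
print(round(vergence_angle_deg(IPD, OBJECT), 2))   # convergence for the object
print(focus_mismatch_diopters(SCREEN, OBJECT))     # 2.0
```

With these assumed numbers the eyes converge as if the object were at 0.25 m while still focusing at 0.5 m, a mismatch of 2 diopters.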

Such 3D displays are generally classified as autostereoscopic displays and are commonly available as printed pictures or in 3D televisions. Other two-perspective schemes, which unlike autostereoscopic displays require eyewear, include the classic two-color prints with matching colored eyeglasses, polarized eyeglasses with matching polarizing displays, and shutter glasses with matching alternating frames. These schemes have been used in 3D televisions and in movies shown in Dolby and IMAX cinemas.

Overcoming the Limitations

To overcome the limitation of having only two perspectives, more advanced 3D displays have multiple perspectives in the horizontal direction, such that when the viewer moves the head left and right, new perspectives can be perceived. The basic requirement is that each perspective needs one frame of image taken from the corresponding viewing direction. For example, many printed 3D pictures laminated with a 1-dimensional lenticular lens sheet have over 10 perspectives in the horizontal direction. The horizontal resolution is usually determined by cost, the limits of printing resolution, and the feature size of the molded lenticular lens.

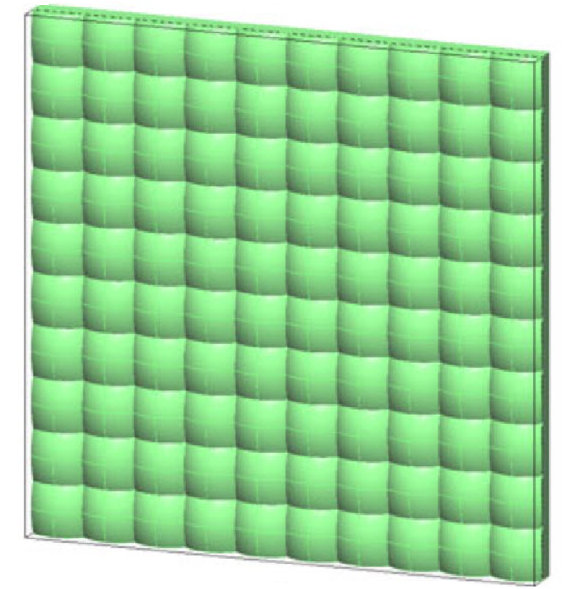

Extending the system further, multiple perspectives in the vertical direction have also been developed using a 2-dimensional micro lens array instead of a 1-dimensional lenticular lens. The corresponding images for all the perspectives need to be captured by the camera system in both the horizontal and vertical directions. For example, for a 10 by 10 perspective Pixel Ray system, 100 perspective images have to be captured simultaneously. Such “Light Field” cameras capture the image through the aperture of the camera lens, with the maximum perspective limited by the extent of the aperture. These cameras usually place a micro lens array or an aperture mask in front of an image sensor with 2-dimensional pixels. When the image sensor pixel information is analyzed, the 100 perspective images can be generated and displayed by the Pixel Ray display system.
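As a rough illustration of how perspective images can be pulled out of the sensor pixels, here is a sketch under an idealized assumption: every micro lens covers exactly an n by n block of pixels aligned to the pixel grid, and the pixel at offset (u, v) under each micro lens belongs to perspective (u, v). The function name `extract_perspectives` is made up for this example, and real cameras need calibration and resampling that are not shown here.

```python
def extract_perspectives(sensor, n):
    """Split a raw sensor image into n * n perspective images.

    sensor: list of rows of pixel values; height and width must be
    multiples of n (idealized assumption: one n-by-n block per lenslet).
    Returns a dict mapping (u, v) to the image built from the pixel at
    offset (u, v) under every lenslet.
    """
    height, width = len(sensor), len(sensor[0])
    if height % n or width % n:
        raise ValueError("sensor size must be a multiple of n")
    views = {}
    for v in range(n):        # vertical offset under each lenslet
        for u in range(n):    # horizontal offset under each lenslet
            views[(u, v)] = [
                [sensor[y][x] for x in range(u, width, n)]
                for y in range(v, height, n)
            ]
    return views


# Toy sensor: 4 by 4 lenslets, each covering 10 by 10 pixels.
# Each pixel stores its own offset under its lenslet, so the result is easy to check.
N = 10
sensor = [[(x % N, y % N) for x in range(40)] for y in range(40)]
views = extract_perspectives(sensor, N)
print(len(views))                                  # 100
print(len(views[(0, 0)]), len(views[(0, 0)][0]))   # 4 4
```

In the toy sensor, every pixel in `views[(3, 7)]` carries the offset `(3, 7)`, which confirms that each perspective collects the same position from under every micro lens.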

The viewer would see the object from many directions while moving the head from side to side and up and down, with the perception that the object is really there, within the extent of the aperture of the camera lens. Stanford, MIT, and others have reported displaying such pixel ray images using two LCD panels: the first panel acts as the pixel generator, and the second panel, placed in front of the first, forms variable apertures by turning its pixels on and off. The output from the first LCD panel, together with the apertures formed by the second LCD panel, generates the appropriate pixel rays for the viewer.

Extracting such perspectives from the original image sensor pixels has an efficiency of only about 10% to 20%. That is to say, the number of resolvable pixels in each perspective is about 10% to 20% of the total number of pixels in the image sensor. Since the locations of the features of the object can be calculated within the extent of the aperture of the camera lens, extended perspectives can also be computed by interpolation and extrapolation. This provides an even wider perspective for viewing beyond the original camera lens aperture, or fills in the missing in-between perspectives that are not physically captured by the optical system.

In theory, as the number of perspectives increases, the number of pixels increases and the pixel size decreases. At the mathematical limit, where the number of pixels approaches infinity and the pixel size approaches zero, we enter, by definition, the scary mathematical world of multiple integrals, without the approximation of pixels and “numerical analysis”. Are we in the Wave world now?

Are We in the Wave World?

The answer no longer depends on how you look at it. I believe that mathematically we “could be” in the Wave world, by definition, but no one would know in practice, as we are still dealing with the practical world, with a finite number of pixels from the image sensor. We are still talking about many, many pixel rays, and not wavefronts. We simply cannot resolve the pixels as their number grows and their size shrinks. That’s all. As a matter of fact, if we have bad eyesight, we get to this situation much sooner, without too many pixel rays.

Again, we are still in the Ray world, by definition. On the other hand, since we cannot make out the individual pixels, we probably do not focus well on the pixel plane, and as a result we might be focusing at the perceived location of the object. In that case, we will not encounter the issues with focusing and convergence. We would be too confused to get headaches anymore. I think this is the place where we all want to be, which requires image sensors and VR/AR displays with very high resolutions. Together with many simultaneous image perspectives, the amount of storage and processing power needed will be tremendous. For HD resolutions at 30 frames per second, the processing power required from the GPU would be unimaginable!
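To get a feel for the scale, here is a back-of-the-envelope calculation. The numbers are assumptions for illustration only: “HD” is taken as 1920 by 1080 pixels per perspective, the perspective count is the 10 by 10 example used earlier, and each pixel is stored as 3 bytes of uncompressed RGB.

```python
WIDTH, HEIGHT = 1920, 1080   # assumed "HD" resolution per perspective
PERSPECTIVES = 10 * 10       # the 10 by 10 example from earlier in the article
FPS = 30                     # frames per second
BYTES_PER_PIXEL = 3          # assumed 8-bit RGB, uncompressed

pixels_per_second = WIDTH * HEIGHT * PERSPECTIVES * FPS
bytes_per_second = pixels_per_second * BYTES_PER_PIXEL

print(pixels_per_second)        # 6220800000
print(bytes_per_second / 1e9)   # 18.6624
```

Under these assumptions the system has to move about 6.2 billion pixels, or roughly 18.7 gigabytes of raw data, every second, before any interpolation or rendering work is counted.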

I just came back from this imaginary world and would welcome any comments from the real world. I can be reached at [email protected].