Jon Karafin from Light Field Lab gave a symposium paper (25-2) and a presentation at the Immersive Conference that was very similar to presentations we have reported on recently (TS03 The Future of Cinema is Holographic). He continued to hammer on the theme of identifying content formats that are not true light fields. This list includes:

- Stitched VR/AR Images are Not Light Fields: While any multi-camera array is technically a light field system, the output content is not a light field once it is transformed into any stitched format. The process of stitching multiple viewpoints together inherently removes parallax, and hence the light field is lost.

- Point Clouds are Not Light Fields: A point cloud is a sampling of points from a singular viewpoint in space. The point cloud data, regardless of transparency or the number of samples along any ray path, only contain the color and intensity values as sampled from a single point in space with no consideration of other perspectives. Many point clouds are stitched together from multiple viewpoints, and similar to a stitched AR/VR image, once combined into a singular point cloud, the underlying light field is lost.

- Deep Images are Not Light Fields: A deep image is a visual effects format similar to a point cloud, but with multiple color, intensity and alpha pixel samples retained along the ray axis extending from a singular viewpoint in space. Although the alpha information and occlusion information may be retained, this is considered a volume, and not a holographic or light field dataset as there is no inherent angular independence per effective sample throughout the volume.

- Geometry is Not a Light Field: Geometry is a collection of vectors representing an object with defined contours, and textured with a mapped rasterized or procedural surface. The geometry and texture alone only represent a volume and not a light field, as these textures are producing the same color and intensity values regardless of viewpoint. Geometry may derive a light field dataset by ray-tracing with appropriate surface and illumination properties if and only if the angular independence of the effective surface is otherwise retained or selectively viewed.

He then dispelled holographic display marketing claims, as he has done in numerous presentations:

- Pepper’s Ghost is Not Holographic

- Freezing Light in Mid-air is Not Possible

- Stereoscopic Displays are Not Holographic

- Horizontal Multiview Displays are Not Holographic

- Head-mounted Displays are Not Holographic

- Volumetric Displays are Not Holographic

So what is a light field display? According to Karafin, a true light field video display (LFVD) must provide sufficient density to stimulate the eye into perceiving a real world scene, providing for:

- Binocular disparity without external accessories, head-mounted eyewear, or other peripherals;

- Accurate motion parallax, occlusion and opacity throughout a determined viewing volume simultaneously for any number of viewers;

- Visual focus through synchronous vergence, accommodation and miosis of the eye for all perceived rays of light; and

- Converging energy wave propagation of sufficient density and resolution to exceed the visual acuity of the eye.

By his criteria, no display can be considered a light field display yet, not even those that try to achieve these objectives. Any potential light field display solution must create real converging light points at various distances from the display surface (as can be tested with ground glass), and the LF dataset must comprise multiple angular color and intensity values for each pixel. This allows the color, texture and reflectivity of any pixel to change based upon the viewing angle, which is how objects appear in the real world.
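To make the "multiple angular values per pixel" requirement concrete, here is a minimal Python sketch contrasting a conventional texture with a light field sample set. The names, the dictionary layout and the three sampled angles are all hypothetical and chosen only for illustration; nothing here reflects how Light Field Lab actually stores its data.

```python
from typing import Dict, Tuple

RGB = Tuple[int, int, int]
Pixel = Tuple[int, int]
Angle = Tuple[int, int]  # (theta, phi) in degrees; hypothetical discretization

# Conventional texture: one color per pixel, identical from every direction.
flat_texture: Dict[Pixel, RGB] = {(0, 0): (120, 120, 120)}

# Light field sample set: each pixel stores one color per viewing angle.
light_field: Dict[Pixel, Dict[Angle, RGB]] = {
    (0, 0): {
        (-30, 0): (120, 120, 120),
        (0, 0): (255, 255, 255),  # specular highlight seen head-on only
        (30, 0): (120, 120, 120),
    }
}


def view_flat(pixel: Pixel, angle: Angle) -> RGB:
    """The viewing angle is ignored: the texture looks the same everywhere."""
    return flat_texture[pixel]


def view_light_field(pixel: Pixel, angle: Angle) -> RGB:
    """The returned color depends on the viewing angle."""
    return light_field[pixel][angle]


print(view_flat((0, 0), (0, 0)), view_flat((0, 0), (30, 0)))
print(view_light_field((0, 0), (0, 0)), view_light_field((0, 0), (30, 0)))
```

The flat texture returns the same color for both angles, while the light field lookup returns a highlight head-on and the base color off-axis.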

For a light field video display, he then defined three terms: effective holographic pixel resolution, projected rays per degree, and viewing volume size. For a holographic pixel resolution of 1920×1080, rays per degree of (2, 2) in the x and y directions and a viewing volume of (90, 90) degrees (theta and phi), the display requires 67 Gpixels of data. That’s a lot of data and one of the key reasons these displays are so hard to make. And this is a rather low-fidelity example!
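The 67 Gpixel figure can be reproduced with simple arithmetic, assuming (the article does not state the formula) that the total is the number of holographic pixels multiplied by the number of angular samples per pixel. The function and parameter names below are hypothetical.

```python
def light_field_rays(h_res, v_res, rays_per_degree, viewing_volume_deg):
    """Total rays = holographic pixels x angular samples per pixel.

    Assumes angular samples per pixel are
    (rays/degree in x * theta) * (rays/degree in y * phi).
    """
    rpd_x, rpd_y = rays_per_degree
    theta, phi = viewing_volume_deg
    angular_samples = (rpd_x * theta) * (rpd_y * phi)
    return h_res * v_res * angular_samples


total = light_field_rays(1920, 1080, (2, 2), (90, 90))
print(f"{total:,} rays (~{total / 1e9:.1f} G)")
# prints: 67,184,640,000 rays (~67.2 G)
```

Under this assumption each pixel carries 180 × 180 = 32,400 angular samples, so the count scales with the square of both the ray density and the viewing angle.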

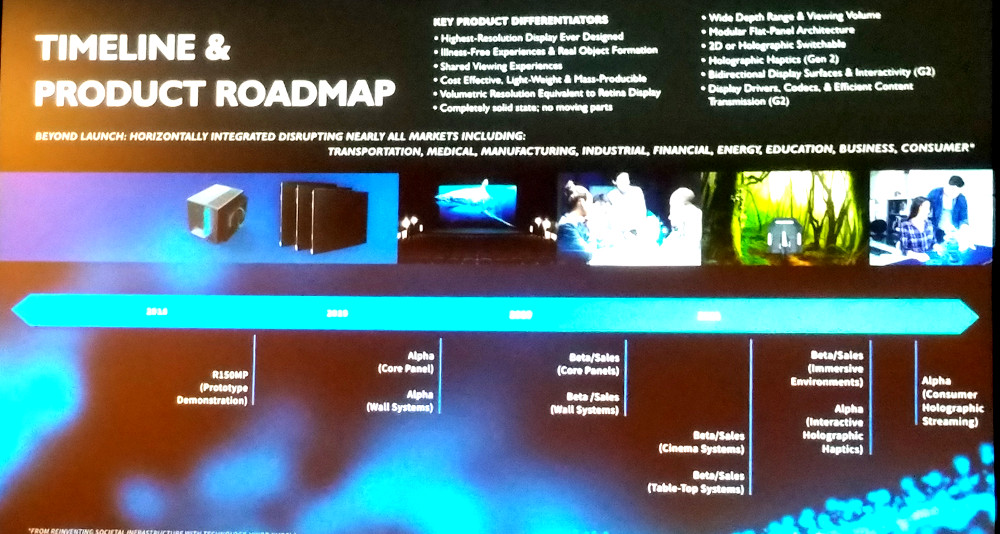

LFL is working on a small 4” x 6” module that will form the basis of their tiled approach. How they will create the light field image and manipulate the data has not been disclosed, other than with high-level hand-waving. Karafin did reveal his development roadmap, however. This shows the R150MP prototype being available by the end of 2018, with the alpha core tile model (2’x2’) available in mid-2019 and commercial sales starting in 2020. - CC