If you haven’t been following light field displays, here’s a quick update – there is a lot of activity in this area, with products likely to be commercialized in 2018.

Investments are flowing and standards organizations are getting serious about finding ways to distribute the vast amounts of data that will be needed for real light field displays. Such displays will offer a glasses-free 3D image with look-around capability that should be superior to what you may have experienced with lenticular or parallax-barrier glasses-free 3D displays.

One big announcement came last week from Light Field Lab, a firm started by former Lytro technologists. The company has just raised $7M in seed capital from Khosla Ventures and Sherpa Capital, with participation from R7 Partners, to continue developing its light field display concept.

The company’s vision is big – to create the Holodeck of Star Trek fame. To get there, they are developing light field display modules that can be assembled like tiles to create entire walls. If you build a cube of such walls, you would be immersed in a visual environment that they hope would be indistinguishable from reality, i.e. the Holodeck.

Initial modules will be 6” x 4” (15cm x 10cm), but production modules in 2019 are expected to be about 2’ x 2’ (60cm x 60cm) with a resolution of 16K x 10K. Specifying or even measuring the resolution of a light field display is no easy feat, so treat this figure as roughly the equivalent 2D resolution. Initially, Light Field Lab intends to target high-end cinemas, theme parks and location-based entertainment, but hopes to move the technology into the home down the road, says CEO Jon Karafin.
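To put those module figures in perspective, here is a back-of-envelope sketch. It is not a company specification: it assumes "16K x 10K" means 16,000 x 10,000 addressable points, and the 60 frames per second and 3 bytes per pixel used for the raw data rate are purely illustrative assumptions. The function name `module_estimates` is hypothetical.

```python
def module_estimates(width_px, height_px, width_mm, height_mm,
                     fps=60, bytes_per_pixel=3):
    """Rough figures for one display module (fps and bytes_per_pixel are assumptions)."""
    pitch_x_mm = width_mm / width_px      # horizontal spacing between points
    pitch_y_mm = height_mm / height_px    # vertical spacing between points
    pixels = width_px * height_px         # total addressable points
    raw_gb_per_s = pixels * bytes_per_pixel * fps / 1e9  # uncompressed rate
    return pitch_x_mm, pitch_y_mm, pixels, raw_gb_per_s


# Assumed reading of the quoted production module: 16,000 x 10,000 on 600mm x 600mm
px, py, n, rate = module_estimates(16_000, 10_000, 600, 600)
print(f"{n / 1e6:.0f} megapixels")
print(f"pitch: {px * 1000:.1f} um x {py * 1000:.1f} um")
print(f"raw rate: {rate:.1f} GB/s")
```

Under these assumptions a single module works out to about 160 megapixels and a raw rate of roughly 28.8 GB/s, which gives a sense of why distribution of light field data is getting so much attention.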

The company also hopes to eventually release volumetric haptics that will allow interaction with these holographic-like images using ultrasound technology.

KDX Develops Alliances

At CES, a Chinese company called KDX formed a new alliance called Simulated Reality, which seeks to integrate 3D visual technology with immersive audio and haptics. The display technology is not based on light fields, but rather on more conventional lenticular lens-based solutions that offer horizontal-only parallax, whereas the Light Field Lab displays will offer full (vertical and horizontal) parallax. Light Field Lab has revealed very little about its technical approach other than to say it is waveguide-based in a flat form factor.

Also developing a waveguide-based, full-parallax approach is a team headed by Michael Bove of MIT Media Lab and Daniel Smalley of Brigham Young University. Smalley’s group has also just announced work on a volumetric display, with inspiration from the Princess Leia image of Star Wars fame.

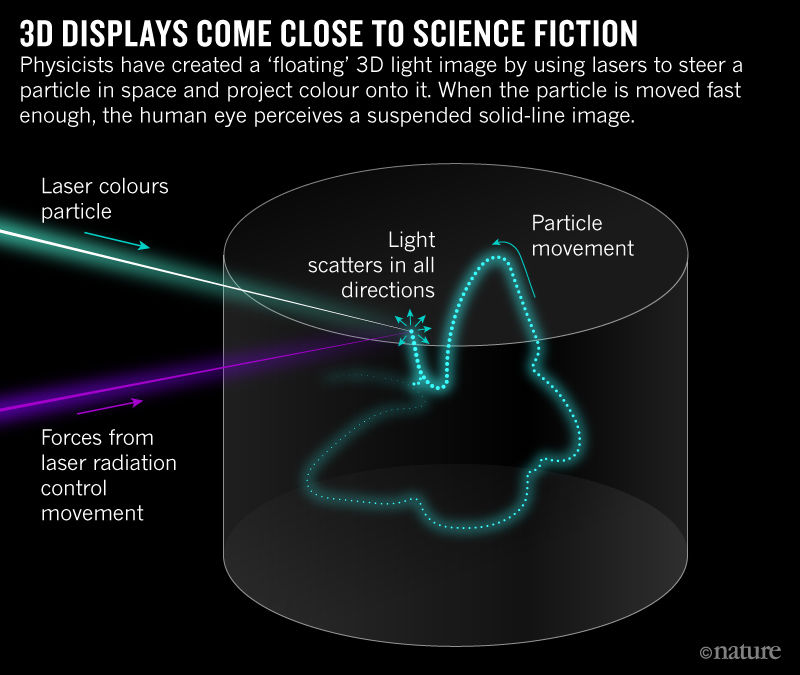

Smalley explained that a light field or holographic image has a light-scattering surface with a defined viewing cone. True volumetric displays emit light spherically, so they can be seen from any direction.

Their display features a volume of air containing cellulose particles. Illuminating a particle with a non-visible laser can “trap” the particle and move it around the volume. A second set of red, green and blue lasers then illuminates the particle, which acts as a scattering surface to create visible light. Doing this rapidly allows the creation of an image. So far, they have created only simple images like a butterfly or prism. A video can be seen at: https://youtu.be/1aAx2uWcENc

Red was also in the news last week with some more details on its new Hydrogen One phone, which offers a light field display. This will feature the same display that Leia is developing (with investment from Red) and is a 5.7” flat panel with 2560 x 1440 2D resolution. However, there is a diffractive image steering element placed between the backlight and LCD panel that creates what they call a “4V” image. It is not clear, however, whether 4V refers to four views or four depth layers.

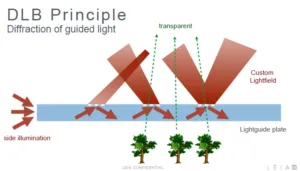

The principle, which Leia calls a diffraction layer backlight, is shown in the diagram below. Note that they have added diffractive elements to the top of the light guide to steer light in different directions, although the diffraction pattern could also create lenses with four different focal lengths. These diffractive elements must be electrically addressable so they can steer (or focus) the light and be turned off in 2D mode (4V mode is a bit dimmer, as the light must be multiplexed).

Red had planned to ship its new phone by April, but that will now slip to the summer.

Standards Being Developed

Finally, I wanted to mention activity underway in the MPEG standardization group. The Hybrid Natural-Synthetic Scene (HNSS) committee is now working on a container to carry light field data across broadband networks. This group is spearheaded by CableLabs, the R&D group of the cable industry. What may surprise you is that the cable industry wants to move away from conventional video raster codecs for light field data. Instead, they see the path forward as based on creating 3D models of the scene and sending geometry, textures, and other layers of data to create a light field image that could potentially be photorealistic. The group is now looking to solicit candidate container models to evaluate and standardize.

If you have an interest in the light field ecosystem, check out some of the conference summaries and presentations from the last Streaming Media for Field of Light Displays (SMFoLD) workshop. They are free to access at: http://www.smfold.org/2017-smfold-workshop/smfold-2017-presentations/ – Chris Chinnock