In case you have been under a rock lately, the most popular streaming TV show of all time, Game of Thrones, created a chorus of complaints about how dim “The Long Night” episode was. This episode was shot at night and was intentionally graded to be dark. However, an unfortunate series of decisions resulted in scenes that were so dark in people’s homes that you literally could not see anything. Needless to say, this sent up a hue and cry around the land.

I can be pretty certain that no TV fared well for the debut of this episode as there were many posts from people watching on their OLED 4K TVs in a dark room and it was still too dim and/or full of so many banding artifacts as to be distracting. So what went wrong?

One source suggested that HBO made a conscious decision to shoot this and other episodes specifically for distribution to TVs. They suggested that content was only saved with 4:2:0 color sub-sampling in the Rec. 709 color space. In other words, it was captured as SDR content. That also means 8 bits per color. No HDR version of this exists, apparently. To me, if true, this is unbelievable. Game of Thrones will have a life after HBO and to not capture a 4K or 8K HDR master seems crazy. I realize it would cost more, but HBO must be kicking themselves now after this PR disaster.
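To get a feel for why 8-bit SDR leaves so little room in dark scenes, here is a rough sketch that counts how many code values land in the darkest 1% of the display's luminance range. It assumes narrow-range video levels (16 to 235 at 8 bits) and a pure 2.4 power-law display gamma; both are my simplifying assumptions, not details confirmed for this episode, and the function name `codes_below` is made up for illustration.

```python
def codes_below(fraction_of_peak, bit_depth=8, gamma=2.4):
    """Count code values whose displayed luminance is at or below
    the given fraction of peak luminance.

    Assumptions (illustrative only): narrow-range video levels and a
    pure power-law display response with the given gamma.
    """
    scale = 2 ** (bit_depth - 8)
    black, white = 16 * scale, 235 * scale  # narrow-range black and white codes
    count = 0
    for code in range(black, white + 1):
        signal = (code - black) / (white - black)  # normalized 0..1 signal
        if signal ** gamma <= fraction_of_peak:    # relative displayed luminance
            count += 1
    return count


if __name__ == "__main__":
    print("8-bit codes in darkest 1%:", codes_below(0.01))
    print("10-bit codes in darkest 1%:", codes_below(0.01, bit_depth=10))
```

Under these assumptions, an 8-bit signal has only a few dozen steps to describe everything in that darkest 1%, while a 10-bit signal has about four times as many. Any step that compression smooths away shows up as a visible band.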

The cinematographer said the images looked fine at mastering, and maybe they did with uncompressed signals. But send it through encoders and variable bandwidth networks to the home and any compression artifacts get magnified in the dark regions. And, even with SDR content, modern TVs do a lot of processing: in brighter scenes, sets boost the peak luminance and raise the black level. I have heard people complaining of crazy oscillations in the black levels due to this.

Some said that a properly calibrated TV in a dark room would have produced a good result, but that did not seem to be the case. The episode was viewable through various providers using different Content Delivery Networks (CDNs). The choices these CDN operators made in terms of bandwidth had a huge impact on the final result. Another source said the HBO Go live streaming content was horrible, but the replay of the episode on a Comcast network the following week was noticeably better. Why? Higher bit rate and perhaps some better encoding decisions.

This whole fiasco has given a big black eye to the streaming industry. HBO made some poor content creation decisions and then didn’t anticipate the consequences of delivering this at sub-optimal bit rates. Some streamers, particularly Netflix, have made a point of developing a robust CDN to help ensure such issues don’t generate complaints. But we need to do more.

A Timely Presentation

I attended the Streaming Media East conference in New York yesterday. I listened to a timely presentation from CDN operator, Akamai, and video quality assessment company, Ssimwave. They recently did a study to ask, “What does good look like?”

The testing for the study was based on software provided by Ssimwave. They have built a visual assessment model to score video quality. This goes beyond the Structural Similarity (SSIM) tests that the company developed 15 years ago and beyond simple PSNR metrics. Ssimplus is based on the human visual perception model and uses some machine learning and AI techniques to help build algorithms and scoring criteria that correspond with what the human eye sees. It can even assign higher weight to regions of interest, like the main characters, so artifacts in this location count more than in a tangential area. That makes sense.

Ssimplus evaluates video and produces a score from 0-100, with anything above 80 considered excellent. In their booth, they showed examples of how this monitoring can work throughout the distribution chain. But here is the smart part: the software can be used to set a quality threshold for a certain channel or program, which instructs the encoder to deliver this quality at various bit rates. IP delivery typically requires multiple encodes of each piece of content to allow the playback device to switch to a lower bit rate segment if network conditions deteriorate.
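The switching behavior on the playback side can be sketched roughly as follows. This is a minimal illustration of throughput-based rendition selection, not how any particular player or Akamai's CDN does it; the ladder values, the `safety` margin and the function name are all hypothetical.

```python
# Hypothetical bitrate ladder in kbps; real ladders vary by service and content.
LADDER_KBPS = [1000, 2000, 3000, 6000]


def pick_rendition(ladder_kbps, throughput_kbps, safety=0.8):
    """Pick the highest bitrate that fits within a safety fraction of the
    measured throughput; fall back to the lowest rung if nothing fits."""
    budget = throughput_kbps * safety
    fitting = [b for b in ladder_kbps if b <= budget]
    return max(fitting) if fitting else min(ladder_kbps)


if __name__ == "__main__":
    # Simulated throughput measurements as network conditions deteriorate.
    for measured in (9000, 4000, 2600, 500):
        print(measured, "kbps measured ->", pick_rendition(LADDER_KBPS, measured), "kbps segment")
```

The point of the safety margin is to avoid picking a segment that only just fits, since a small dip in throughput would then cause buffering.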

There are several factors that impact the encoding parameters. These include the genre of the content, for example. Complex and faster-moving scenes, like sports, require higher bit rates to maintain quality, while less complex and slow-moving scenes, like talking heads, require much less. The frame rate, resolution and dynamic range of the content also affect the encoding bit rate, and adverse network conditions affect the overall quality of experience (QoE).

Maintaining a consistent QoE also requires knowledge of the viewing platform. Higher quality is needed for TVs compared to smartphones. All these factors can be accounted for to offer a consistent QoE across all platforms, all genres and most network conditions.
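One way to picture a quality-targeted approach is to pick, for each device class, the lowest bit rate whose perceptual score meets a common target. The sketch below assumes you already have a table of scores per device and bit rate; the numbers here are invented purely for illustration and are not taken from the Akamai/Ssimwave study, and the function names are hypothetical.

```python
# Hypothetical perceptual scores (0-100) per device at each encode bitrate (kbps).
# These values are made up for illustration, not measured data.
SCORES = {
    "smartphone": {1000: 72, 2000: 80, 3000: 86, 6000: 90},
    "smart_tv": {1000: 50, 2000: 63, 3000: 71, 6000: 82},
}


def min_bitrate_for_target(scores_by_bitrate, target):
    """Return the lowest bitrate whose score meets the target, or None."""
    meeting = [kbps for kbps, score in scores_by_bitrate.items() if score >= target]
    return min(meeting) if meeting else None


def plan(scores, target=80):
    """Map each device class to the cheapest bitrate that reaches the target."""
    return {device: min_bitrate_for_target(table, target) for device, table in scores.items()}


if __name__ == "__main__":
    for device, kbps in plan(SCORES).items():
        print(device, "->", kbps, "kbps")
```

With a table like this, the same target score translates into a much higher bit rate for the TV than for the phone, which is the whole argument for making delivery aware of the viewing platform.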

The tests that Akamai did in cooperation with Ssimwave and Eurofins Digital Testing are described in detail in their white paper. It is a very good read. The goal was to assess the impact on the quality of video across a range of variables to get a qualitative assessment of what viewers actually are seeing on their devices. Some of the results are shown below.

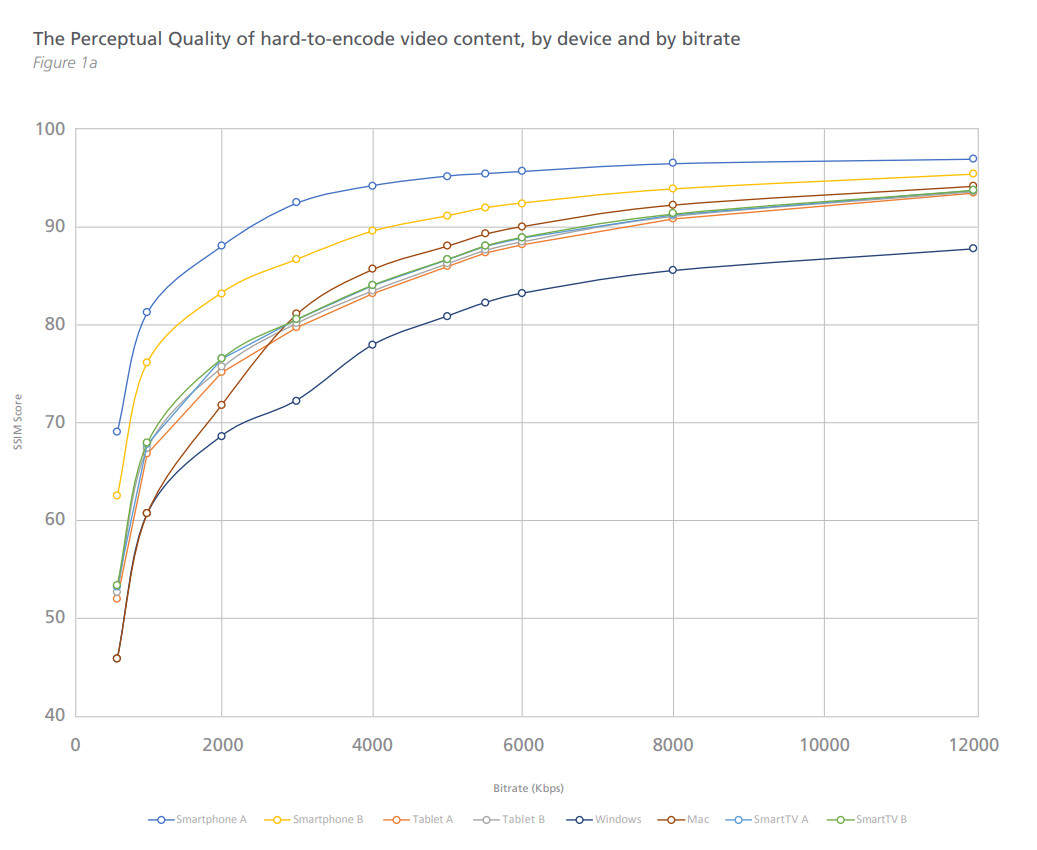

Figure 1a shows that

“the two smartphones tested achieved optimal perceptual quality scores with bitrates of less than 3Mbps but had significant perceptual quality variation between them. In contrast, the Smart TVs on test required encode profiles of beyond 6Mbps to achieve a similar score. Desktop PCs on test required bitrates of around 5Mbps, but again showed significant variation in their perceptual quality.”

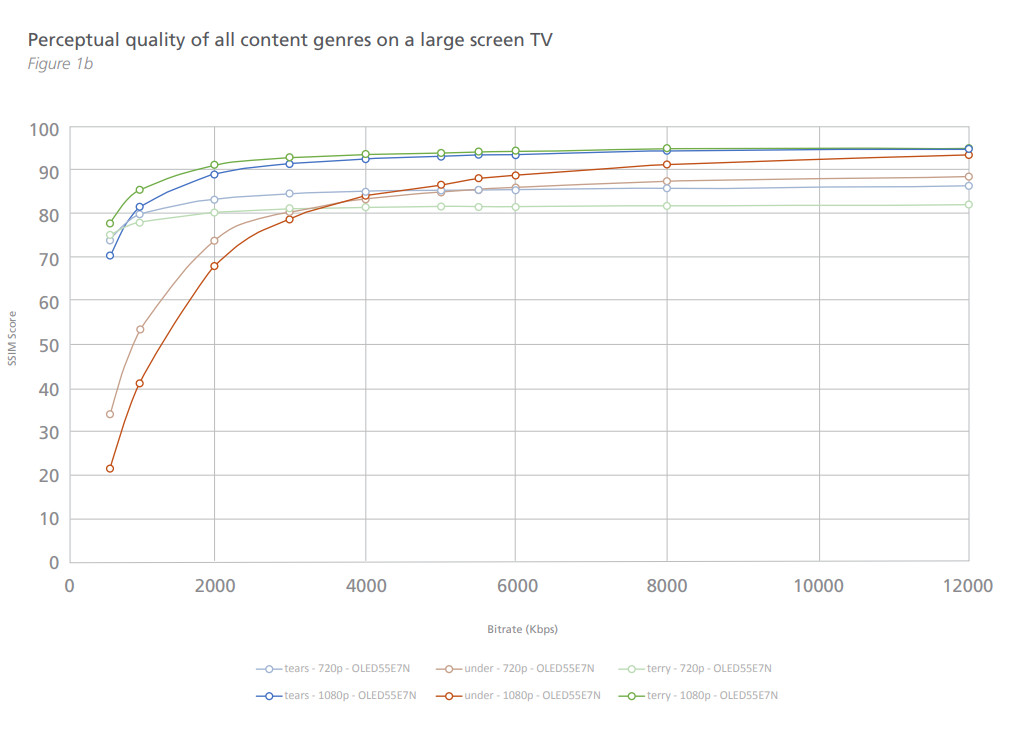

The data in Figure 1b suggests that

“on a large-screen TV the lower complexity content immediately benefited from being encoded in 1080p with excellent HD quality being achieved at 2Mbps. Mid-range quality content at 1080p also derived an excellent quality rating at 3Mbps. Neither genre benefited at the lower rates from encoding at 720p. Higher complexity content required a bit rate of a minimum of 6Mbps but again did not benefit at higher bit rates when encoded at 720p.”

The final piece tested various network conditions, including outages. It found that a Smart TV did not fare very well in its QoE score as conditions varied, but a set-top box did much better. So, there are clearly ways to help mitigate varying network conditions, but not all device makers consider this.

Only limited QoE evaluation metrics exist today, based on buffering and data rates. Can or should IP streaming service providers step up to more sophisticated QoE metrics?

So here is my call to action: Consumers should demand a certain quality of experience level for their content, or at a minimum, be able to choose a tiered QoE option from their provider. Even better would be a QoE user control that allowed me to increase or decrease the QoE on each show I watched.

Just increasing your broadband data rate does not necessarily help if your service provider is making the decisions on the data rates being sent to the network. Service providers will say that customers don’t see these compression artifacts, but Akamai says they do – and they lose interest in the content. Or worse, they can’t see the content. We can take this to a higher level if there is a will, but consumers need to ask for it.

Ok, I’ll step off my soapbox now. (CC)