If you want to start an argument, just ask, “Which is better, Apple’s iOS or Google’s Android?” Asking which is the better HDR system, HDR10 or Dolby Vision, is a similar question. Both have their advantages and disadvantages, and both have their advocates.

The introduction of the Xbox One S on August 5th added one more consumer electronics company, Microsoft, to the HDR10 camp. I’ve written about both HDR10 and Dolby Vision before, but this announcement made me look deeper into the HDR10 vs Dolby Vision competition.

What are the differences and similarities between these two HDR systems? I’ve put the key parameters in the following table:

| HDR10 | Dolby Vision | |

| Best website I could find: | http://www.uhdalliance.org/uhd-alliance-press-releasejanuary-4-2016/#more-1227 | http://www.dolby.com/us/en/technologies/home/dolby-vision.html |

| Origins | ITU-R BT.2100 defines a range of HDR systems. Profile HDR10 has been defined within the limits of BT.2100 by the UHD Alliance. | Dolby purchased Brightside Technologies in 2007 and has developed Dolby Vision from the basic HDR technology it got from Brightside. |

| Bit Depth | 10 bits/color | 12 bits/color |

| Color Gamut | Signal formatted for ITU-R BT.2020 and the display must show 90% of P3 (Digital Cinema) colors. | Dolby Proprietary Color Space (Neither Rec. 2020 nor digital cinema P3) |

| High Brightness | ?1000 nits (or ?540 nits for OLED) | 4000 nits (Can master up to 10,000 nits) |

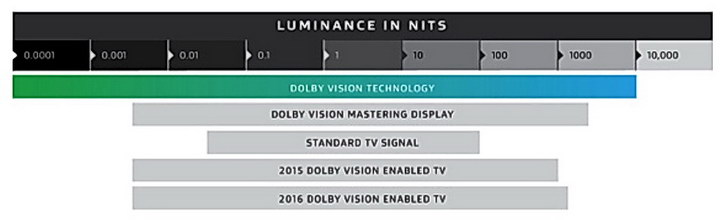

| Min Brightness | ?0.05 nits (or ?0.0005 nits for OLED) | Dolby believes current TV and professional displays are limited to about 0.004 nits – see figure. The technology can master down to 0.0001 nits |

| Minimum Display Contrast | 20,000:1 (108,000:1 for OLED) | 1,000,000:1 to show full range of Dolby Vision content from 0.004 nits to 4,000 nits. |

| Electro-Optical Transfer Function (EOTF AKA Gamma) |

Perceptual Quantizer (PQ) 10-bit EOTF The PQ EOTF was originally developed by Dolby and has been adapted by HDR10 because it is a better match for human vision than gamma or other encoding. |

PQ 12 bit EOTF SMPTE has adopted the Dolby-developed PQ EOTF and designated it ST-2084. BT.2100 also uses the PQ EOTF. |

| Decoding | Software decoding. The firmware can be updated in the TV as needed | ASIC licensed from Dolby |

| Color subsampling | 4:2:0 | 4:2:0 |

| Metadata | Static for entire film | Can vary scene by scene |

|

Adopted by (Lists not necessarily complete) |

UHD Alliance, Amazon, Netflix, Blu-ray Disc Association, Consumer Technology Association, Hisense, LG, Samsung, Sharp, Sony, Vizio, Microsoft, others | Dolby, Amazon, Netflix, VUDU, LG, Vizio, TCL, Blu-ray Disc Association (Dolby Vision is an option, not required, for UHD Blu-ray discs) |

| Studio Support for TV | 20th Century Fox, Lionsgate, Paramount, Sony and Warner Brothers | Sony, Warner Brothers, MGM and Universal |

| Compiled by M. Brennesholtz from reports | ||
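Each minimum contrast figure in the comparison table is simply peak luminance divided by black level. The short Python sketch below checks the three cases; the function and dictionary names are illustrative only, and the calculation is a plain full-on/full-off ratio that ignores ambient light.

```python
# Minimum contrast implied by peak-white and black-level figures.
# Contrast ratio = peak luminance / black luminance (both in nits, i.e. cd/m^2).

def contrast_ratio(peak_nits: float, black_nits: float) -> float:
    """Return the full-on/full-off contrast ratio for a display."""
    if black_nits <= 0:
        raise ValueError("black level must be positive")
    return peak_nits / black_nits

# (peak, black) pairs taken from the HDR10 vs Dolby Vision comparison
levels = {
    "HDR10 (LCD)": (1000.0, 0.05),
    "HDR10 (OLED)": (540.0, 0.0005),
    "Dolby Vision (full range)": (4000.0, 0.004),
}

for name, (peak, black) in levels.items():
    print(f"{name}: {contrast_ratio(peak, black):,.0f}:1")
```

Note that the OLED case works out to 540 / 0.0005 = 1,080,000:1, slightly more than the 1,000,000:1 needed for the full Dolby Vision range.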

The UHD Alliance requires HDR compatibility at the HDR10 level before it will allow its “Ultra HD Premium” logo to be used on TV sets. The Consumer Technology Association (CTA) has included the ability to decode HDR10 inputs as the minimum required to call a TV “HDR-compatible” in the US. The TV doesn’t need to display HDR10 content with its full dynamic range, as long as its hardware inputs and video decoder can accept the HDR10 signal and display it in Standard Dynamic Range (SDR).

While the UHD Alliance doesn’t specifically say that the 1,000-nit HDR10 specification is for LCD and the 540-nit one is for OLED, it is widely recognized that OLED currently cannot meet the more reasonable 1,000-nit spec for HDR. In order not to freeze OLED, whose strongest point is contrast, out of HDR, the Alliance lowered the peak brightness spec to accommodate what the technology can do now. Non-HDR LCD TVs can easily be designed to meet the 540-nit OLED peak brightness spec. For LCD, meeting the 1,000-nit requirement is not so much a technology issue as a regulatory one, related to the maximum power a TV is allowed to consume in various jurisdictions such as California. Since the primary visual advantage of HDR is showing high brightnesses that are crushed in normal TV, this reduced brightness spec for OLED does HDR no service.

Incidentally, I consider the 0.0005-nit dark level in the OLED version of the HDR specification one of the most ridiculous things ever put into print by a technical body such as the UHD Alliance. A dark level of 0.0005 nits can only be achieved in a pitch-dark room with black interior walls and all viewers wearing dark clothing. In addition, the video signal being displayed must be essentially 100% black. Under more normal television viewing conditions, even those found in a dedicated home theater room, and with more normal content, no viewer will be able to tell the difference between 0.0005 nits, 0.004 nits and 0.05 nits – they will all look black.
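To put rough numbers on the viewing-condition argument: a matte (Lambertian) surface with reflectance ρ under an illuminance of E lux reflects about ρE/π nits, and that reflected light adds to whatever black level the panel itself produces. The Python sketch below applies this to the three black levels discussed here. The 1 lux ambient level and 1% screen reflectance are hypothetical values chosen only for illustration, not measurements, and real screens also reflect specularly, which this simple model ignores.

```python
import math

def reflected_luminance(illuminance_lux: float, reflectance: float) -> float:
    """Luminance (nits) reflected by an ideal matte (Lambertian) surface: rho * E / pi."""
    return reflectance * illuminance_lux / math.pi

def effective_black(native_black_nits: float, illuminance_lux: float, reflectance: float) -> float:
    """Native panel black level plus ambient light reflected off the screen."""
    return native_black_nits + reflected_luminance(illuminance_lux, reflectance)

# Hypothetical viewing conditions (assumptions, NOT measured values):
# 1 lux falling on a screen that diffusely reflects 1% of it.
AMBIENT_LUX = 1.0
SCREEN_REFLECTANCE = 0.01

for native in (0.0005, 0.004, 0.05):
    eff = effective_black(native, AMBIENT_LUX, SCREEN_REFLECTANCE)
    print(f"native {native} nits -> effective {eff:.4f} nits")
```

With these assumed numbers the reflected term alone is about 0.003 nits, so the 8:1 native difference between a 0.0005-nit and a 0.004-nit panel shrinks to roughly 2:1, before counting any light the picture itself throws back from walls and viewers.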

Comparing HDR10 with Dolby Vision is actually more difficult than my simple table makes it look. Dolby Vision is a proprietary standard, and few technical details are publicly available from Dolby. There is no “HDR10 Consortium” supporting the HDR10 standard. It is primarily a result of work at the UHD Alliance and is included in the Alliance’s specifications, which are also not readily available to non-members such as me.

LG has posted on its website (http://www.lg.com/us/experience-tvs/hdr/what-is-hdr) an explanation of HDR in relation to LG TVs and included the above image. The “Generic HDR” in this image clearly refers to HDR10. The image is supposed to simulate what a consumer would see if an HDR LG TV was fed a native Dolby Vision signal vs a competitor’s TV showing an HDR10 signal derived from the same Dolby Vision master. Of course, Display Daily readers are seeing a fourth- or fifth-generation copy of this image on a non-HDR display, and the image was simulated in the first place. The simulation is intended to “show” that an LG TV with Dolby Vision would display exactly what the filmmaker intended, while a non-LG TV with a Generic HDR algorithm like HDR10 would show a pale, washed-out version of the image. Since one of the ways LG shows a “pale, washed-out” image is by desaturating the colors of the sunset, and since HDR10 has colorimetry at least as good as Dolby Vision, this simplistic simulation isn’t really fair or useful.

Even LG doesn’t really believe HDR10 is that bad, since it offers HDR10-only HDR TVs, calling them “HDR Pro” TVs. These are in addition to its TVs that accept both Dolby Vision and HDR10 inputs, which LG calls “Super UHD” TVs.

A generation ago, the question was “Which is better, Beta or VHS?” Then came Blu-ray vs HD DVD. I suppose two generations ago the question was VHF vs UHF TV, and before that it was AM vs FM radio. There was also NTSC vs the CBS color-sequential standard, but the clear superiority of NTSC caused the color-sequential standard to vanish almost as soon as it appeared. In my younger days, I remember four different competing versions of AM stereo radio. History says format wars are difficult to resolve and, in many cases, the standards coexist essentially forever.

In addition to Beta and VHS, there were a couple of minor videotape formats as well, such as V2000. Minor players in the HDR race include the BBC and Philips, each with its own format.

This forever coexistence may be what happens to Dolby Vision and HDR10. Dolby Vision is a high-end, professional system, suitable for mastering and displaying cinema content with the highest quality. Once you have a movie mastered in Dolby Vision, it is easy enough to release it directly as Dolby Vision to consumers or to convert the content to HDR10 for consumer distribution. After all, the two formats use the same EOTF, greatly simplifying this format conversion process. If desired, it would even be possible to convert HDR10 content to Dolby Vision, if you have HDR10 content you want to make available to Dolby Vision-only TVs. Personally, I think Dolby Vision-only TVs are unlikely to exist in the long run, since it costs little to add HDR10 capabilities to a Dolby Vision-only TV. In fact, Vizio has already done this, providing a software upgrade that allows HDR10 inputs on TVs that were originally Dolby Vision-only. Since Dolby Vision requires paying Dolby a license fee, there are likely to be many HDR10-only TVs.
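Because both formats use the same PQ curve (SMPTE ST 2084), moving a luminance value between a 12-bit and a 10-bit signal is, on the transfer-function side, just a rescaling of code values. The Python sketch below uses the published ST 2084 constants; the function names, the full-range quantization and the sample luminances are illustrative assumptions. It deliberately leaves out color-space conversion and tone mapping, which a real Dolby Vision-to-HDR10 conversion would also need, and it says nothing about how Dolby’s proprietary pipeline actually works.

```python
# SMPTE ST 2084 (PQ) constants
M1 = 2610 / 16384        # 0.1593017578125
M2 = 2523 / 4096 * 128   # 78.84375
C1 = 3424 / 4096         # 0.8359375
C2 = 2413 / 4096 * 32    # 18.8515625
C3 = 2392 / 4096 * 32    # 18.6875
PQ_PEAK_NITS = 10000.0   # PQ is defined up to 10,000 nits

def pq_eotf(signal: float) -> float:
    """Normalized PQ signal (0..1) -> absolute luminance in nits."""
    p = signal ** (1 / M2)
    return PQ_PEAK_NITS * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)

def pq_inverse_eotf(nits: float) -> float:
    """Absolute luminance in nits -> normalized PQ signal (0..1)."""
    y = (nits / PQ_PEAK_NITS) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2

def to_code(signal: float, bits: int) -> int:
    """Full-range quantization (a simplification; video usually uses narrow range)."""
    return round(signal * (2 ** bits - 1))

def from_code(code: int, bits: int) -> float:
    """Integer code value -> normalized signal (0..1), full range."""
    return code / (2 ** bits - 1)

for nits in (0.01, 100.0, 1000.0, 4000.0):
    code12 = to_code(pq_inverse_eotf(nits), 12)
    # Same curve, different bit depth: converting is a rescale of code values,
    # with no need to go back to linear light.
    code10 = to_code(from_code(code12, 12), 10)
    decoded = pq_eotf(from_code(code10, 10))
    print(f"{nits:>8} nits: 12-bit code {code12:4d} -> 10-bit code {code10:4d} -> {decoded:.4f} nits")
```

The printed luminances differ slightly from the inputs only because of 10-bit quantization; the curve itself is untouched by the conversion.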

While Fox has released films in Dolby Cinema (the theatrical equivalent of TV’s Dolby Vision), like The Revenant and The Martian, it hasn’t yet created any home video titles in the Dolby Vision format. “We prefer open standards, and HDR10 utilizes open standards,” Hanno Basse, Chief Technology Officer at 20th Century Fox and President and Chairman of the Board of Directors at the UHD Alliance, said when speaking to David Katzmaier of CNET. “Just like with software, you typically get much broader adoption with an open standard. We’re mastering our content in that format, we like the results and our creatives like it. We frankly don’t see the need to augment HDR10 with a proprietary solution at this point.”

While Mr. Basse has strong ties to the UHD Alliance and HDR10, I quoted him because I tend to agree with him – viewers, including high-end viewers with high-end displays like the Fox creatives, are not likely to see a noticeable improvement going from HDR10 to Dolby Vision. Dolby Vision is likely to survive in the theatrical setting but may not persist as a home theater format.

– Matthew Brennesholtz