The display world is full of specifications that relate to the response time of display devices. However, it is often too easy to get fixated on one part of what is usually a complex chain of interactions, the ‘display pipeline’, which runs, at the extremes, from the content to the photon. Samsung seems to have become embroiled in a controversy over this.

I have tended to summarise the pipeline as ‘Content > Delivery > Display’, but a display system is usually much more complex than this, especially as our devices have increasingly become (I originally wrote ‘resembled’) complex computing environments that mix 3D graphics and video. If you add any element of image capture, as in augmented reality, you add even more steps. But even for display processes alone, you need to allow time for 3D content creation, filtering, processing and compositing of the image. Usually, those processes end up in a frame buffer, an image held in the memory of the device. This image is then transferred to the display itself, typically as a stream of pixel colour/luminance values in an order that follows a scan of the image from left to right and from top to bottom. To get the best image, the buffer and the display need to be synchronised to avoid ‘tearing’ and other issues, which is one reason behind variable refresh rate (VRR) and G-Sync technologies.
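Tearing can be shown with a toy model. The Python sketch below is a minimal illustration under stated assumptions, not how any particular GPU or display controller works: a hypothetical `scan_out` function reads a frame buffer one line at a time, top to bottom (each line would itself be sent left to right), and the new frame is swapped in either part-way through the scan or only during vertical blanking. `render`, `scan_out` and `HEIGHT` are invented names.

```python
HEIGHT = 8  # lines per frame, kept tiny for illustration

def render(frame_id):
    """Hypothetical renderer: every line is tagged with the frame it belongs to."""
    return [frame_id] * HEIGHT

def scan_out(front, back, swap_at_line=None):
    """Scan one refresh top to bottom. If swap_at_line is set, the renderer
    swaps in `back` at that line, mid-scan; if None, the swap is deferred to
    vertical blanking, so this whole refresh shows `front`."""
    shown = []
    for line in range(HEIGHT):
        source = back if swap_at_line is not None and line >= swap_at_line else front
        shown.append(source[line])
    return shown

old, new = render(1), render(2)
print(scan_out(old, new, swap_at_line=3))  # torn: [1, 1, 1, 2, 2, 2, 2, 2]
print(scan_out(old, new))                  # synchronised: [1, 1, 1, 1, 1, 1, 1, 1]
```

The torn refresh shows the top of one frame and the bottom of the next; deferring the swap to blanking keeps each refresh coherent, at the cost of waiting for the display.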

The display then assembles this data into the lines that make up an image and puts the values on the display surface itself. Most displays do not have any memory in the pixel itself (although there are exceptions, for example LCDs from JDI, Sharp, Kyocera and others), so all the data has to be sent every time the frame is refreshed. Sending that data consumes power. If you have a bright, backlit display, that power is small relative to the backlight, but if you have a reflective or transflective LCD, it is substantial. That is why memory-in-pixel technology is popular for very low power portable devices.
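To put rough numbers on ‘all the data has to be sent every time’, here is a back-of-the-envelope calculation in Python. The 1080 x 2340 resolution and 24 bits per pixel are illustrative assumptions for a phone-class panel, and the hypothetical `payload_bits_per_second` helper counts pixel payload only, ignoring blanking, protocol overhead and any compression.

```python
def payload_bits_per_second(width, height, bits_per_pixel, refresh_hz):
    """Pixel payload for a panel with no in-pixel memory: every pixel is
    resent on every refresh. Ignores blanking, protocol overhead and compression."""
    return width * height * bits_per_pixel * refresh_hz

# Hypothetical phone-class panel: 1080 x 2340 pixels, 24 bits per pixel
for hz in (120, 60, 48, 10, 1):
    gbps = payload_bits_per_second(1080, 2340, 24, hz) / 1e9
    print(f"{hz:>3} Hz: {gbps:.2f} Gbit/s")
```

On these assumptions the link carries roughly 7.3 Gbit/s at 120Hz and about 2.9 Gbit/s at 48Hz, so the payload falls in proportion to the refresh rate; how much power that actually saves depends on the interface and panel electronics.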

The same logic applies to emissive displays such as OLEDs: if you can drive the display for less time, you can save energy. However, to avoid visible flicker, you have to put out light often enough that the human visual system does not perceive it. Flicker perception is a complex topic and was much discussed in the days of CRT monitors, when eliminating flicker was a real challenge. It was generally accepted that most people couldn’t see large-area display flicker at around 75Hz, but in digging around for this article, I found a paper suggesting that under some circumstances, even 500Hz may show some flicker! The eye is more sensitive to flicker in peripheral vision, so flicker is likely to be less visible on a small smartphone display than on a big desktop monitor.

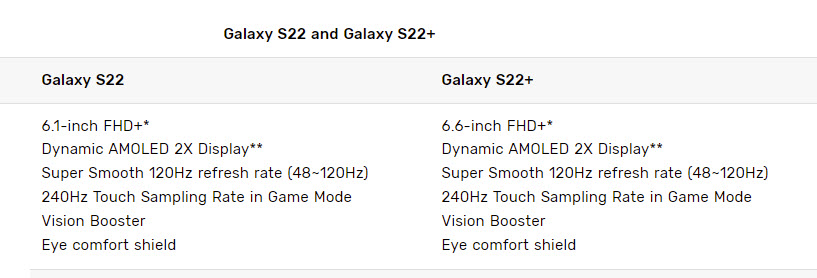

Anyway, back to Samsung. When the firm recently announced the S22 series of smartphones, it said that the refresh rate was variable from 1Hz to 120Hz on the Ultra and from 10Hz to 120Hz on the other two models. However, the firm has since changed the figure for the S22 and S22+ to 48Hz to 120Hz, and clarified that while the video pipeline can be updated at 10Hz to 120Hz, the display itself does not go below 48Hz. If it did, as the display is an emissive OLED, there would be visible flicker, although that would depend on the relationship between the on and off times of the display.
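One way to reconcile a 10Hz pipeline with a 48Hz panel floor is to show each content frame more than once. The sketch below assumes that approach; the `panel_rate_for` policy is hypothetical and is not Samsung’s published algorithm. Only the 48Hz and 120Hz limits come from the figures above.

```python
import math
from typing import Tuple

PANEL_MIN_HZ = 48   # S22/S22+ panel floor, per Samsung's revised figure
PANEL_MAX_HZ = 120

def panel_rate_for(content_hz: int, panel_min: int = PANEL_MIN_HZ,
                   panel_max: int = PANEL_MAX_HZ) -> Tuple[int, int]:
    """Hypothetical policy: return (panel refresh rate, times each content
    frame is shown), keeping the panel at or above panel_min by repeating frames."""
    if content_hz <= 0:
        raise ValueError("content_hz must be positive")
    if content_hz >= panel_min:
        # Fast enough already; clamp to the panel maximum, no repeats needed.
        return min(content_hz, panel_max), 1
    # Smallest integer repeat count that lifts the panel rate to the floor.
    repeats = math.ceil(panel_min / content_hz)
    return content_hz * repeats, repeats

for hz in (1, 10, 24, 60, 120):
    print(hz, panel_rate_for(hz))
```

With these limits, 10Hz content would be shown five times per frame at 50Hz, and 24Hz content twice at 48Hz. The pipeline still only produces new images at the content rate; the extra refreshes exist purely to keep the OLED above its flicker floor.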

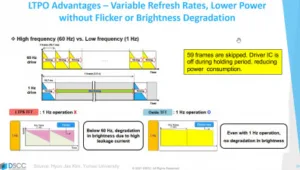

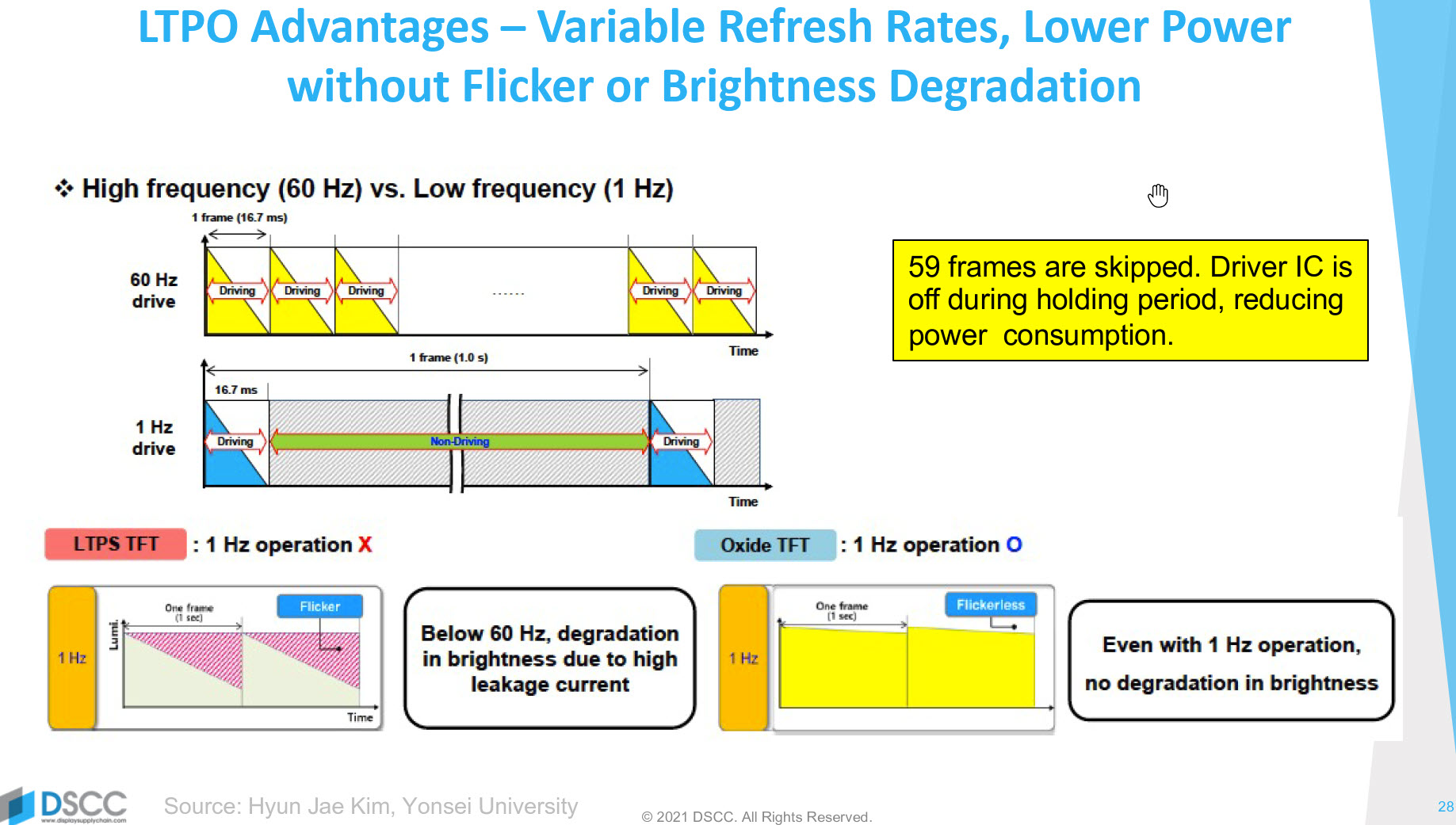

The Galaxy S22 Ultra uses LTPO and, as I covered in this article (LTPO is a Hot Technology – but What Is It??), LTPO can maintain the current to the pixel through the whole of the frame time.

The difference between LTPO and LTPS is clear from the bottom two diagrams

However, the S22 and the S22+ use LTPS and, as I describe in that article, LTPS is prone to showing flicker at low refresh rates. I am grateful to DSCC for spotting that Samsung Display presented a paper at the SID Symposium in 2020 (46-3, Image Adaptive Refresh Rate technology for Ultra Low Power Consumption) explaining that the firm had developed a way of recognising content to calculate when flicker might be visible.

You might think that keeping the pixel illuminated all the time means more power consumption but, of course, perceived brightness is set by the area under the curve of luminance over time, so the LTPS OLED would need a higher peak brightness (and therefore a higher peak current) than the LTPO version to reach the same average level.
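The area argument can be checked numerically. This minimal Python sketch assumes, purely for illustration, that the LTPS pixel emits as a rectangular pulse for half of each frame while the LTPO pixel holds a constant level; the 400 cd/m² target and 50% duty are hypothetical values, not measurements, and the function names are invented.

```python
def average_luminance(samples):
    """Mean of uniformly spaced luminance samples over one frame, i.e. the
    area under the luminance-time curve divided by the frame time."""
    return sum(samples) / len(samples)

def peak_for_average(target_average, duty):
    """Peak luminance a rectangular on/off waveform needs to reach
    target_average when it emits for only `duty` (0 < duty <= 1) of each frame."""
    return target_average / duty

SAMPLES = 1000   # samples per frame
TARGET = 400.0   # hypothetical target average, cd/m^2
DUTY = 0.5       # hypothetical fraction of the frame the LTPS pixel emits

ltpo = [TARGET] * SAMPLES                  # level held for the whole frame
peak = peak_for_average(TARGET, DUTY)
on_samples = int(DUTY * SAMPLES)
ltps = [peak if i < on_samples else 0.0 for i in range(SAMPLES)]

print(average_luminance(ltpo), average_luminance(ltps), peak)  # 400.0 400.0 800.0
```

Both waveforms average 400 cd/m², but the pulsed one needs an 800 cd/m² peak. Real LTPS behaviour (leakage and droop across the frame) is messier than a clean pulse, but the same area-under-the-curve reasoning applies.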

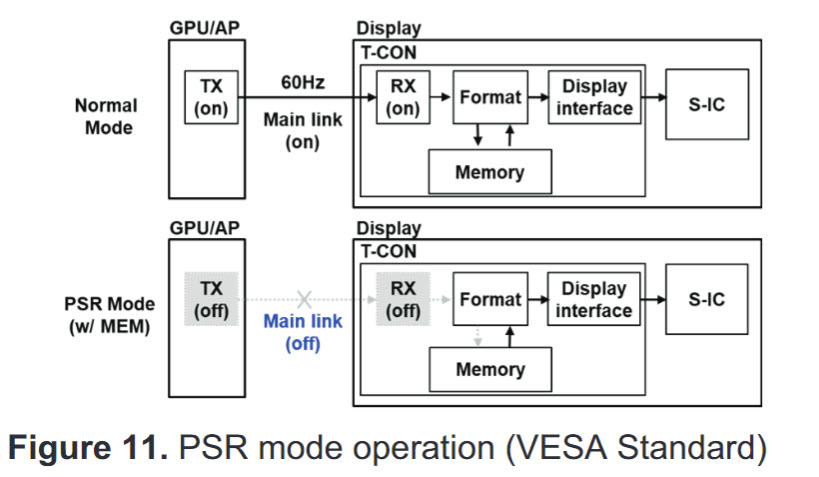

In the DisplayPort PSR Mode, there is a frame buffer in the display device, so only updates are sent
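The ‘only updates are sent’ idea can be sketched in a few lines of Python. This is a hypothetical illustration in the spirit of PSR (and of selective-update schemes that send only changed regions), not the DisplayPort protocol itself: the `SelfRefreshPanel` class and `present` function are invented names, and frames are modelled as lists of byte strings, one per line.

```python
from typing import Dict, List, Optional

Frame = List[bytes]  # one byte string per display line

def changed_lines(previous: Optional[Frame], current: Frame) -> List[int]:
    """Indices of lines that differ from the previous frame (all lines if none)."""
    if previous is None:
        return list(range(len(current)))
    return [i for i, (old, new) in enumerate(zip(previous, current)) if old != new]

class SelfRefreshPanel:
    """Hypothetical panel with its own frame buffer: it refreshes from local
    memory, so the source only needs to send what changed."""

    def __init__(self, height: int):
        self.buffer: Frame = [b""] * height

    def update(self, updates: Dict[int, bytes]) -> None:
        for index, line in updates.items():
            self.buffer[index] = line

def present(panel: SelfRefreshPanel, previous: Optional[Frame], current: Frame) -> int:
    """Send only the changed lines to the panel; return bytes sent over the link."""
    indices = changed_lines(previous, current)
    panel.update({i: current[i] for i in indices})
    return sum(len(current[i]) for i in indices)

frame_a = [b"aaaa", b"bbbb", b"cccc"]
frame_b = [b"aaaa", b"BBBB", b"cccc"]
panel = SelfRefreshPanel(height=3)
sent_first = present(panel, None, frame_a)      # full frame: 12 bytes
sent_static = present(panel, frame_a, frame_a)  # nothing changed: 0 bytes
sent_change = present(panel, frame_a, frame_b)  # one line changed: 4 bytes
print(sent_first, sent_static, sent_change)     # 12 0 4
```

A static screen costs no link traffic at all, because the panel keeps refreshing from its own buffer; a one-line change costs only that line.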


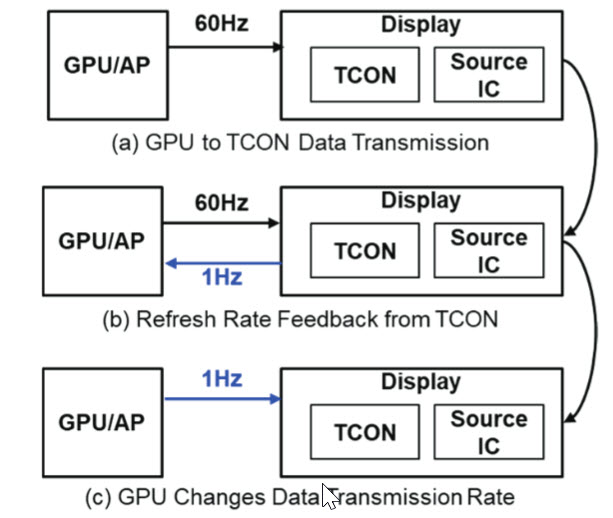

The paper is interesting as it looks not just at this topic, but also at a change to the traditional GPU > Frame buffer > Interface > display architecture.

In the mode proposed by Samsung, the display would signal to the processor the lowest refresh rate needed to eliminate flicker, minimising power consumption.

If the display has a frame buffer, this kind of architecture can be modified using features of MIPI DSI command mode or, with a DisplayPort interface, Panel Self-Refresh (PSR). However, Samsung Display suggests a new architecture in which the display itself analyses the content and signals back to the GPU/AP when it can run at an extremely low update rate. Samsung Display said that it was working with MIPI to standardise this concept. (BR)
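The feedback loop Samsung Display describes can be sketched as follows. Everything here is an assumption for illustration: the mean grey level is a stand-in for whatever content analysis the paper actually uses, the `FLICKER_TABLE` thresholds are invented placeholders that simply treat darker content as more flicker-prone, and the `notify_host` callback stands in for the signalling that Samsung Display said it was standardising with MIPI.

```python
from typing import Callable, Optional, Sequence

# Hypothetical (max mean grey level, lowest flicker-free refresh rate in Hz).
# Placeholder thresholds, NOT values from the Samsung Display paper.
FLICKER_TABLE = [(32, 120), (96, 60), (255, 1)]

def lowest_safe_rate(frame: Sequence[int]) -> int:
    """Return the lowest refresh rate the (hypothetical) table allows for this
    frame, using its mean 8-bit grey level as a stand-in flicker metric."""
    mean = sum(frame) / len(frame)
    for max_grey, rate in FLICKER_TABLE:
        if mean <= max_grey:
            return rate
    return FLICKER_TABLE[0][1]  # out-of-range input: fall back to the safest (highest) rate

class AnalysingPanel:
    """Hypothetical panel that analyses content and tells the host (GPU/AP)
    how slowly it can be refreshed."""

    def __init__(self, notify_host: Callable[[int], None]):
        self.notify_host = notify_host
        self.requested_rate: Optional[int] = None

    def show(self, frame: Sequence[int]) -> None:
        rate = lowest_safe_rate(frame)
        if rate != self.requested_rate:  # only signal when the request changes
            self.requested_rate = rate
            self.notify_host(rate)

requests = []
panel = AnalysingPanel(requests.append)
panel.show([10] * 100)   # dark content: request 120 Hz
panel.show([200] * 100)  # bright content: request 1 Hz
panel.show([210] * 100)  # still 1 Hz, so no new message
print(requests)  # [120, 1]
```

The panel only signals when its requested rate changes, so the host is not flooded with redundant messages while the content stays similar.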