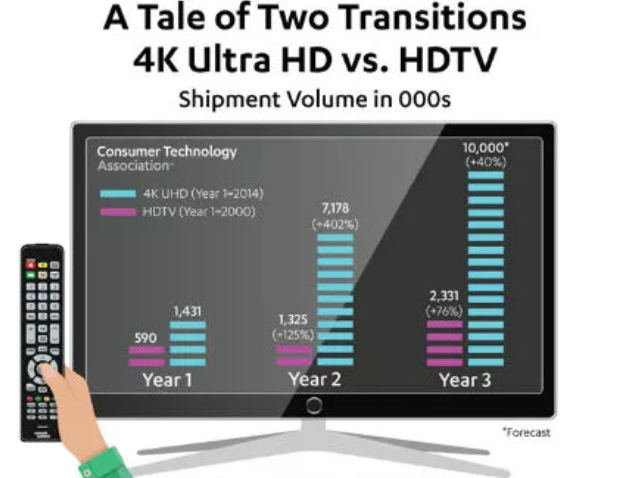

In 1998, HDTV was introduced in the U.S., marking a significant milestone in display technology. Its popularity grew slowly, gated by a shortage of content and the lack of a personal delivery system (Blu-ray did not arrive until 2006). Sharp’s first commercial color TFT-LCD had appeared in the late ’80s and flat-screen plasma TVs in the late ’90s, paving the way for slimmer, high-quality displays. Eventually, networks and cable providers added more content, accelerating HDTV adoption.

When 4K was introduced 15 years later, a digital infrastructure for content delivery was already in place. Most content was being mastered in 4K or even 5K, making it more of a network bandwidth issue than a content problem. The rapid development of streaming platforms like Netflix and YouTube in the early 2010s played a crucial role in making 4K content more accessible.

Putting aside the plumbing and content creation issues, as important as they are, there were debates about the human perception of 4K—could a human really see the difference? Lengthy, mind-numbing proofs were offered (some by yours truly—sorry) that explained the retina’s subtended arc and focus depth, and most importantly, the brain’s ability to fill in the gaps. However, the advent of larger TV screens at more affordable prices made 4K displays irresistible, and the visual difference was undeniable.
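For readers who want the short version of those proofs, here is a minimal sketch of the subtended-arc arithmetic. It assumes the common rule of thumb that 20/20 vision resolves about one arcminute, or roughly 60 pixels per degree; the function name `pixels_per_degree`, the 65-inch screen, and the 8-foot viewing distance are illustrative choices, not figures from the original debates.

```python
import math

def pixels_per_degree(diagonal_in, horizontal_px, vertical_px, distance_in):
    """Approximate pixels per degree of visual angle at the screen center."""
    aspect = horizontal_px / vertical_px
    # Screen width from the diagonal and the aspect ratio
    width_in = diagonal_in * aspect / math.sqrt(1 + aspect ** 2)
    pixel_pitch_in = width_in / horizontal_px
    # Angle subtended by a single pixel at the viewing distance
    pixel_angle_deg = math.degrees(2 * math.atan(pixel_pitch_in / (2 * distance_in)))
    return 1 / pixel_angle_deg

ACUITY_PPD = 60  # assumption: one arcminute per pixel, a rule of thumb for 20/20 vision

for name, (w, h) in {"1080p": (1920, 1080), "4K": (3840, 2160)}.items():
    ppd = pixels_per_degree(65, w, h, 96)  # 65-inch screen viewed from 8 feet
    verdict = "above" if ppd > ACUITY_PPD else "below"
    print(f"{name}: {ppd:.0f} pixels per degree ({verdict} the assumed acuity limit)")
```

By this simple measure, the answer depends heavily on screen size and viewing distance, which is one reason bigger, cheaper screens changed the conversation.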

| Year | Key Event |
|---|---|
| 1981 | IBM introduces the IBM 5150 personal computer with a monochrome display (typically 80×25 characters). |
| 1982 | AutoCAD is released for early microcomputers, and soon after for the IBM PC, with low-resolution displays. |
| 1987 | Early HDTV systems are demonstrated in the U.S. |
| 1988 | Sharp Corporation releases the first commercial color TFT-LCD (Thin Film Transistor Liquid Crystal Display). |
| 1996 | First HDTV broadcast in the United States. |
| 1997 | First flat-screen plasma TVs enter the consumer market. |
| 1998 | HDTV is introduced to U.S. consumers, with slow adoption due to limited content and high costs. |
| 2000s | Adoption of HDTV accelerates as content availability increases and prices decrease. |
| 2004 | Sony introduces the first consumer 4K projector, but the high price limits its adoption. |
| 2007 | Netflix begins offering streaming video, eventually becoming a significant driver of 4K adoption. |
| 2009 | HDMI 1.4 specification is released, supporting 4K resolution. |
| 2012 | Sony releases the first 4K TV for consumers. |
| 2013 | 4K content becomes more widely available, with Netflix streaming 4K content and YouTube supporting 4K. |
| 2014 | Adoption of 4K TVs accelerates as prices decrease and content availability increases; HDR (High Dynamic Range) technology is introduced, enhancing the viewing experience. |
| 2016-2017 | PlayStation 4 Pro (2016) and Xbox One X (2017) are released, supporting 4K gaming. |
| 2018 | 8K TVs are introduced by Samsung and other manufacturers, though content availability remains limited. |
| 2019 | Streaming platforms like Disney+ and Apple TV+ launch, offering more 4K and HDR content. |
| 2020 | Gaming consoles PlayStation 5 and Xbox Series X are released, supporting 4K and 8K resolutions. |

Some argued that we were seeing ‘the emperor’s new clothes,’ and 4K looked good because we wanted it to—kinda like why the stock market rises. Whatever the reason or motivation, 4K does indeed look better. The introduction of HDR (High Dynamic Range) technology in 2014 only served to amplify the viewing experience, with many claiming that HDR offered a greater visual delight than 4K alone.

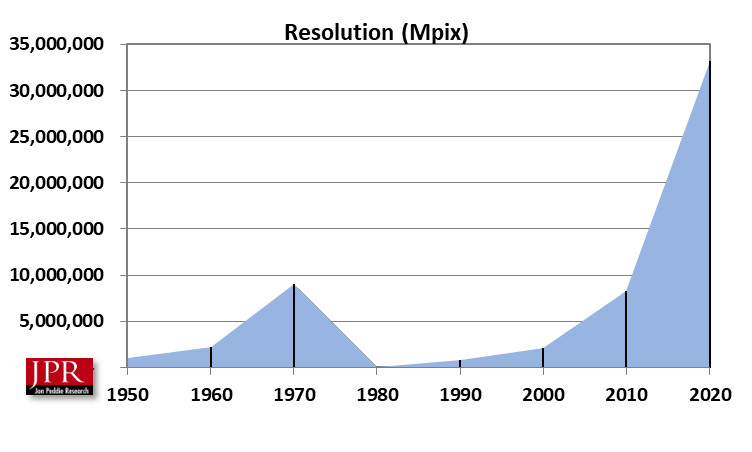

The irony is that this discussion about what we can or can’t see is almost as old as computers themselves. Back in the 1980s, computer and workstation displays transitioned from calligraphic (stroke-writer) vector displays to raster-scan displays. The industry’s early raster displays had a resolution of 512×512, which was considered low resolution compared to the vector displays that engineers used to take physical measurements from. The limitations of raster displays were primarily due to the cost of RAM and its bandwidth.
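To put the RAM and bandwidth constraint in numbers, here is a back-of-the-envelope sketch. The bit depths (8 bits per pixel for an early raster display, 24 for a modern one) and the 60 Hz refresh rate are assumptions chosen for illustration, and the helper names are hypothetical.

```python
def framebuffer_bytes(width, height, bits_per_pixel):
    """Memory needed to hold one frame, in bytes."""
    return width * height * bits_per_pixel // 8

def refresh_bandwidth(width, height, bits_per_pixel, refresh_hz):
    """Bytes per second read out of the frame buffer just to refresh the screen."""
    return framebuffer_bytes(width, height, bits_per_pixel) * refresh_hz

# Assumed configurations, for comparison only
displays = {
    "512x512 raster, 8-bit": (512, 512, 8),
    "8K UHD, 24-bit": (7680, 4320, 24),
}

for name, (w, h, bpp) in displays.items():
    size = framebuffer_bytes(w, h, bpp)
    rate = refresh_bandwidth(w, h, bpp, 60)
    print(f"{name}: {size / 2**20:.2f} MiB per frame, {rate / 1e6:.1f} MB/s at 60 Hz")
```

Under these assumptions, a 512×512 frame needs a quarter of a mebibyte, which was a serious expense when memory was priced by the kilobyte.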

The end users balked and said, “the systems which have been developed and employed in various operating environments were not strictly specified by the end user. The systems were the product of the engineering judgement of the developer. Interaction with the ultimate user or the photo-interpreter was highly limited… if it looked or seemed good to the engineer or programmer, then the function in question was included.”

To counter the complaints and defend the investment being made in raster scan systems, suppliers brought in experts who claimed that “the human visual system will not use more than 500 x 500 displayed points due to resolution limits.” Sound familiar?

Researchers trying to defend their low-res raster displays proposed a solution similar to what Nvidia’s DLSS (Deep Learning Super Sampling) offers today. They suggested using a degraded image as a search field, with high-resolution data stored in a rapidly accessible form and a mechanism for extracting any 512×512 area designated by the user. Render small, ray trace if you must, and then scale up for final examination—50 years ago.
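The overview-plus-detail scheme those researchers described can be sketched in a few lines. This is only an illustration of the idea as summarized above, not their implementation and not how DLSS works; the function names, the synthetic 2048×2048 image, and the simple subsampling used to degrade it are all assumptions.

```python
def make_overview(full, factor):
    """Degrade the image by keeping every `factor`-th pixel in each direction."""
    return [row[::factor] for row in full[::factor]]

def extract_window(full, overview_xy, factor, size=512):
    """Return the size x size full-resolution area around a point picked on the overview."""
    height, width = len(full), len(full[0])
    ox, oy = overview_xy
    # Map the overview coordinate back to full-resolution coordinates
    cx, cy = ox * factor, oy * factor
    # Center the window on that point, clamped so it stays inside the image
    left = min(max(cx - size // 2, 0), max(width - size, 0))
    top = min(max(cy - size // 2, 0), max(height - size, 0))
    return [row[left:left + size] for row in full[top:top + size]]

# Synthetic "high-resolution" image whose pixel values encode position
full = [[(x + y) % 256 for x in range(2048)] for y in range(2048)]

overview = make_overview(full, 4)             # 512x512 degraded search field
window = extract_window(full, (300, 100), 4)  # user picks a point on the overview

print(len(overview), len(overview[0]))  # overview dimensions
print(len(window), len(window[0]))      # detail window dimensions
```

The display never shows more than 512×512 pixels at once, yet the user can reach every full-resolution pixel by choosing where to look.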

As I write this on my 32-inch 8K UHD display, I wonder if technology is really progressing or if we’re mainly finding ways to build what we always wanted and knew we needed. It took 40 years to get back to a real high-resolution display, and with 8K, we now have a display that truly challenges the human visual system, though not the brain, which is a discussion for a future posting.

You’ve heard it before—the more you can see, the more you can do. Those who believe this have big, high-resolution multiple displays and are doing more. Ask them what they can and cannot see.

From the introduction of IBM’s 5150 personal computer with a monochrome display in 1981 to the advent of 8K TVs in the late 2010s, display technology has come a long way. Gaming consoles like PlayStation 5 and Xbox Series X, released in 2020, further pushed the boundaries, supporting 4K and 8K resolutions. Streaming platforms such as Disney+ and Apple TV+ also contributed to the popularity of high-resolution content.

The evolution of display technology is a testament to the persistent drive to improve visual experiences, whether through increased resolution, HDR, or AI-assisted upscaling. As technology continues to progress, we can only imagine what future innovations will bring to the world of displays, further challenging our human vision and perception.