If fully successful, the gesture control technology under development at eyeSight Technologies Ltd. (Herzeliya, Israel) will reduce the need for input peripherals with smartphone-based VR headsets such as the Samsung Gear VR and the Google Cardboard. The eyeSight approach lets the user navigate virtual space with hand gestures.

eyeSight’s technology is based on the use of the rear-facing camera that is built into essentially all iOS and Android smartphones.

eyeSight explained that companies that produce VR headsets and integrate eyeSight will not need to add any new hardware to their devices to implement the technology. This should reduce the cost of owning a VR headset and, as a consequence, could contribute to a more rapid and broader adoption of VR systems.

At this time, the company has offered few details about its proprietary technology. It has, however, been reported that eyeSight identifies the user’s hand and fingers and uses the smartphone’s processing capability to create a graphical representation that follows their movements in real time. eyeSight technology can accurately identify a user’s fingertip up to about 16.4 feet (5 m) away. The optimum distance is, however, between 12 and 19 inches in front of the headset. Unfortunately, due to limits in the field of view provided by most commercially available smartphone cameras, eyeSight is currently capable of detecting only one hand at a time.

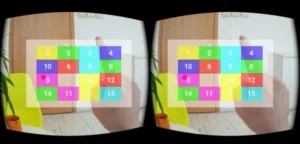

The company has offered demonstrations illustrating the technology in operation. One such demonstration is presented in a video that can be found at the end of this article. As shown in the video, the user sees a small red dot through their headset. The position of the dot in virtual space is controlled by the position of the user’s fingertip as tracked by the camera. When the user wants to select an option, they simply tap the air with their finger in front of the object that the dot designates.
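Since eyeSight has not published how its software works, the following is only an illustrative sketch of the point-and-tap interaction described above, not the company's method. It assumes a hypothetical tracker that reports a fingertip's normalized position in the camera frame plus an estimated distance from the camera in inches. The names `FingertipSample`, `cursor_position` and `detect_tap`, and the thresholds used, are invented for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class FingertipSample:
    """One hypothetical tracker reading (all fields are assumptions)."""
    x: float      # horizontal position in the camera frame, 0.0 (left) to 1.0 (right)
    y: float      # vertical position in the camera frame, 0.0 (top) to 1.0 (bottom)
    depth: float  # estimated distance from the camera, in inches


def cursor_position(sample: FingertipSample, width: int, height: int) -> Tuple[int, int]:
    """Map a normalized fingertip position to a pixel position for the dot."""
    # Clamp so a fingertip at the edge of the frame keeps the dot on screen.
    cx = min(max(sample.x, 0.0), 1.0)
    cy = min(max(sample.y, 0.0), 1.0)
    return int(cx * (width - 1)), int(cy * (height - 1))


def detect_tap(samples: List[FingertipSample],
               push: float = 1.5,
               max_drift: float = 0.05) -> bool:
    """Return True if a short window of samples looks like an air tap.

    A tap is taken to mean: the fingertip moves away from the camera by at
    least `push` inches, comes at least halfway back, and stays within
    `max_drift` of its starting x/y position throughout.
    """
    if len(samples) < 3:
        return False
    start, end = samples[0], samples[-1]
    peak = max(s.depth for s in samples)
    pushed = peak - start.depth >= push
    returned = peak - end.depth >= push / 2
    steady = all(abs(s.x - start.x) <= max_drift and
                 abs(s.y - start.y) <= max_drift for s in samples)
    return pushed and returned and steady
```

In this sketch the dot simply follows the fingertip, and a selection fires only when the finger pushes forward and pulls back without sliding sideways, which keeps an ordinary pointing motion from being mistaken for a tap.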

The company reports that eyeSight gesture control software can be easily integrated into any one of several levels of a device. That is, the code can be embedded in the chipset, the operating system, the camera module or at the application level.

The eyeSight approach can be implemented in various kinds of devices including smartphones and tablets. In addition, the technology can be embedded in so-called “internet of things” devices, a category that spans a wide range of products from smartwatches to connected kitchen appliances. The “internet of things” category has been mentioned as an area of particular business interest for eyeSight. Another area of focus for eyeSight is automotive applications. The company is reportedly developing ways for drivers to control features of their car through touch-free gestures. (The technology could also, presumably, be built into smart desktop monitors? – Man. Ed.)

Although the eyeSight technology is still in an early stage of development, the company has stated an intention to offer an SDK to developers in “the near future.”

In other news related to eyeSight, the company is reported to have received Series C funding of $20M on May 4, 2016. The investment was provided by the Chinese conglomerate Kuang-Chi, which investigates and manufactures new technologies. With these funds in hand and given the current state of product development, it would seem that eyeSight has a good shot at reaching a point where deployment of the technology is a real commercial possibility. -Arthur Berman

eyeSight Technologies, +972-9-9567441