It’s four years since Norbert wrote a Display Daily setting out Misapplied Sciences’ ideas for directing multiple images from a single display to particular viewers (Misapplied Sciences Working on Multiview Displays). Now the technology is being tried by Delta Air Lines at Concourse A of Detroit Metropolitan Airport in the USA.

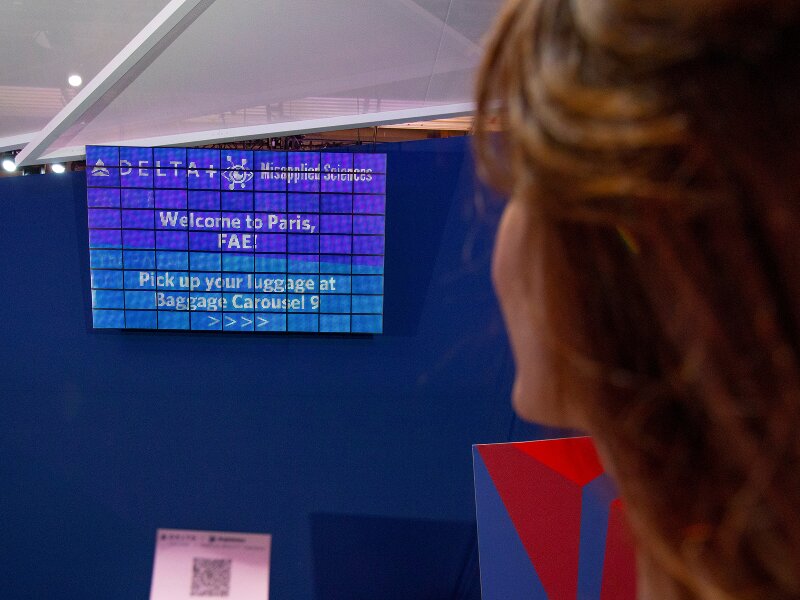

The display technology comes into its own after users scan their boarding pass or, if they are enrolled in the Delta Digital Identity programme, activate it using facial recognition. Those that are enrolled will be automatically recognised and will have personalised information shown to them. For those that are not, the system uses overhead cameras to identify users when they register and then tracks the ‘person object’ without knowing any personal details, Delta told Gizmodo. The information will be in an appropriate language and will advise of gate numbers and other useful travel guidance such as the correct carousel for baggage collection.

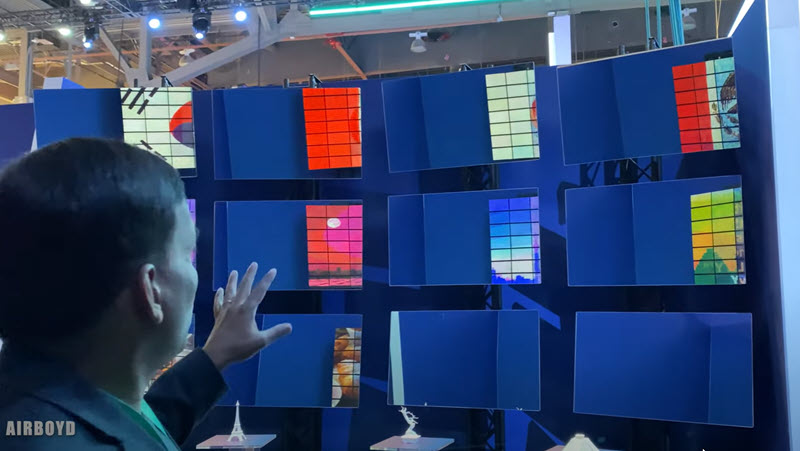

The idea was demonstrated at CES 2020 (see this YouTube video). At that event, the demonstration involved around 12 people or viewing positions, but Delta said at that time that it was ‘applicable to hundreds of people’. The plan was to have the system rolled out in 2020, but obviously Covid meant the airline had other things to think about.

Misapplied showed how it can address 12 viewers at CES in 2020

Misapplied said that the technology:

- Can target advertising to each viewer’s needs, interests, behavior, and surroundings. (Oh, joy!)

- Can individualise media, messaging, and lighting effects in public entertainment venues for each viewer.

- Can provide wayfinding.

- Can help with traffic control, with messaging and signals on roadways that are specific to each zone, lane, and vehicle.

- Can adjust the content for each viewer’s distance, viewing angle, and sight-lines.

- Can help with people flow management, especially in the case of an evacuation event.

Misapplied has also suggested that the technology could be very helpful in sports as well, or in retail – anywhere there are a lot of people. In a stadium, for example, the system could direct a spectator to their seat.

The company claims that its pixels can control the colour of the light in ‘up to a million angles’. The firm has its own processor to drive the pixels, but up to hundreds of views can be created on a PC before being sent to that processor.
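To make the ‘million angles’ figure concrete, here is a minimal Python sketch of one way a multi-view pixel could be addressed. It is purely illustrative and not Misapplied’s method: the 1,000 × 1,000 grid of direction bins, the ±60° field of view, and the names `direction_bin` and `colours_by_direction` are all my assumptions. The idea is simply that each viewer’s position, as seen from a pixel, falls into one angular bin, and the pixel emits that viewer’s colour into that bin only.

```python
import math

# Hypothetical model: one pixel steers light into a grid of direction bins.
# 1000 x 1000 bins gives a million directions (an assumed layout).


def direction_bin(pixel_xyz, viewer_xyz, bins_per_axis=1000, half_fov_deg=60.0):
    """Quantise the direction from a pixel to a viewer into a (column, row) bin.

    Coordinates are in metres; z is the distance in front of the display.
    Returns None if the viewer is behind the display or outside the field of view.
    """
    dx = viewer_xyz[0] - pixel_xyz[0]
    dy = viewer_xyz[1] - pixel_xyz[1]
    dz = viewer_xyz[2] - pixel_xyz[2]
    if dz <= 0:
        return None  # viewer is not in front of the display

    azimuth = math.degrees(math.atan2(dx, dz))
    elevation = math.degrees(math.atan2(dy, math.hypot(dx, dz)))
    if abs(azimuth) > half_fov_deg or abs(elevation) > half_fov_deg:
        return None  # outside the assumed steerable range

    def to_index(angle_deg):
        fraction = (angle_deg + half_fov_deg) / (2 * half_fov_deg)
        return min(int(fraction * bins_per_axis), bins_per_axis - 1)

    return (to_index(azimuth), to_index(elevation))


def colours_by_direction(pixel_xyz, viewers):
    """Map each occupied direction bin to the colour meant for that viewer."""
    assignment = {}
    for viewer in viewers:
        bin_index = direction_bin(pixel_xyz, viewer["position"])
        if bin_index is not None:
            assignment[bin_index] = viewer["colour"]
    return assignment
```

In this toy model, a viewer standing 2 m straight in front of the pixel falls in the centre bin of the grid, and two viewers a metre either side of centre land in different bins, so each could be sent a different colour from the same pixel.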

There don’t seem to be too many pixels

The company provides few details of the actual display technologies used. It looks as though the number of pixels in each view is quite limited in the Delta configuration and that the pixels are based on discrete LEDs, although this is just my speculation.

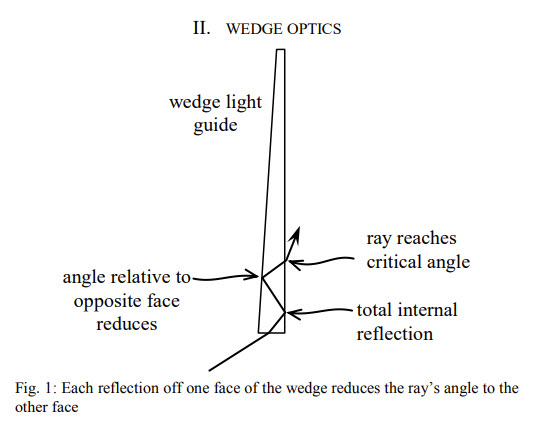

The technology reminded me of something I had seen before. I had a vague memory that Microsoft had, at one time, been working on steerable optics. I found a 2010 video on YouTube from Microsoft Applied Sciences (the MSAppliedSciences channel is just one letter away from Misapplied Sciences, of course! Oh, and Ng was an intern at Microsoft Research before starting Misapplied). It showed a very crude version of the idea, with just two people, using the ‘Wedge’ display technology that I’ve been reporting on since I saw it demonstrated by Dr Adrian Travis at the EID show in the UK in 2000. The aim was to offer 3D using multiple views. Travis moved from his start-up to work for some time at Microsoft. (In a ‘small display world’ coincidence, he was showing his latest project in the i-Zone at Display Week just a few weeks ago.)

(You can find a paper on Travis’s work here that gives some clues, I think, as to how Misapplied might be directing the light).

The idea of steerable optics is, I think, a very important one. At the moment, all of our displays pump out light in all directions; in fact, we grade them on how well they can spread the light in a Lambertian way. But the brute reality is that every photon that doesn’t land on a retina is wasted. To quote one of my favourite phrases: “a display without a person has no function”. Our displays bathe the world in light, which is not only extremely wasteful but, in many situations, also harms the picture, because the wasted light is reflected back onto the display and reduces the image quality (as Pete Putman so clearly showed and as has been researched by Barco and others).
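A back-of-envelope estimate shows the scale of the waste. The sketch below assumes an ideal Lambertian emitter and a small pupil facing it; the function name and the 4 mm pupil and 2 m viewing distance are illustrative choices of mine, not measurements from any display.

```python
import math


def lambertian_fraction_into_pupil(pupil_diameter_m, distance_m, off_axis_deg=0.0):
    """Fraction of a Lambertian emitter's total light that enters one pupil.

    A Lambertian source of total flux F has intensity (F / pi) * cos(theta).
    A small pupil facing the source subtends a solid angle of roughly
    pupil_area / distance**2, so the captured fraction is
    cos(theta) * (pupil_radius / distance) ** 2.
    """
    radius = pupil_diameter_m / 2
    solid_angle = math.pi * radius**2 / distance_m**2  # small-angle approximation
    return math.cos(math.radians(off_axis_deg)) * solid_angle / math.pi


# Assumed values: 4 mm pupil, viewer 2 m away, directly in front of the pixel.
fraction = lambertian_fraction_into_pupil(0.004, 2.0)
print(f"Fraction of emitted light entering one pupil: {fraction:.1e}")
```

For those values the result is about one part in a million per pupil, which is the sense in which almost all of the light from a conventional display never reaches a retina.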

That got me thinking about a cinema fitted with this technology. I sometimes find myself in a cinema that only has a few viewers. Supposing you could just show the display to them? How about having different age-suitable visuals for different viewers?*

At the moment, the firm has pretty big pixels suitable for large video walls. However, imagine the use of microLEDs with metasurface optics, exploiting the technologies that have allowed the development of the advanced semiconductor industry. Could we get to small pixels and allow just the viewer to see their screen without the huge amounts of wasted power? I suspect such a vision is a long way away, but it’s intriguing. And it might just be nearer than I think! (BR)

* Followers of British politics will immediately see a practical use for this technology that could be popular with some MPs.