A lot of the coverage of High Dynamic Range (HDR) for both the cinema and home TV has focused on one of two things:

- Standards for HDR content. Much of the focus here has been on Dolby Vision, HDR-10 and HLG encoding

- HDR-compliant displays and systems, including many that are HDR signal compliant but unable to actually show a high dynamic range of light levels.

There is more to the HDR experience than standards and displays. The artistic and technical decisions made by content creators are the key to what is ultimately transmitted in a standards-compatible HDR signal and seen on an HDR display.

Professor Harry Mathias from San Jose State University


I attended a SMPTE educational webinar titled “HDR Examined from a Cinematographer’s Point Of View – as a Dramatic Imaging Tool,” presented by Harry Mathias, Professor of Film & Digital Cinema at San Jose State University. He is the author of three books, Electronic Cinematography: Achieving Photographic Control over the Video Image (1985), Cinematografia Electronica (2005, in Spanish) and The Death & Rebirth of Cinema (2015), and a contributor to several other volumes. He has also been Director of Photography for numerous feature films, television movies and music videos shot both on film and with electronic capture.

Mathias emphasized that HDR doesn’t sell movie tickets – the story told in the movie sells the tickets. Many stories do not need HDR to be told. Movies like Casablanca were shot on black-and-white film and are classics without HDR. While technical gimmicks like 3D and HDR can propel a single movie’s success, Mathias said the industry as a whole cannot survive on technical gimmicks alone – it must use new technology to tell stories the public is willing to pay to see. The value of HDR and other improvements in moviemaking technology is that they enhance the ability of content creators to tell stories, but not all stories benefit from HDR.

In Casablanca from Director Michael Curtiz, Cinematographer Arthur Edeson didn’t need HDR or even color to tell the story and earn one of his three Oscar nominations for cinematography.


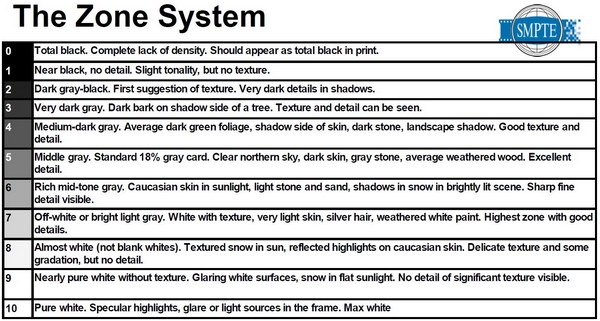

Mathias said, “Not every subject is appropriate for high dynamic range or high brightness reproduction. Some subjects must be low contrast and have low brightness to be effective.” He cited Ansel Adams, the famous landscape photographer, as another artist who used low-contrast images for dramatic effect, and he discussed Adams’s exposure zone system for exposing photographs or movies. This technique is outlined in the table.

The exposure zone system originally developed by Ansel Adams for black-and-white still photography.


Note that these zones are not spatial zones but exposure-level zones. Mathias said the cinematographer needs to consider zones regardless of the total dynamic range of the system. Note also that there are no exposure levels associated with the zones in this table. If you are like Edeson or Adams and are telling a story with a system with 10 stops of dynamic range, there will be approximately one stop per table entry. With an HDR system, which can have 20 stops or more, you still have 11 different exposure zones (0 through 10) but not necessarily 2 stops per zone. The middle zones, 4 – 7, tell most of the story and may still be allocated only 1 or 1½ stops each. According to Mathias, different content types tend to use different zones. For example, spy movies use zone 3 quite a bit, but romantic comedies use zones 6, 7 and 8 extensively. It is largely the high (9 – 10) and low (0 – 2) brightness zones that absorb the extra dynamic range in an HDR system.
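The zone arithmetic above can be made concrete. Each stop doubles the light level, so a 10-stop system spans about 1,024:1 and a 20-stop system roughly a million to one. The sketch below splits a system's total stops across zones 0 through 10 under a hypothetical rule loosely following Mathias's description: zones 4 – 7 get a fixed allocation (1 or 1½ stops), zones 3 and 8 get one stop each, and whatever is left is shared equally by the extreme zones 0 – 2 and 9 – 10. The function names and the allocation rule are illustrative assumptions, not a method prescribed by Mathias or Adams.

```python
"""Hypothetical allocation of dynamic range (in stops) across Adams's zones 0-10."""

N_ZONES = 11                       # zones 0 through 10
MIDDLE_ZONES = range(4, 8)         # zones 4-7 "tell most of the story"
SHOULDER_ZONES = (3, 8)            # assumption: these keep one stop each
EXTREME_ZONES = (0, 1, 2, 9, 10)   # assumption: these absorb any extra range


def contrast_ratio(stops: float) -> float:
    """Each stop doubles the light level, so N stops span a 2**N : 1 ratio."""
    return 2.0 ** stops


def allocate_stops(total_stops: float, middle_stops: float = 1.0) -> list:
    """Split total_stops across the 11 zones using the assumed rule above."""
    alloc = [0.0] * N_ZONES
    for z in MIDDLE_ZONES:
        alloc[z] = middle_stops
    for z in SHOULDER_ZONES:
        alloc[z] = 1.0
    remainder = total_stops - sum(alloc)
    if remainder < 0:
        raise ValueError("dynamic range too small for the requested middle zones")
    for z in EXTREME_ZONES:
        alloc[z] = remainder / len(EXTREME_ZONES)
    return alloc


if __name__ == "__main__":
    for label, stops, middle in (("SDR", 10, 1.0), ("HDR", 20, 1.5)):
        alloc = allocate_stops(stops, middle)
        print(f"{label}: {stops} stops = {contrast_ratio(stops):,.0f}:1")
        print("  stops per zone:", [round(s, 2) for s in alloc])
```

Under these assumptions the 10-stop case gives 0.8 to 1 stop per zone, close to the one-stop-per-entry rule of thumb, while the 20-stop case keeps the middle zones at 1½ stops and pushes about 2.4 stops into each extreme zone.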

Mathias said that traditional standard dynamic range (SDR) moviemaking involved lighting subjects to bring them into the desired exposure zone. Lighting, however, can be expensive and time-consuming. With available light or simple added light, an HDR camera can capture all portions of the image, but it does not necessarily give the different parts of the image the artistic emphasis the content creator intends. While some (or all) of this artistic emphasis can be added in digital post-production, a traditional cinematographer like Mathias thinks what is recorded by the film or digital camera should contain most of the artistic input.

Cinematographers use incident light meters, spot meters and waveform monitors to control exposure in both SDR and HDR movies.


Cinematographers have always used technology to help them get subjects into the desired exposure zone. Three examples Mathias cited were incident light meters, spot meters and waveform monitors looking at the output of the electronic camera.

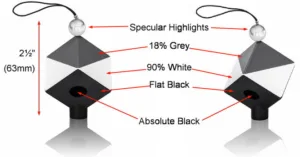

Datacolor’s SpyderCube reference target for HDR

Coincidentally, I attended a Tektronix Video Insights Seminar where Karl Kuhn discussed, among other things, the use of Tek waveform monitors for HDR exposure control. To use a camera and waveform monitor for exposure control, it is necessary to have a standard reflectance target to point the camera at. Kuhn favored the SpyderCube from Datacolor for HDR applications. This can be used either alone or with more conventional color and grayscale test pattern targets. However, there are two things that the SpyderCube brings to the HDR cinematographer that are not available from conventional targets: a specular reference and an absolute black reference.

Specular reflection has been an essential part of every HDR cinema test clip I’ve ever seen. The SpyderCube provides a reference for this in the form of the small chrome sphere at the top of the cube. Very dark blacks are another part of HDR, and conventional “black” reference cards are not truly black – they always reflect some light. The SpyderCube provides an absolute black reference in the form of a light trap: a black hole where light enters but never leaves.

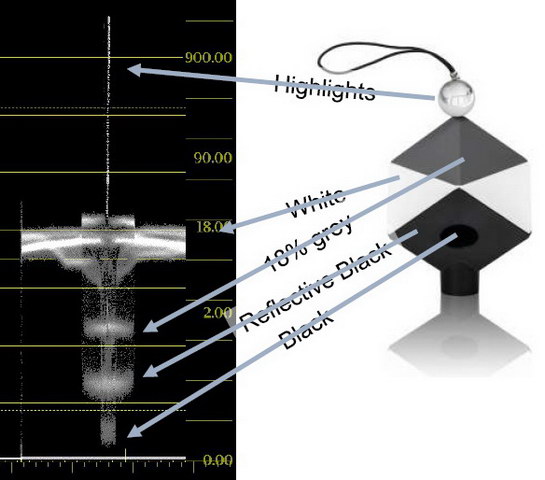

SpyderCube as seen by a RAW S Log 2 HDR camera on a Tek waveform monitor (Credit: Tektronix)


This image shows which areas and gray levels on the target correspond to which features on the waveform monitor for an HDR signal. As can be seen, the range from reflective black through reflective white represents only a portion of the allowed levels in this HDR system, while the whiter-than-white and blacker-than-black portions occupy a large part of the signal range. Yet most of the story is told in the light-level region between reflective black and reflective white, which correspond roughly to exposure zones 1 and 9 respectively. The added exposure range in an HDR system is used mostly in zones 0 and 10, as shown in the next image, plus possibly a stretching of zones 1 – 9.
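The squeezing of zones 1 – 9 into a smaller part of the signal can be checked numerically. The sketch below encodes luminance with the PQ curve defined in SMPTE ST 2084, using the constants published in that standard, and compares it with a simplified SDR model: the inverse of a pure 2.4 power-law display (BT.1886 with a zero black level) with a 100-nit peak. The reflective black and reflective white luminances (0.5 and 100 nits) are assumed round numbers chosen for illustration, not values read from the Tektronix waveforms.

```python
"""Compare how much signal range a luminance band uses in PQ vs. a simple SDR model."""

# SMPTE ST 2084 (PQ) constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PQ_PEAK_NITS = 10000.0


def pq_encode(nits: float) -> float:
    """PQ inverse EOTF: absolute luminance in cd/m^2 -> normalized signal 0..1."""
    y = min(max(nits, 0.0), PQ_PEAK_NITS) / PQ_PEAK_NITS
    ym = y ** M1
    return ((C1 + C2 * ym) / (1.0 + C3 * ym)) ** M2


def sdr_encode(nits: float, peak: float = 100.0, gamma: float = 2.4) -> float:
    """Simplified SDR: invert a pure power-law display with zero black level."""
    return (min(max(nits, 0.0), peak) / peak) ** (1.0 / gamma)


def signal_span(encode, low_nits: float, high_nits: float) -> float:
    """Fraction of the 0..1 signal range occupied between two luminances."""
    return encode(high_nits) - encode(low_nits)


if __name__ == "__main__":
    # Assumed, illustrative levels for reflective black and reflective white.
    black, white = 0.5, 100.0
    print(f"SDR span: {signal_span(sdr_encode, black, white):.2f}")
    print(f"PQ span:  {signal_span(pq_encode, black, white):.2f}")
    print(f"PQ signal at 1000 nits: {pq_encode(1000):.3f}")
```

With these assumptions the 0.5 to 100 nit band fills about 0.89 of the SDR signal but only about 0.39 of the PQ signal; the rest of the PQ range is reserved for highlights up to 10,000 nits. Placing reflective white above 100 nits in the PQ grade, as many HDR workflows do, would shift these numbers.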

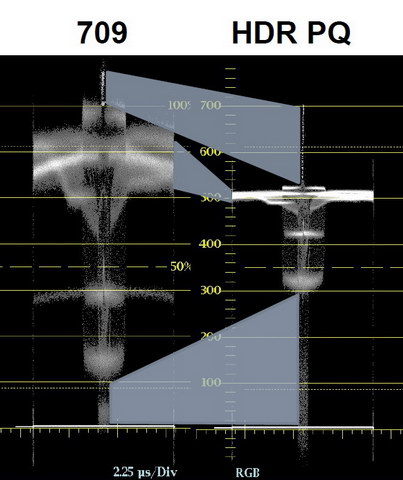

Waveforms for a Rec. 709 SDR signal compared to a HDR PQ signal. The critical zones 1 – 9 take up less of the total signal range in the HDR system compared to the SDR system. (Image: Tektronix)


With an HDR display, this doesn’t pose a problem. Although zones 1 – 9 get less of the signal space, the display’s high-brightness white and low black level mean that it dedicates as much or more of its light-level range to these zones as they would have gotten in an SDR system. Zones 0 and 10 get stretched to better match the range of the human visual system. This allows both the story to be told and visual reality to be matched, and the extra dynamic range can then carry part of the story itself.

This does pose a problem, however, in format conversion, when HDR content is shown on an SDR display. There are several ways to do the conversion, none of which is really acceptable. The simplest is to tone map the HDR image onto the SDR display so that the brightest highlight in the HDR image maps to the maximum brightness of the SDR display. To make room for this specular white, the reflective white and 18% gray levels must be pushed down to lower levels than they would have been if the signal had been SDR from the beginning. This lower brightness is very noticeable, and I have heard consumers complain about how dim HDR looks. The consumers are a little off-base: HDR signals on a true HDR display don’t look dim; only HDR signals shown on an SDR display do.
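The simplest tone mapping described above can be sketched as a single linear scale factor, which makes the dimming easy to quantify. The levels here are hypothetical: an HDR grade with a 1,000-nit specular peak, reflective white at 100 nits and 18% gray at 18 nits, shown on a 100-nit SDR display. Real tone mappers are usually nonlinear, so treat this as the worst case the paragraph describes rather than the behavior of any particular TV.

```python
import math


def scale_to_sdr(nits: float, hdr_peak: float, sdr_peak: float = 100.0) -> float:
    """Linearly scale so the brightest HDR highlight lands exactly at the SDR peak."""
    return nits * (sdr_peak / hdr_peak)


if __name__ == "__main__":
    hdr_peak = 1000.0  # hypothetical specular peak of the HDR grade
    levels = {"specular": 1000.0, "reflective white": 100.0, "18% gray": 18.0}
    for name, nits in levels.items():
        sdr = scale_to_sdr(nits, hdr_peak)
        stops_darker = math.log2(nits / sdr)  # how far each level drops, in stops
        print(f"{name:>16}: {nits:7.1f} nits -> {sdr:6.1f} nits "
              f"({stops_darker:.1f} stops darker)")
```

Every level drops by the same factor of ten, about 3.3 stops, so reflective white ends up near 10 nits instead of near the top of the SDR range.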

The second approach is to clip the specular whites so that reflective white and 18% gray are shown at or close to their normal SDR levels. This may be OK if the content creator used HDR just as a gimmick, but if HDR was actually used to help tell the story, then part of the story will be lost.
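Clipping is the opposite trade-off. In this minimal sketch, again assuming a hypothetical 100-nit SDR display, everything at or below the SDR peak passes through unchanged, so reflective white and 18% gray keep their levels, but highlights that were distinct in the HDR grade (assumed here at 400, 700 and 1,000 nits) all collapse to the same value.

```python
def clip_to_sdr(nits: float, sdr_peak: float = 100.0) -> float:
    """Hard clip: keep levels up to the SDR peak, flatten everything above it."""
    return min(max(nits, 0.0), sdr_peak)


if __name__ == "__main__":
    midtones = [18.0, 100.0]             # 18% gray and reflective white (assumed)
    highlights = [400.0, 700.0, 1000.0]  # hypothetical distinct specular levels
    print("midtones:  ", [clip_to_sdr(n) for n in midtones])
    print("highlights:", [clip_to_sdr(n) for n in highlights])
```

Any story detail carried by the differences among those highlights is gone after the clip.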

The metadata in the Dolby Vision and HDR-10 systems partially mitigates the conversion problem by telling the display which balance between tone mapping and clipping gives the best visual compromise when the content is shown on an SDR display. Still, the visual quality on the SDR display is likely to be not as good as it would have been if the content had been mastered for SDR in the first place.
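One way to picture a compromise between tone mapping and clipping is a knee curve: levels below a knee point pass through untouched, and the range from the knee up to the content's peak is compressed into the SDR display's remaining headroom. In the sketch below, `content_peak` stands in for a value a display might take from metadata, such as HDR-10's MaxCLL (maximum content light level); the knee position and the linear compression above it are assumptions for illustration. This is not the Dolby Vision or HDR-10 mapping, and real displays use smoother, vendor-specific curves.

```python
def knee_to_sdr(nits: float, content_peak: float,
                sdr_peak: float = 100.0, knee: float = 0.75) -> float:
    """Hypothetical knee tone map from HDR luminance to an SDR display.

    Levels up to knee * sdr_peak are unchanged; levels between that point and
    content_peak are compressed linearly into what is left of the SDR range.
    """
    nits = max(nits, 0.0)
    knee_nits = knee * sdr_peak
    if content_peak <= sdr_peak:
        return min(nits, sdr_peak)  # content already fits: nothing to compress
    if nits <= knee_nits:
        return nits                 # shadows and midtones pass through
    t = (min(nits, content_peak) - knee_nits) / (content_peak - knee_nits)
    return knee_nits + t * (sdr_peak - knee_nits)


if __name__ == "__main__":
    content_peak = 1000.0  # e.g. a MaxCLL value from metadata (hypothetical)
    for nits in (18.0, 100.0, 400.0, 700.0, 1000.0):
        print(f"{nits:7.1f} nits -> {knee_to_sdr(nits, content_peak):6.1f} nits")
```

With these assumptions 18% gray is untouched, reflective white drops only to about 76 nits rather than 10, and the 400, 700 and 1,000-nit highlights stay distinguishable at the cost of some highlight contrast. Knowing the content's actual peak is what lets the knee be placed sensibly, which is roughly the kind of information the metadata supplies.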

Is HDR Content Like 3D?

This puts HDR content creators in a position similar to that of 3D content creators. The best HDR content is different from the SDR content, just as the best 3D content is different from 2D content. Really, two different versions of a movie are needed: an HDR version and an SDR version. Since the vast majority of TV viewing for the foreseeable future will be on SDR displays, does it make economic sense to make the HDR version at all? For very high-end content shown in Dolby Cinema or other premium theaters, where viewers will be expected to pay a premium price for the HDR experience, the answer is probably yes. The HDR content can then be transferred to HDR Blu-ray discs to be shown on the very few $100K+ home cinema systems that can do it justice. The rest of the viewing public needs a native SDR version of the movie for watching on the vast majority of home theater systems and on all other TVs and mobile devices.

This, perhaps, isn’t an impossible economic barrier. Studios already produce multiple versions of content from a single master for viewing on a wide variety of devices, using a wide variety of standards and distribution channels. I’ve also heard people say that an SDR master derived from an HDR master can actually look better than native SDR. If studios are willing to produce an SDR master from every HDR master, fine. Everyone benefits, both the people watching the content in HDR and the people watching it in SDR. But if studios distribute only the HDR version and expect the TV to produce the SDR version, the vast majority of viewers will lose out in image quality. –Matthew Brennesholtz