As a follow-up to my HDR10 vs Dolby Vision article, I had a chance to talk to Patrick Griffis of Dolby Laboratories, Inc. on Monday about Dolby’s view of high dynamic range (HDR) systems. This balances the article Samsung, HDR and Industry Experts (Subscription required) I wrote after visiting the Samsung 837 venue, where I attended a workshop on HDR that focused on HDR10. Griffis is the Vice President of Technology in Dolby’s Office of the CTO and the SMPTE Vice President for Education. He has been at Dolby since 2008 and before that he had positions at Microsoft and Panasonic. At Dolby, he has been active in HDR for 4 – 5 years.

Two questions that haunt HDR, not just at Dolby, but everywhere the technology is being implemented, are “How black is black?” and “How bright is white?”

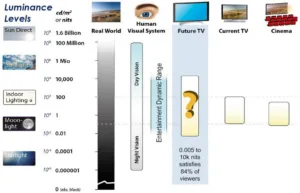

According to Griffis, the human visual system (HVS) can see just a handful of photons, perhaps 40. Seeing so few photons takes several hours of adaptation in total darkness, which is not a realistic TV-watching scenario. Dolby did human viewing tests showing that a “black” this black is not necessary. Their tests showed that a “black level” of 0.005 nits (cd/m²) satisfied the vast majority of viewers. While 0.005 nits is very close to true black, Griffis says Dolby can go down to a black of 0.0001 nits, even though there is no need or ability for displays to get that dark today. This 0.0001 nit black level is what you would see if your scene were lit only by starlight on a dark night. As a comparison, Standard Dynamic Range (SDR) content is typically mastered with a black level of 0.1 nit, comparable to what you would see on a moon-lit night.

How bright is white? Dolby says the range of 0.005 nits – 10,000 nits satisfied 84% of the viewers in their viewing tests – no need to go to blacker blacks and little need to go to brighter whites. While Griffis personally can see advantages to setting the white point to 20,000 nits instead of 10,000 nits, he can see the disadvantages, too. Displays now and in the near future simply cannot achieve 20,000 nits. He says the brightest consumer HDR displays today are about 1,500 nits. Professional displays where HDR content is color-graded can achieve up to 4,000 nits peak brightness, so Dolby Vision HDR content is mastered at a higher brightness than it is viewed by consumers. This is the reverse of the situation for SDR where the content is typically color-graded at 100 nits and then viewed by consumers at a higher brightness of 300 nits or more.

Dolby uses the Perceptual Quantizer Electro-optical Transfer Function (PQ EOTF) to encode light levels for Dolby Vision. This isn’t surprising since Dolby specifically developed the PQ EOTF for use in HDR systems like Dolby Vision. The PQ EOTF is also used for HDR10 content, except that HDR10 uses a 10 bit version and Dolby uses a 12 bit version of the PQ function. The PQ EOTF has been standardized as SMPTE ST 2084.
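For readers who want to experiment with the curve, the PQ EOTF is a short closed-form expression. The Python sketch below uses the constants published in SMPTE ST 2084. The function names are illustrative, and the code works on a normalized full-range signal from 0 to 1, which is a simplifying assumption: real video signals usually use narrow-range code values.

```python
# Constants as published in SMPTE ST 2084
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0  # luminance represented by a full-scale PQ signal


def pq_eotf(signal: float) -> float:
    """Convert a normalized PQ signal (0.0 to 1.0) to luminance in nits."""
    e = signal ** (1.0 / M2)
    return PEAK_NITS * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1.0 / M1)


def pq_inverse_eotf(nits: float) -> float:
    """Convert luminance in nits (up to 10,000) to a normalized PQ signal."""
    y = (nits / PEAK_NITS) ** M1
    return ((C1 + C2 * y) / (1.0 + C3 * y)) ** M2


if __name__ == "__main__":
    # Print where a few luminance levels mentioned in this article fall on the curve
    for nits in (0.005, 0.1, 100, 1000, 10000):
        print(f"{nits:>8} nits -> PQ signal {pq_inverse_eotf(nits):.4f}")
```

Multiplying the normalized signal by 1023 or 4095 gives an approximate full-range 10 bit or 12 bit code value, which is enough to see how the two bit depths divide up the same curve.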

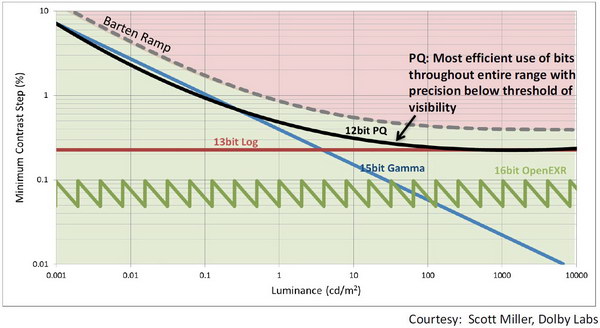

If too few bits are used to encode the EOTF, viewers may be able to see “contouring”: visible steps between two gray values whose code values differ by one count. Dolby claims 12 bits are necessary to avoid this problem and presented the figure below to demonstrate this issue.

If, at any given luminance level, the step between adjacent code values of an EOTF exceeds the threshold for the minimum visible contrast step, a viewer could see contouring in the image at that luminance level. In this image, Dolby uses the Barten Ramp as the threshold of visibility for contouring. It would take a 13 bit Log EOTF, a 15 bit Gamma EOTF or the 16 bit OpenEXR EOTF to remain below this threshold. Sensibly, Dolby chose the EOTF that required the fewest bits to encode the image without visible contouring: a 12 bit PQ EOTF. A 10 bit PQ EOTF would be above the threshold defined by this Barten Ramp at all luminance levels.

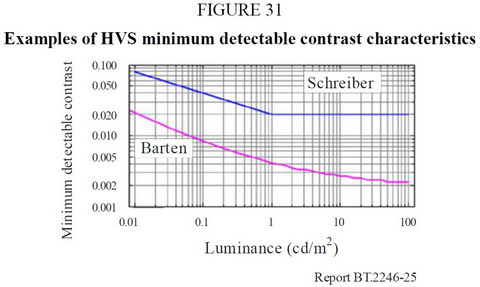

The question here is, “Is the Barten Ramp the correct threshold?” The Barten Ramp is calculated from P. G. J. Barten’s 1999 Contrast Sensitivity Function (CSF), a model that supposedly incorporated most of the important variables in the ability of the HVS to detect contouring in digital display systems. Note this is modeled rather than measured data and the model is based on data that predates the 1999 model. Measured data from W. F. Schreiber’s 1992 book shows the eye is not nearly as sensitive to contrast steps as Barten’s CSF model indicates, as shown in the image from ITU Report BT.2246-5-2015, below.

While a 10 bit version of the PQ EOTF would exceed the threshold based on the Barten CSF model, it would stay well below the threshold established by Schreiber’s measured data. The satisfactory application of the 10 bit PQ function by HDR10 supporters would seem to indicate that Barten sets too strict a limit. For example, at 1 nit of luminance, Barten says the HVS can detect a 0.5% brightness step but Schreiber says the HVS can only detect a 2.0% brightness step. This is a huge difference. Which is right? Depending on 1992 or 1999 data to determine something as important as the need for 10 or 12 bits doesn’t seem right – someone should repeat the experiments to find what the correct answer is.
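The size of a one-code-value step can be estimated numerically. The sketch below finds the code value nearest a target luminance and reports the relative luminance jump to the next code. It uses the constants from SMPTE ST 2084; the function names and the use of full-range code values are illustrative assumptions.

```python
# Constants as published in SMPTE ST 2084
M1, M2 = 2610 / 16384, 2523 / 4096 * 128
C1, C2, C3 = 3424 / 4096, 2413 / 4096 * 32, 2392 / 4096 * 32
PEAK_NITS = 10000.0


def pq_eotf(signal: float) -> float:
    """Normalized PQ signal (0.0 to 1.0) to luminance in nits."""
    e = signal ** (1.0 / M2)
    return PEAK_NITS * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1.0 / M1)


def pq_inverse_eotf(nits: float) -> float:
    """Luminance in nits to normalized PQ signal."""
    y = (nits / PEAK_NITS) ** M1
    return ((C1 + C2 * y) / (1.0 + C3 * y)) ** M2


def relative_step(nits: float, bits: int) -> float:
    """Relative luminance change between adjacent code values near `nits`.

    Assumes full-range coding (0 to 2**bits - 1), a simplification.
    """
    max_code = 2 ** bits - 1
    code = round(pq_inverse_eotf(nits) * max_code)
    code = max(1, min(code, max_code - 1))  # keep both neighbours valid and non-zero
    low = pq_eotf(code / max_code)
    high = pq_eotf((code + 1) / max_code)
    return (high - low) / low


if __name__ == "__main__":
    for bits in (10, 12):
        print(f"{bits} bit PQ at 1 nit: {relative_step(1.0, bits):.2%} per code value")
```

Under these assumptions, the step at 1 nit should come out at roughly 2% for 10 bits and roughly 0.5% for 12 bits, which is why the choice between the Schreiber and Barten thresholds matters so much to the 10 bit versus 12 bit argument.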

Another reason why HDR10 can use a 10 bit PQ EOTF is that HDR10 content is mastered over a narrower luminance range than Dolby Vision. HDR10 masters over a range of 0.05 – 1,000 nits, a 20,000:1 range of brightness levels, while Dolby Vision is mastered over a range of 0.0001 – 10,000 nits, a brightness range of 100,000,000:1. HDR10 doesn’t need to use code values to represent brightness levels from 1,000 – 10,000 nits or from 0.0001 – 0.05 nits, so fewer code values are needed and 10 bits are enough to represent all of them. The implementation of HDR10 in UltraHD Premium OLEDs runs from 0.0005 – 540 nits, a brightness range of 1,080,000:1. Since the HVS is relatively insensitive to contouring at very low brightnesses, according to either the Barten Ramp or Schreiber, no additional steps are required for this wider range of brightnesses compared to the LCD version of HDR10.
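The contrast ratio of each mastering range is simply peak white divided by black level. The short sketch below computes it from the endpoints quoted in this article and also expresses each range in photographic stops (doublings of luminance). The stops figure and the function names are additions for comparison, not something Dolby or the HDR10 specifications quote.

```python
import math


def contrast_ratio(black_nits: float, white_nits: float) -> float:
    """Ratio of peak white to black level."""
    return white_nits / black_nits


def dynamic_range_stops(black_nits: float, white_nits: float) -> float:
    """Dynamic range in stops, where one stop is a doubling of luminance."""
    return math.log2(white_nits / black_nits)


# (black level, peak white) in nits, as quoted in the article
RANGES = {
    "HDR10 (LCD)": (0.05, 1000),
    "HDR10 (UltraHD Premium OLED)": (0.0005, 540),
    "Dolby Vision": (0.0001, 10000),
}

if __name__ == "__main__":
    for name, (black, white) in RANGES.items():
        ratio = contrast_ratio(black, white)
        stops = dynamic_range_stops(black, white)
        print(f"{name}: {ratio:,.0f}:1, about {stops:.1f} stops")
```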

Dolby Vision is a proprietary system and brands must pay a license fee to Dolby to use it, while HDR10 is an open system without license fees. There are advantages to both approaches. A proprietary system is well defined and inter-operability is not a problem. An open system, particularly one early on the development curve, can have multiple contradictory approaches. While HDR10 seems to work and be compatible across systems, the deeper I looked into HDR10 and its underlying standards from SMPTE, the ITU and others, the more confused I got and the more worried I got about its lack of standardization.

Griffis said that for streaming video, Dolby Vision provides a backward-compatible framework for HDR. There is a base layer of SDR content encoded with a Gamma EOTF and decodable by any streaming video decoder for showing on an SDR display. Then there is an enhancement layer that includes all the necessary data to convert the SDR stream into an HDR stream, plus metadata that tells the Dolby Vision decoder exactly how to use this enhancement layer data to perform the SDR-to-HDR conversion. Griffis said the enhancement layer and the metadata add just 15% on average to the size of the bitstream. This allows streaming companies like Netflix to keep just one version of the file and then stream it to either SDR or HDR customers. An SDR (or HDR10-only) system discards the extra data and shows the SDR image. This saves storage space on the server, at the expense of higher streaming bit rates to SDR customers. Of course, in 2016, the vast majority of streaming customers are SDR customers and this streamed enhancement data is mostly discarded.

I’m not sure that this is a worthwhile saving: storage space is cheap and streaming bandwidth is relatively expensive. Besides, a streaming company like Amazon or Netflix doesn’t store just one version of a file; it stores multiple versions to allow for adaptive bit rates. If a customer can’t accept the full bit rate, the streaming company doesn’t decimate the file in real time to stream at a lower bit rate. Instead it starts to stream the version of the file that was compressed off-line with a higher compression ratio so it could be sent at a lower bit rate. Having one more file, a premium HDR version, doesn’t add much to their storage requirements.
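A back-of-the-envelope sketch makes the trade-off concrete. Only the 15% overhead comes from Griffis. The title size, the number of SDR views and the assumption that a standalone HDR encode is about 15% larger than the SDR one are hypothetical values chosen purely for illustration.

```python
ENHANCEMENT_OVERHEAD = 0.15  # Griffis's average for enhancement layer plus metadata


def single_file_extra_delivery_gb(title_size_gb: float, sdr_views: int) -> float:
    """Extra data streamed to SDR viewers, who discard the enhancement layer."""
    return title_size_gb * ENHANCEMENT_OVERHEAD * sdr_views


def separate_file_extra_storage_gb(title_size_gb: float) -> float:
    """Extra storage for keeping a standalone HDR encode next to the SDR one.

    Assumes (hypothetically) that the HDR encode is 15% larger than the SDR encode.
    """
    return title_size_gb * (1 + ENHANCEMENT_OVERHEAD)


if __name__ == "__main__":
    title_size_gb = 5.0   # hypothetical SDR encode size
    sdr_views = 10_000    # hypothetical number of SDR streams of this title
    wasted = single_file_extra_delivery_gb(title_size_gb, sdr_views)
    stored = separate_file_extra_storage_gb(title_size_gb)
    print(f"Single file: about {wasted:,.0f} GB of discarded data delivered")
    print(f"Separate HDR file: about {stored:.2f} GB of extra storage")
```

With these made-up numbers, the one-time storage cost of a separate HDR file is tiny compared with the enhancement data streamed to SDR customers and thrown away, and the gap grows with every additional SDR view.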

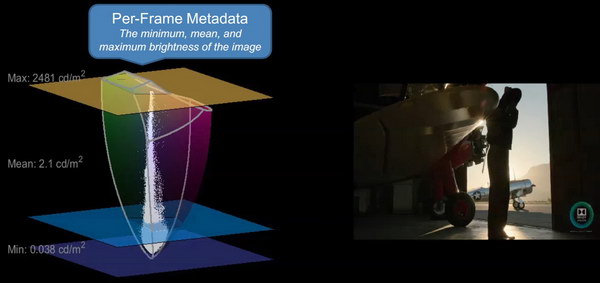

Dolby Vision per-frame metadata includes information on the minimum, mean and maximum brightness in a scene.

One thing Dolby Vision does that HDR10 does not do is send dynamic metadata on a per frame or per scene basis. HDR10 sends metadata on a per movie basis. Griffis said this would be OK if the viewer was watching the content on a display that was identical to the display the content was mastered on. Since it never is, it is necessary for the TV to adjust the content to match the artistic intent. The dynamic metadata provides information useful in this content adjustment.
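A toy example shows why per-scene information helps. The sketch below is not Dolby's metadata format or its tone-mapping algorithm. The `SceneStats` class, the linear `display_gain` function and every luminance value are hypothetical, chosen only to contrast a single per-movie peak with a per-scene peak.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class SceneStats:
    """Hypothetical per-scene metadata: minimum, mean and maximum brightness."""
    min_nits: float
    mean_nits: float
    max_nits: float


def scene_stats(pixel_nits: list[float]) -> SceneStats:
    """Summarize the luminance samples of one scene."""
    return SceneStats(min(pixel_nits), mean(pixel_nits), max(pixel_nits))


def display_gain(content_peak_nits: float, display_peak_nits: float) -> float:
    """Toy tone map: scale down linearly only when content exceeds the display."""
    return min(1.0, display_peak_nits / content_peak_nits)


DISPLAY_PEAK = 500.0  # hypothetical consumer display peak, in nits
MOVIE_PEAK = 4000.0   # hypothetical per-movie (static) peak, in nits
dark_scene = scene_stats([0.01, 5.0, 40.0, 300.0])  # hypothetical luminance samples

# Static metadata: every scene is scaled as if it might reach the movie's peak
static_gain = display_gain(MOVIE_PEAK, DISPLAY_PEAK)
# Dynamic metadata: this scene never exceeds the display, so it needs no scaling
dynamic_gain = display_gain(dark_scene.max_nits, DISPLAY_PEAK)

print(f"static gain: {static_gain}, dynamic gain: {dynamic_gain}")
```

In this simplified model, a dark scene that already fits within the display's range is dimmed needlessly when the TV knows only the per-movie peak, while per-scene metadata lets the TV leave it untouched.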

HiSilicon Hi3798C V200 SoC Demo Platform with Digital Tuner and Dolby Vision capability at IBC 2015. This SoC has a Quad core Cortex A53 processor at its heart. (Photo Credit: CNXSoft-Embedded Systems News)

To use the Dolby Vision proprietary system, it is necessary to buy a system on a chip (SoC) ASIC from one of Dolby’s partners: MStar, HiSilicon, MediaTek or Sigma Designs. According to Griffis, these four companies have 75% of the market for these types of SoCs, so HDR system makers are not particularly limited in their SoC sources. Griffis added that the SoC that can decode Dolby Vision can also decode HDR10, so it is not necessary for a TV maker to include two decoder chips in a dual Dolby Vision/HDR10 system.

When asked about the added cost of Dolby Vision compared to HDR10, Griffis said, “the incremental cost is minimal and frankly a fraction of the total TV cost which is much more driven by the display electronics and electronic components, not royalties.”

A final question: “Which will prevail, Dolby Vision or HDR10?” Tune in next year, or perhaps next decade, to find out. Since HDR brings something to TV that consumers can actually see at a fairly modest cost in both dollars and bits, HDR itself isn’t likely to fade away. –Matthew Brennesholtz