Increasing the image fidelity of VR content will require delivering more pixels to the end user. That has a big impact on bandwidth, and new ideas to solve this problem are starting to emerge. The basic idea companies are pursuing is to transmit at full resolution only a subset of the full 360º image, namely the part the viewer is looking at. How this is done varies, so below we highlight the techniques we learned about at NAB.

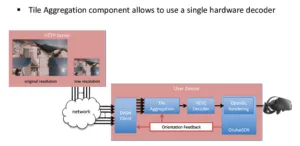

In its booth at NAB, Fraunhofer HHI gave a brief presentation on “Tile based DASH Streaming for Virtual reality with HEVC.” In this approach, the VR video content is stored on the server at two resolutions: original and low. The client device (i.e., the headset) then requests one of the two resolutions depending on its viewport, which is determined from the headset's 3D sensors.

HHI does not use the popular equirectangular projection for the VR video, however. Instead, it uses a cube projection and divides each cube face into 4 tiles, giving 24 tiles in total. Each tile is encoded at both high and low resolution, so 48 bitstreams are created. These are then merged into a single HEVC bitstream, so only one bitstream is delivered to the client.
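To make the tile arithmetic concrete, here is a minimal Python sketch of the server-side layout. The face labels and function names are hypothetical; only the counts (6 faces × 4 tiles × 2 resolutions) come from the description above. For each tile, the merged stream sent to the client carries exactly one of the two stored versions.

```python
from itertools import product

# Hypothetical labels for the six cube faces; HHI's naming was not described.
FACES = ["+x", "-x", "+y", "-y", "+z", "-z"]
TILES_PER_FACE = 4            # 2 x 2 tiles per face -> 24 tiles in total
RESOLUTIONS = ("high", "low")


def all_representations():
    """Every (face, tile, resolution) bitstream stored on the server."""
    return [
        (face, tile, res)
        for face, tile, res in product(FACES, range(TILES_PER_FACE), RESOLUTIONS)
    ]


def merged_selection(high_res_tiles):
    """Choose one resolution per tile; these 24 choices make up the client's stream."""
    return {
        (face, tile): "high" if (face, tile) in high_res_tiles else "low"
        for face, tile in product(FACES, range(TILES_PER_FACE))
    }


print(len(all_representations()))  # 48
```

The server stores all 48 versions, but any one client only ever receives 24 tiles at a time.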

At the user end, the headset's orientation sensors report the current field of view to the DASH client, which uses it to determine which tiles should be delivered at high resolution and which at low resolution. The tiles are then decoded and displayed in the headset.
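The viewport-to-tile decision can be sketched as follows, assuming the headset reports yaw and pitch, each cube face carries a 2 × 2 tile grid, and an axis convention chosen here purely for illustration (+z straight ahead, +y up). Sampling a grid of directions across the field of view only approximates the true viewport, and none of these function names come from HHI's implementation.

```python
import math


def direction(yaw_deg, pitch_deg):
    """Unit view vector; assumed convention: +z ahead, +y up, yaw turns toward +x."""
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    return (math.cos(pitch) * math.sin(yaw), math.sin(pitch), math.cos(pitch) * math.cos(yaw))


def cube_tile(vec):
    """Map a direction to (face, tile index) on a cube with 2 x 2 tiles per face."""
    x, y, z = vec
    ax, ay, az = abs(x), abs(y), abs(z)
    # The dominant axis picks the face; the other two coordinates,
    # divided by it, give face coordinates (u, v) in [-1, 1].
    if ax >= ay and ax >= az:
        face, u, v = ("+x" if x > 0 else "-x"), z / ax, y / ax
    elif ay >= az:
        face, u, v = ("+y" if y > 0 else "-y"), x / ay, z / ay
    else:
        face, u, v = ("+z" if z > 0 else "-z"), x / az, y / az
    col = 0 if u < 0 else 1
    row = 0 if v < 0 else 1
    return face, row * 2 + col


def tiles_in_view(yaw_deg, pitch_deg, hfov_deg=90.0, vfov_deg=90.0, steps=9):
    """Approximate the set of tiles in the viewport by sampling a grid of directions."""
    seen = set()
    for i in range(steps):
        for j in range(steps):
            d_yaw = -hfov_deg / 2 + hfov_deg * i / (steps - 1)
            d_pitch = -vfov_deg / 2 + vfov_deg * j / (steps - 1)
            pitch = max(-90.0, min(90.0, pitch_deg + d_pitch))  # clamp at the poles
            seen.add(cube_tile(direction(yaw_deg + d_yaw, pitch)))
    return seen
```

Tiles in the returned set would be requested at high resolution and the remaining tiles at low resolution. A wider field of view quickly pulls in tiles from neighbouring cube faces.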

This approach keeps latency low when adapting to a changing viewport by keeping the I-frame interval short. The trade-off is some loss in encoding efficiency.
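One hedged way to read this trade-off, assuming "small" refers to a short interval $T_I$ between I-frames (random-access points) in each tile's stream: a tile can only switch from low to high resolution at its next I-frame, so after a head movement the switching delay is roughly

$$
\Delta_{\text{switch}} \le T_I + T_{\text{fetch}}, \qquad \mathbb{E}\left[\Delta_{\text{switch}}\right] \approx \frac{T_I}{2} + T_{\text{fetch}}
$$

where $T_{\text{fetch}}$ (request, download and decode time) is an assumed term the presentation did not quantify, and the average assumes head movements happen at uniformly random times. Halving $T_I$ halves the I-frame wait but doubles the number of I-frames, which cost far more bits than predicted frames; that would account for the loss in encoding efficiency.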

Fraunhofer HHI is now working with companies to license this technology and also working with the MPEG organization to develop a standard around this approach.

Nokia had a demo featuring musicians playing on a rooftop in London, captured in stereoscopic 3D 360º VR with its Ozo camera. The demo illustrated the capabilities of Nokia's flexible distribution solution.

Nokia explained that the HEVC standard includes profiles that allow great flexibility in how portions of the image are segmented and encoded. The company said it encodes the 4K VR video as a base layer plus higher-resolution enhancement layers, though it was not clear whether it is using the Fraunhofer HHI approach. The base layer covers the full 4K video, while the enhancement layers are created for sections, or tiles, within the image. A tile is selected based on what the user is looking at, making this an adaptive viewport solution.

Parameters that can be optimized include the resolution and compression of the base layer, the field of view of each tile, the compression of the enhancement layers, and the latency that can be tolerated between reporting the headset's viewport position and sending the correct tile enhancement information. This can get complicated when two or more tiles fall within the user's field of view.
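A simplified sketch of the overlap problem: assume, hypothetically, that the enhancement tiles are equal yaw slices around the viewer (for example 60º tiles, the value Nokia used in its demo) and ignore pitch. Widening the viewport by head speed × tolerated latency is one assumed way to model the latency parameter; Nokia did not describe how it handles this.

```python
import math


def enhancement_tiles(yaw_deg, hfov_deg, tile_fov_deg=60.0, head_speed_dps=0.0, latency_s=0.0):
    """Indices of yaw-slice tiles overlapping the horizontal viewport.

    Tile k is assumed to cover yaw [k * tile_fov_deg, (k + 1) * tile_fov_deg).
    The viewport is widened on each side by how far the head could turn
    (head_speed_dps * latency_s) before the requested tiles arrive.
    """
    n_tiles = round(360 / tile_fov_deg)
    if abs(n_tiles * tile_fov_deg - 360) > 1e-9:
        raise ValueError("tile_fov_deg must divide 360")
    half = hfov_deg / 2 + head_speed_dps * latency_s
    if 2 * half >= 360:
        return set(range(n_tiles))
    first = math.floor((yaw_deg - half) / tile_fov_deg)
    last = math.ceil((yaw_deg + half) / tile_fov_deg) - 1
    # Modulo wraps tile indices across the 0/360 seam.
    return {k % n_tiles for k in range(first, last + 1)}


print(sorted(enhancement_tiles(30, 60)))   # [0]
print(sorted(enhancement_tiles(60, 60)))   # [0, 1]
print(sorted(enhancement_tiles(30, 60, head_speed_dps=120, latency_s=0.25)))  # [0, 1, 5]
```

With a viewport centred on a tile, one enhancement tile suffices; shift the gaze by half a tile and two are needed, and any latency margin adds more. Each extra tile costs bandwidth, which is why these parameters have to be tuned together.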

In the Nokia demo, the company chose what seemed to be a very low-resolution, highly compressed base layer so that an image would always be visible. This showed up from time to time as very blocky parts of the image. Each enhancement tile covered a 60º field of view, and the data rate was 14 Mbps.

The good aspects of the demo were that an image was always displayed, the spatial audio worked very well, and the tracking-to-display of full-resolution images was faster than I expected: after a quick head movement, the image took about 1 second to “snap” from low to high resolution. It was unclear, however, where the source content actually resided: locally, in the cloud, or on a remote server.

The negative aspects were the base layer, which was at times very noticeably poor, along with some stitching artifacts and some stereoscopic artifacts.

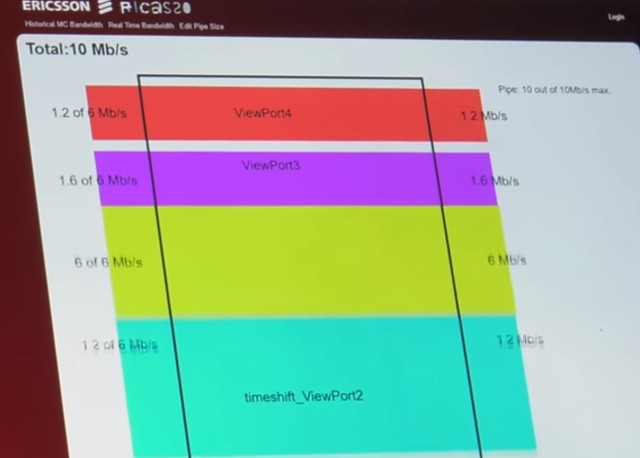

Ericsson showed a VR demo that packages 8K media in a different way. The demo was like having a sports bar in your VR headset. The idea is to create a CG environment with a series of TV screens, in this case four of them arranged in a circle around the viewer. Each screen shows 2K source material fed by an adaptive bit rate HEVC encoded stream ranging from 0.4 Mbps to 6 Mbps. When a channel is in the viewer’s field of view, a higher bit rate feed is used, while the other channels run at much lower rates until they come into view as the user turns their head. The total stream for all four channels in the demo was 10 Mbps.
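The per-channel rate decision can be sketched as a simple budgeted allocation. The rate ladder and the greedy policy below are assumptions for illustration; only the 0.4 to 6 Mbps range, the four channels and the 10 Mbps total come from the demo, and Ericsson did not describe its actual logic. A channel the user has time-shifted is given zero rate.

```python
# Hypothetical rate ladder (Mbps) within the 0.4-6 Mbps range Ericsson quoted.
LADDER_MBPS = [0.4, 1.0, 2.0, 3.0, 4.5, 6.0]


def allocate(channels_in_view, n_channels=4, budget_mbps=10.0, paused=frozenset()):
    """Greedy per-channel bit rate allocation under a total budget.

    Every active channel starts on the lowest rung; time-shifted (paused)
    channels get 0. Channels in view are then upgraded, in the order given,
    to the highest rung that still fits the budget.
    """
    rates = {ch: 0.0 if ch in paused else LADDER_MBPS[0] for ch in range(n_channels)}
    if sum(rates.values()) > budget_mbps:
        raise ValueError("budget too small for the lowest rung")
    for ch in channels_in_view:
        if ch in paused:
            continue
        # Budget left for this channel once every other channel is paid for.
        spare = budget_mbps - (sum(rates.values()) - rates[ch])
        rates[ch] = max((r for r in LADDER_MBPS if r <= spare), default=LADDER_MBPS[0])
    return rates


print(allocate([0]))  # {0: 6.0, 1: 0.4, 2: 0.4, 3: 0.4}
```

Totals from this sketch (7.2 Mbps with one channel in view) will not necessarily match the 10 Mbps observed in the demo; the point is only that channels outside the field of view sit at a low rate until the user turns toward them.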

One interesting feature was the ability for the user to time-shift one of the channels. This dropped that channel's data rate to zero and allowed catch-up viewing whenever the user wanted to watch that content. Other, more ‘sports bar-like’ configurations are possible too. – CC