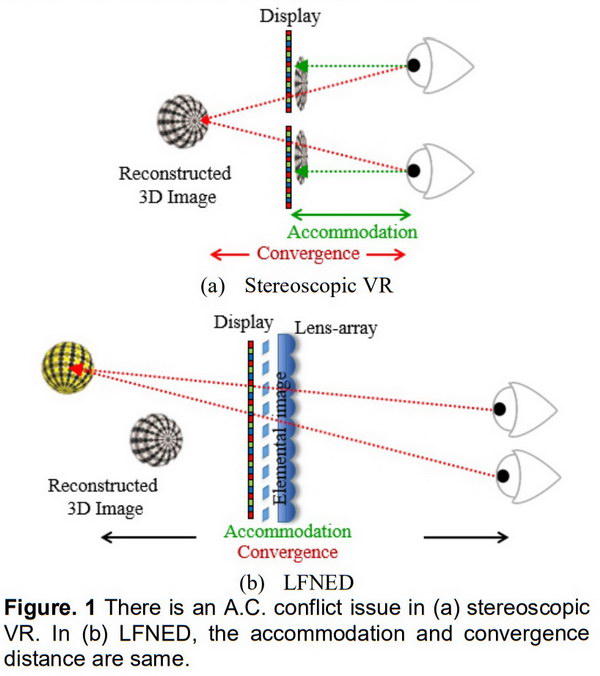

A hot topic at the SID Technical Symposium this year, and last year too, was Near Eye Displays (NEDs) for Augmented Reality (AR) and Virtual Reality (VR) that include accommodation for the eye. There are two circumstances that are said to need this accommodation. First, in stereoscopic AR or VR systems, it provides a solution to the accommodation/convergence (A/C) issue, where the eyes converge at a different distance from where they focus. In the real world, accommodation and convergence always happen at the same distance. This discrepancy in the virtual world can lead to visual fatigue and other problems and may contribute to VR sickness.

The second problem applies to monoscopic as well as stereoscopic see-through AR displays. In see-through displays, the visual information can be displayed in association with a specific real object in the see-through field of the AR HMD. For example, the AR display may show information about a statue you are looking at. In this case the displayed information should be in the same focal plane as the real object, allowing the AR user to focus simultaneously on both the real object and the displayed information associated with it.

One problem not addressed by any of the papers at SID was, once you have an adjustable focal plane, at what distance do you place that plane from the HMD user’s eye? With VR 3D content where the computer knows the depth of all objects in the field of view, the only problem is determining where the current user’s gaze point is and this can be determined by head and eye tracking. In AR systems, where the system controller does not normally know what the depths of the objects in the FOV are, it is necessary to implement a 3D sensor system to determine these depths. This system can either be a stereoscopic camera system, a time-of-flight camera or some other depth determining system.
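As a rough illustration of the gaze-point step, the fixation distance can be estimated from the vergence angle between the two eyes' lines of sight, and the focal plane then set to the matching optical power. The sketch below assumes symmetric fixation on the midline and a 63mm interpupillary distance. The geometry, the function names and the numbers are my own assumptions for illustration and do not come from any of the SID papers.

```python
import math

def vergence_angle_rad(ipd_m, distance_m):
    """Angle between the two lines of sight for a midline target."""
    return 2.0 * math.atan(ipd_m / (2.0 * distance_m))

def fixation_distance_m(ipd_m, vergence_rad):
    """Invert the relation above; parallel gaze maps to infinity."""
    if vergence_rad <= 0.0:
        return math.inf
    return ipd_m / (2.0 * math.tan(vergence_rad / 2.0))

def focal_plane_diopters(distance_m):
    """Optical power at which the focal plane should be placed."""
    return 1.0 / distance_m

if __name__ == "__main__":
    ipd = 0.063  # assumed interpupillary distance in meters
    for d in (0.25, 0.5, 2.0):
        angle = vergence_angle_rad(ipd, d)
        recovered = fixation_distance_m(ipd, angle)
        print(d, math.degrees(angle), recovered, focal_plane_diopters(recovered))
```

In a real HMD the eye tracker would supply the vergence angle, and measurement noise grows quickly at long distances because the angle changes very little there.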

There are several main technologies discussed at Display Week to achieve this result:

- Maxwellian displays where all objects at all distances on the NEDs are shown in focus.

- Light field displays that reproduce the light fields of the items shown on the NEDs.

- Holographic display systems that produce a volumetric image.

- Multi-plane displays that have a finite number of display planes at different distances.

- Vari-focus systems that use a variable focal length lens to display a single image plane at various visual distances.

- Adaptive focus systems where either the microdisplay or the associated lens is moved by a mechanical actuator to provide a focal plane at various distances.

- Retinal projection displays.

For those interested in this topic, Display Week began at 8:30 Monday morning with Seminar SE-1, presented by Gordon Wetzstein, Assistant Professor of EE at Stanford University and titled “Computational Near-to-Eye Displays with Focus Cues.” This seminar first gave an introduction to NEDs and the reasons why NEDs should have dynamic focus. In addition to the needs of providing an image at the correct distance and focus, a NED can also adjust for myopia (nearsightedness) and hyperopia (farsightedness). This would ease the problems of VR HMD design for the manufacturers – the systems do not need to accommodate users wearing glasses because the correction provided by the glasses can be replaced by correction provided by the NED. Wetzstein provided examples of most of the different technologies listed above that can provide the needed image focus.

A second seminar, SE-10, was presented by Nikhil Balram, Sr. Director of Engineering for AR/VR at Google, and was titled “Light Field Displays.” This seminar gave a good explanation of how light field displays work and then discussed applications of the technology. As might be expected from Google, the applications focused on NED applications of light field displays (45 slides) rather than multi-user, large image applications (10 slides).

For those especially interested in AR and VR NEDs and willing to arrive in Los Angeles a day early, two of the four-hour Sunday Short Courses were relevant. Achin Bhowmik, Chief Technology Officer and EVP of Engineering at Starkey Hearing Technologies, presented Short Course S-2: “Fundamentals of Virtual and Augmented Reality Technologies.” Short Course S-4, titled “Fundamentals of MicroLED Displays” and presented by Ioannis Kymissis, Associate Professor of Electrical Engineering at Columbia University, was less immediately relevant to the AR/VR community. No current HMD systems use a microLED display since the technology is still in the early R&D phase. When it becomes available, however, it is highly likely it will be used in HMDs, especially in see-through AR HMDs, which need the extremely high brightness that microLEDs are capable of producing.

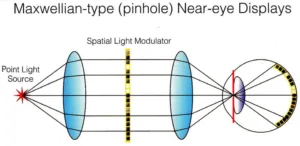

Maxwellian NED Displays

A simple Maxwellian NED. (Credit: Gordon Wetzstein, Stanford University)

A Maxwellian type display might seem to be the simplest solution to the focus problem with NEDs since all images are in focus for all observers. The problem with these displays, however, is that they are based on a pinhole light source and provide a very small (pinhole-sized) eye box. As the eye moves to look off-axis, the pupil of the eye moves out of this eye box and the image is lost. For this technique to work, it is necessary to have eye tracking and pupil steering in the NED system.

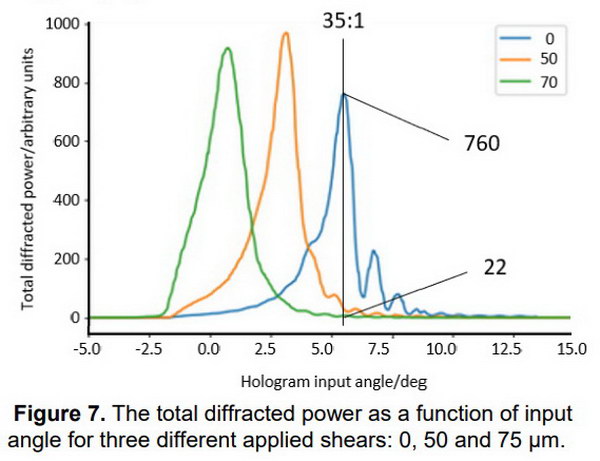

Pupil Steering from Bragg Grating Shear (Source: SID 2018 paper 17.2)

Adrian Travis and his three colleagues at Cambridge University, Microsoft Research Cambridge and Seoul National University presented paper 17.2, titled “Shearing Bragg Gratings for Slim Mixed Reality.” This paper discussed a shearing technique between the front and back substrates of a volume Bragg grating that shifted the exit pupil of the system to track the entrance pupil of the eye in this waveguide-based NED. At first, this approach seemed improbable to me, but since the shear required is only on the order of 50 – 70µm and the authors showed experimental evidence that the technique worked, I’m willing to accept the technique as potentially feasible. Travis gave a second presentation at the Immersive Experience Conference on another technique for pupil steering. This technique used adaptive mirrors at the input to the waveguide to adjust the input pupil position which, in turn, adjusted the output pupil position to steer it into the pupil of the eye. Both of the pupil steering techniques Travis proposed, of course, required the HMD to have eye tracking to work.

Light Field NEDs

Paper 10.2 was titled “A Deep Depth of Field Near Eye Light Field Displays Utilizing LC Lens and Dual-layer LCDs” and was from Mali Liu and his two colleagues from Zhejiang University. They combined light field technology with a vari-focus LC lens to provide a NED with an unusually wide range of focus depths.

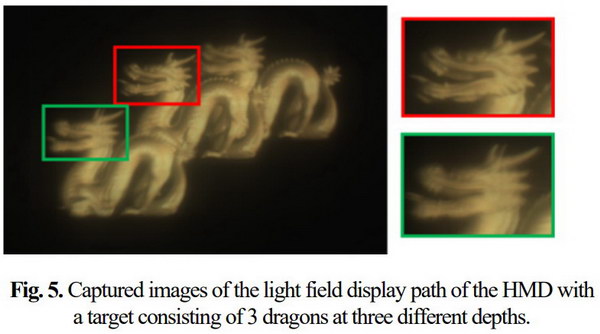

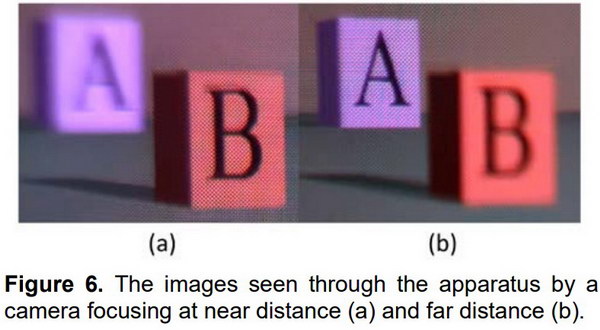

Objects at various depths shown on an integral imaging HMD. (Source: SID 2018 paper 10.3)

Hekun Huang and his colleague from the University of Arizona presented paper 10.3 titled “Design of a High-performance Optical See-through Light Field Head-mounted Display.” This NED used integral imaging, a variation on light field technology, to produce its depth. In addition to the micro-integral imaging microdisplay, the system used a tunable focal length lens. Due to the low resolution of the images generated by the integral imaging system, it is difficult or impossible to see the focus change in the images presented by the authors, shown above.

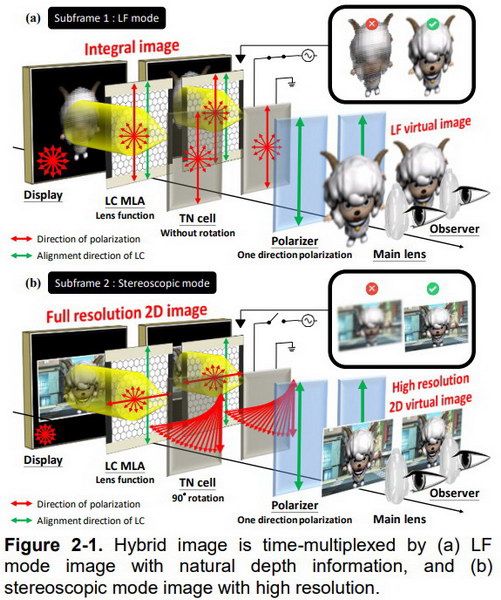

Light Field Display Time Multiplexed with a Stereoscopic Display to Increase Resolution. (Source: SID 2018 paper 10.4)

Chun-Ping Wang and his colleagues at National Chiao Tung University and AU Optronics gave paper 10.4 titled “Stereoscopic / Light Field Hybrid Head-mounted Display by Using Time-multiplexing Method.” They describe the use of a stereoscopic VR HMD where a light field system is used to resolve the A/C conflict. The system time multiplexes a conventional stereoscopic display with a light field display by using liquid crystal lenses. When the lenses are on, each microdisplay is a light field display with its depth of field but lower resolution. When the lenses are off, a conventional high-resolution stereoscopic image is shown. Multiplexing these two techniques together increases the system resolution while still maintaining sufficient visual depth that is said to resolve the A/C problem.

Poster paper P-94 titled “Free-form Micro-optical Design for Enhancing Image Quality (MTF) at Large FOV in Light Field Near Eye Display” was from Jui-Yi Wu and his colleagues at National Chiao Tung University and Coretronic Corporation. Most light field displays use an array of identical lenslets with spherical surfaces to form the light field. This paper showed it was possible to optimize each lenslet depending on its position in the FOV. This significantly increased resolution for off-axis parts of the image.

Holographic display systems

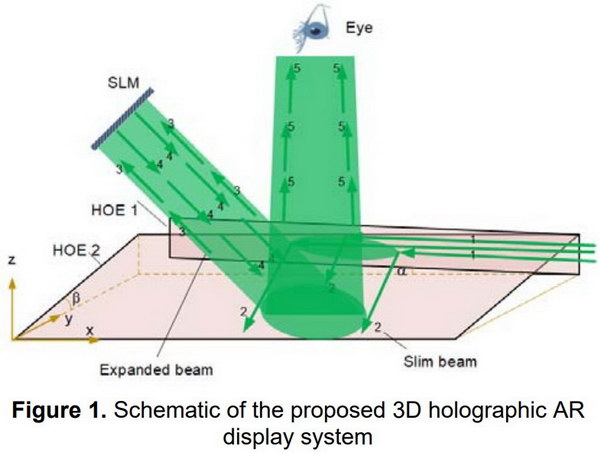

Illumination beam expansion using two see-through holographic gratings (Source: SID 2018 paper 17.1)

Paper 17.1 from Pengcheng Zhou and his colleagues at Shanghai Jiao Tong University was titled “A Flat-panel Holographic-optical-element System for Holographic Augmented Reality Display with a Beam Expander.” In this system, the 532nm illumination beam for the holographic spatial light modulator (SLM) enters the system from the right and is expanded to fill the SLM in a two-step process using two different see-through holographic gratings. After the beam illuminates the SLM and is modulated with the desired image, it is reflected back toward the eye. At wavelengths other than the illumination wavelength, the holographic grating is see-through and allows the wearer to see the outside world. The use of a holographic SLM and holographic optical elements (HOEs) avoids the necessity of conventional lenses in the system and produces an image with a significant depth of field. This was not just a paper or theoretical exercise: the authors provided photos of their prototype holographic display system in operation.

(Source: SID 2018 paper P-96)

Poster paper P-96 titled “Augmented Reality Holographic Display System Free of Zero-order and Conjugate Images” was from Chun-Chi Chan and his colleagues at National Taiwan University. The paper described a bench-top holographic display system that could ultimately be reduced to HMD size. The authors used the modified Gerchberg-Saxton algorithm (MGSA) to convert the 3D model image into holographic data for the SLM. Unfortunately, like most 3D to holographic algorithms, this took a lot of computing: 20 iterations over 15.76s, which represented a 26% improvement over previous algorithms.

Multi-plane displays

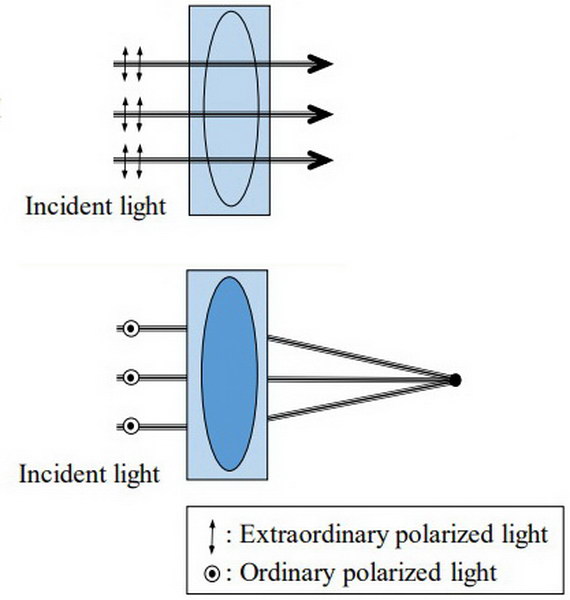

Birefringent Lens (Source: SID 2018 paper 79-1)

Paper 79-1 was titled “Accommodative AR HMD Using Birefringent Crystal” and presented by Byoungho Lee and his colleagues at Seoul National University. Their system used a birefringent lens plus two LCD image display panels. The lens was made out of a birefringent material and placed in an index-matching oil bath. For one polarization of light, this lens allowed the light to pass straight through, but for the other polarization it had a positive focal length and moved the image focal plane. The combination of this dual focal length lens plus two LCDs created four different image planes in the HMD wearer’s field of view. The view of the real world was polarized so that it passed straight through the lens, and the real world was therefore seen at the correct distance at all times.

(Source: SID 2018 paper 79-2)

Paper 79-2 was titled “Compact See-through Near-eye Display with Depth Adaption” and was presented by Yun-Han Lee and his colleagues from the University of Central Florida (UCF). Their system was based on Pancharatnam-Berry deflectors and lenses (PBDs and PBLs). These devices are sensitive to left vs right circularly polarized light, and a Pancharatnam-Berry optical element can be analyzed as a patterned half-wave plate. By switching the polarization of light between left and right circularly polarized, the UCF system allows the generated image to be shown at two different focal planes. Like the Seoul National University system described above, the UCF system polarizes the light from the real world to always show it at its correct distance.

(Source: SID 2018 paper P-201)

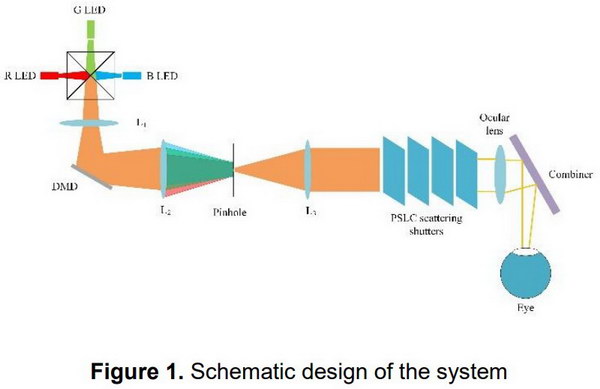

Poster paper P-201 titled “Design of Full-color Multi-plane Augmented Reality Display with PSLC Scattering Shutters” was from Shuxin Liu and his colleagues at Shanghai Jiao Tong University. The paper discussed a bench-top DLP projector that was time multiplexed onto six different polymer-stabilized liquid crystal (PSLC) screens that could be switched from clear to diffusing. This gave multiple focal planes to provide the depth cues in an AR system. Technology similar to this has been used to provide volumetric 3D displays. The failure of this approach in large sizes was due to the fact that in the transparent state there was some residual scattering that degraded the images unacceptably. While the authors discuss scattering in the clear state, they do not discuss its impact on the image quality of the demonstration system. The photos they provide, however, show clear evidence of unacceptable scattering in the multi-shutter system.

Vari-focus systems

Vari-focal systems with electronically controllable lenses were, perhaps, the most common type of system to provide variable depth. Three papers at SID 2018 discussed vari-focal NEDs.

Paper 10.1 titled “Towards Varifocal Augmented Reality Displays using Deformable Beamsplitter Membranes” was presented by David Dunn from the University of North Carolina and his four colleagues, one of whom was from Nvidia. In this design a stretched, reflective, deformable membrane was deformed using differential air pressure into a variable radius spherical surface to provide the vari-focal mechanism. The prototype of this system could provide image distances from 10 – 800cm, covering the entire needed range of focal planes. One problem with the system was that the virtual image brightness varied with the image distance. This problem can be solved, perhaps, either by improved membrane coating or dynamically varying the display brightness to match distance.
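Focus ranges are often easier to compare in diopters (the reciprocal of the distance in meters) than in centimeters. The short sketch below converts the 10cm and 800cm endpoints quoted above. The function name is my own, and the conversion is simple arithmetic, not something taken from the paper.

```python
def distance_cm_to_diopters(distance_cm):
    """Optical power in diopters for a focal plane at the given distance."""
    return 100.0 / distance_cm

near_d = distance_cm_to_diopters(10.0)   # nearest image plane
far_d = distance_cm_to_diopters(800.0)   # farthest image plane
focus_range_d = near_d - far_d

print(near_d, far_d, focus_range_d)  # 10.0 0.125 9.875
```

Seen this way, the prototype spans almost 10 diopters, with the far end only 0.125 diopter away from optical infinity.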

Paper 65-5, titled “Resolving the Vergence Accommodation Conflict in VR and AR via Tunable Liquid Crystal Lenses” by Yoav Yadin and his colleagues at Deep Optics, used a liquid crystal lens in a vari-focal optical system to place the virtual image at various distances from the HMD user. It is not straightforward to implement an LC lens that can adjust the image position sufficiently to resolve the vergence accommodation conflict in HMDs. For example, implementing a 1 diopter lens (f=1m) with a 2cm aperture requires a phase modulation of 200π. Typically, an LC cell is designed for a phase modulation on the order of 2π, and a 3 diopter lens is needed to provide sufficient accommodation. The approach taken by Deep Optics is to use a pixelated LC cell rather than a cell dedicated by design to a circular lens. This allows them to implement an LC Fresnel lens with sufficient power. The pixelated approach allows several desirable features including continuously tunable focal lengths, positive and negative focal lengths and an optical axis that can be shifted from the center of the panel as needed. These features also allow foveated focusing, where a small diameter, higher diopter lens is implemented in the foveal region of the eye. As the eye moves, the lens can be moved around on the LC panel to follow the eye. This second approach, foveated focusing rather than a Fresnel lens, was used by Deep Optics in their example images.
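The 200π figure can be checked with the paraxial thin-lens phase profile, φ(r) = πr²/(λf), evaluated at the edge of the aperture. The sketch below assumes a 500nm wavelength, which the text does not state but which reproduces the quoted number. The function names are my own, and the count of 2π wraps is only an idealized indication of how many Fresnel zones a wrapped lens would need.

```python
import math

def edge_phase_rad(power_diopters, aperture_diameter_m, wavelength_m):
    """Center-to-edge phase of an ideal thin lens (paraxial approximation)."""
    radius_m = aperture_diameter_m / 2.0
    return math.pi * radius_m ** 2 * power_diopters / wavelength_m

def fresnel_wraps(power_diopters, aperture_diameter_m, wavelength_m):
    """Number of 2*pi phase resets if the lens is wrapped as a Fresnel lens."""
    return edge_phase_rad(power_diopters, aperture_diameter_m, wavelength_m) / (2.0 * math.pi)

WAVELENGTH_M = 500e-9  # assumed wavelength, not given in the text
APERTURE_M = 0.02      # 2cm aperture, from the text

for power in (1.0, 3.0):
    phase_in_pi = edge_phase_rad(power, APERTURE_M, WAVELENGTH_M) / math.pi
    wraps = fresnel_wraps(power, APERTURE_M, WAVELENGTH_M)
    print(power, round(phase_in_pi), round(wraps))
```

Under these assumptions a 1 diopter lens needs about 200π of phase and a 3 diopter lens about 600π, or roughly 300 wraps of a 2π cell, which is why a Fresnel-style design is attractive.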

Poster paper P-99 titled “Optical Methods for Tunable-Focus in Augmented Reality Head-Mounted Display” was from Yu-Chen Lin and his colleagues at National Taiwan University. This paper used a commercially available variable focal length lens to adjust the depth position of the image. The authors noted that the system produced image distortion which could be corrected electronically in the source image. They were also one of the few groups of authors to mention that the system needed a 3D sensor to determine exactly where the depth of the AR image should be set.

Adaptive focus systems

No papers discussing this type of design were presented at SID’s Display Week.

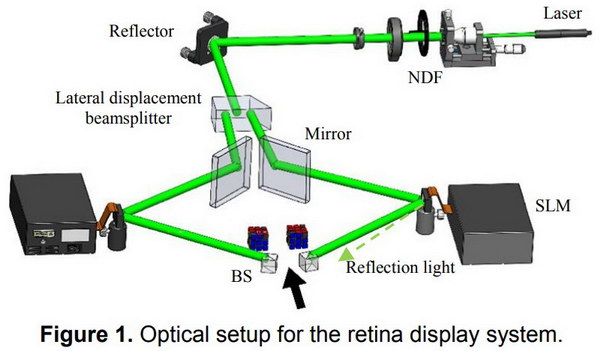

Retinal Projection

The only example of retinal projection I saw at Display Week was paper P-100 titled “Ultra-Large Field-of-View Retinal Projection Display with Vision Correction” from Wenbo Zhang and his seven colleagues at Shanghai Jiao Tong University. This paper discussed the use of an OLED microdisplay as an image source to project an image onto the retina. This paper was theoretical and simulated – the authors did not build or discuss a prototype.

Conclusions

There are many, many ways to provide a variable depth image plane for an AR or VR HMD. In addition to the ones presented at SID this year, I remember just as many presented at SID in 2017. To the best of my knowledge, however, none of the AR or VR HMDs currently on the market provide a variable depth image. In fact, many of the systems discussed at SID this year and last year seem like science fair projects for PhD candidates – how many ways can you rearrange known holographic, optical, opto-electronic and mechanical components in a way that provides a compact system with a variable focal plane?

Typical explanation of the Accommodation/Convergence Problem. (Source: SID 2018 paper 3-3)

I’d like to see a human factors study that shows that a variable depth imaging system is actually needed to avoid VR sickness or other problems. All the explanations of the problem I’ve seen so far are hand-waving explanations similar to the image above that say there could be a problem, not hard data that shows there is a problem. Typically, to solve the A/C “problem” you give up a lot since the variable accommodation systems are heavy, complex, expensive and normally reduce image brightness and resolution, and I’d like to see this all justified with hard data. –Matthew Brennesholtz

Analyst Comment

The idea of a display to solve the convergence/accommodation conflict was also discussed by Oculus in one of the Keynotes (KN02 Oculus Looks for Windows on the Real World).