One of the sessions at the “Future of Cinema” conference organized by SMPTE at NAB focused on the light field ecosystem. Speakers described their current work, and it is clear that progress is being made in advancing this still-immature ecosystem.

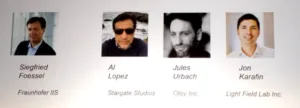

Siegfried Foessel from Fraunhofer IIS moderated the session and gave an introductory talk. Light field capture uses either outward-looking arrays (multiple cameras or plenoptic designs) or inward-looking arrays (cameras arranged in a circle or sphere around a scene). The more densely packed the cameras are, the higher the fidelity of the capture and of any subsequent processing.

Once you have light field data from an array of cameras, there is much you can do with it. For one, processing can create a 3D model of the scene. Because any point in the scene is seen by multiple cameras, its depth can be determined, and some of its material properties can be inferred from how the captured light behaves. The content can then be imported into a game engine with very sophisticated texture mapping, so that many computed viewpoints can be rendered with high fidelity. This enables relighting of the scene within the game engine, refocusing, virtual camera movements and more. Some capture solutions allow viewing (outward capture) or movement (inward capture) with six degrees of freedom (6DOF).
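To make the depth point concrete, here is a minimal sketch of classic triangulation for rectified cameras placed along a horizontal line, which is one common way to recover depth from multiple views; it is not presented as any speaker's actual pipeline. A point at depth Z shifts by a disparity d = f·B/Z between two views whose centres are a baseline B apart (f is the focal length in pixels). With several cameras, each disparity gives an estimate of 1/Z, and a least-squares fit combines them, which hints at why denser arrays improve fidelity. The function names and the camera parameters below are hypothetical and chosen only for illustration.

```python
from typing import Sequence


def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Two-view triangulation for rectified cameras: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of both cameras")
    return focal_px * baseline_m / disparity_px


def depth_from_array(focal_px: float,
                     baselines_m: Sequence[float],
                     disparities_px: Sequence[float]) -> float:
    """Least-squares depth from several cameras on a line (hypothetical helper).

    Each camera i, offset by baselines_m[i] from a reference camera, observes
    disparity d_i = f * b_i * w, where w = 1 / Z. Minimizing
    sum_i (d_i - f * b_i * w)^2 gives w = sum(d_i * f * b_i) / sum((f * b_i)^2).
    """
    if len(baselines_m) != len(disparities_px) or not baselines_m:
        raise ValueError("need one disparity per baseline, and at least one camera")
    scaled = [focal_px * b for b in baselines_m]
    numerator = sum(d * s for d, s in zip(disparities_px, scaled))
    denominator = sum(s * s for s in scaled)
    inverse_depth = numerator / denominator
    if inverse_depth <= 0:
        raise ValueError("observations imply a point behind the cameras")
    return 1.0 / inverse_depth


if __name__ == "__main__":
    # Assumed example: 1000 px focal length, 10 cm spacing between cameras.
    print(depth_from_disparity(1000.0, 0.1, 20.0))
    # Three cameras at 0.1, 0.2 and 0.3 m all observing a point 5 m away.
    print(depth_from_array(1000.0, [0.1, 0.2, 0.3], [20.0, 40.0, 60.0]))
```

With noisy disparities, adding more cameras (more terms in the sums) averages out the error in the 1/Z estimate, and wider baselines produce larger disparities that are less sensitive to a one-pixel matching error.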

He also summarized light field activities within the MPEG and JPEG standards communities:

- MPEG-I
  - 3DOF: 360-degree
  - 3DOF+: 360-degree plus small movement
  - Windowed 6DOF: classical light field
  - 6DOF: free movement in space
- JPEG-PLENO
  - Call for Proposals for light field coding
  - Grand challenge

Al Lopez from Stargate Studios said his company is a visual effects (VFX) house, and he explained the concept of a virtual backlot. The idea is to shoot against a green screen during production and then insert the scene's location virtually at the VFX house. This is not a new idea, but there is increasing pressure to do it more quickly and less expensively. As a result, Stargate has created a library of 3D virtual locations that can be added to the video very easily. In fact, the company now has a previsualization capability that inserts the virtual location into the captured green screen footage in real time.

These virtual locations are currently captured with camera arrays plus LIDAR for the 3D information, but this is not a real-time process. A light field solution might make real-time capture possible. This matters because the 3D virtual world needs to be aware of the real world on set. For example, Lopez wants to be able to change the lighting on the actors in the real world and have that change reflected in the virtual world in real time, since mismatched lighting is often a problem when blending real and virtual worlds.

Jules Urbach of Otoy summarized the many light field-related activities his company is involved with. The list is impressive, and Otoy is clearly one of the most broadly engaged companies in the light field ecosystem. Its mission is to democratize the creation and publication of light field data and images.

Otoy has developed a full holographic workflow that includes its lightstage (inward-looking) capture system, its Octane rendering engine and its ORBX media player.

Urbach also noted that Otoy is working with Facebook on Facebook's newly launched multi-camera rigs. One camera features 24 lenses, and the other, less expensive version has six. Both offer 6DOF capability. Facebook aims to give professional filmmakers the ability to capture 2D and 3D 360-degree video.

According to Urbach, the raw images from the Facebook cameras will be sent to Otoy's cloud servers to create a 360-degree video with depth information. Editing can then be done in packages such as Adobe After Effects or The Foundry's Nuke. The content is then imported into a game engine (Unity, for example), and Otoy's Octane rendering engine generates the final 360-degree 3D video with 6DOF for playback on a variety of platforms. These platforms do not yet have light field displays, but Urbach expects them to arrive.

Otoy’s media player, ORBX, is a lightweight player that can run in a browser at low data rates (as low as 1.5 Mbps). On a smartphone, for example, the display acts as a window or portal into the virtual world, and moving the phone reveals different views of that world. The player can also work with barcode cards in an Augmented Reality application: when the card is viewed through the mobile device, a virtual image appears on it.

Otoy is active in the MPEG-I and JPEG-PLENO groups as well, in keeping with its goal of democratizing the light field ecosystem.

Jon Karafin, CEO of the newly launched Light Field Lab, was next. He gave an overview of his company, which focuses on light field processing and display. His talk, along with a pre-NAB interview we conducted with him, is summarized in a separate article (Light Field Lab, Inc. Taking Ecosystem Approach).

Urbach is very bullish on the adoption of light field technology, saying he expects content to be captured and processed with it on a regular basis starting in 2018. Karafin agreed, saying that in 4 to 5 years light field capture could become “ubiquitous in production.” Urbach added that light field capture can already be used to eliminate the green screen, but whether it is used will depend on the fidelity required. – CC