The Virtual Reality Village opened for the first time at the SIGGRAPH 2015 (Special Interest Group on Computer Graphics and Interactive Techniques) Conference and Exhibition in Los Angeles. One VR Village highlight was a nomadic virtual reality system from NYU called “Holojam.”

NYU Lab's Nomadic Virtual Reality Space in the SIGGRAPH 2015 VR Village. Source: Sechrist

The demonstration showed a “first-ever” real-time rendered 3D space using only the processing power of the Samsung Galaxy Note 4 smartphones mounted in the headsets.

The group added a motion tracking system that shares position and other data, enabling interactive collaboration (collaborative drawing in 3D space) while tracking and displaying multiple people. “We aren’t the first ones showing nomadic real-time 3D rendering with Holojam,” NYU professor and Media Research Lab director Dr. Ken Perlin told us, “but we are the first to demonstrate this using off-the-shelf mobile devices and standard Wi-Fi connectivity.” The team used a commodity router and a multicast protocol, and demonstrated the system running on nothing more than commodity phones.
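The appeal of multicast here is that one tracking server can send each update once and every headset on the Wi-Fi network receives it. The team did not describe its packet format, so the sketch below is only an illustration under stated assumptions: the wire layout (marker ID, timestamp, xyz position), the group address `239.1.2.3` and port `5005` are all hypothetical. It uses only Python's standard `socket` and `struct` modules.

```python
import socket
import struct

# Hypothetical wire format (not Holojam's actual protocol): one packet per
# tracked marker. "<" = little-endian, no padding; I = uint32 marker ID,
# d = float64 timestamp, 3f = float32 x, y, z position.
PACKET_FORMAT = "<Id3f"
PACKET_SIZE = struct.calcsize(PACKET_FORMAT)  # 4 + 8 + 12 = 24 bytes

# Hypothetical group/port; 239.0.0.0/8 is the administratively scoped
# multicast range, suitable for a private Wi-Fi network.
MCAST_GROUP = "239.1.2.3"
MCAST_PORT = 5005


def pack_marker(marker_id, timestamp, position):
    """Serialize one marker sample into a fixed-size binary packet."""
    x, y, z = position
    return struct.pack(PACKET_FORMAT, marker_id, timestamp, x, y, z)


def unpack_marker(packet):
    """Inverse of pack_marker: returns (marker_id, timestamp, (x, y, z))."""
    marker_id, timestamp, x, y, z = struct.unpack(PACKET_FORMAT, packet)
    return marker_id, timestamp, (x, y, z)


def make_sender(ttl=1):
    """UDP socket for the tracking server; TTL 1 keeps packets on the local subnet."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_TTL, ttl)
    return sock


def make_receiver():
    """UDP socket for each headset: joins the group so it sees every update."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", MCAST_PORT))
    # ip_mreq struct: multicast group address + local interface (any).
    mreq = struct.pack("4s4s", socket.inet_aton(MCAST_GROUP), socket.inet_aton("0.0.0.0"))
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP, mreq)
    return sock
```

On the server, a call such as `make_sender().sendto(pack_marker(7, t, (x, y, z)), (MCAST_GROUP, MCAST_PORT))` would broadcast one marker; each headset would loop on `make_receiver().recvfrom(PACKET_SIZE)` and decode with `unpack_marker`. Fixed-size binary packets keep per-update overhead small, which matters when many markers share one commodity router.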

“This is work looking out 20 years. By then we expect to superimpose anything on reality, much like Ray Bradbury imagined in his 1951 short story ‘The Veldt,’” Perlin emphasized. He also cited work from Microsoft and Google predicting that functionality similar to what was demonstrated today should be possible by the end of the decade.

In the VR Village, users wore tracking markers on their hands, feet and head, and the system used a well-known technique called inverse kinematics (IK). From those location data points it calculates the position of the hips and shoulders and can reconstruct full bodies. The software is based on a modified Unity engine that can render many lines in a single draw call; one grad student in the NYU booth said they were able to achieve 12,000 such lines from a single draw call.
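NYU did not detail its IK solver, so as a rough illustration here is the textbook analytic two-bone case: given an estimated shoulder, a tracked hand marker and the two bone lengths, the law of cosines fixes where the elbow must sit (the same math places a knee between hip and foot). The `pole` hint, which picks the bend direction, and all function names are hypothetical; this is a generic sketch, not the Holojam code.

```python
import math

# Small 3D vector helpers on plain tuples.
def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def add(a, b):
    return tuple(x + y for x, y in zip(a, b))

def scale(a, s):
    return tuple(x * s for x in a)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def norm(a):
    return math.sqrt(dot(a, a))

def normalize(a):
    return scale(a, 1.0 / norm(a))  # assumes a is not the zero vector


def two_bone_ik(root, target, upper_len, lower_len, pole):
    """Place the middle joint (elbow or knee) of a two-bone chain.

    root:   fixed joint, e.g. an estimated shoulder (hypothetical input)
    target: tracked end marker, e.g. a hand marker; must differ from root
    pole:   point the joint should bend toward; must not lie on the
            root-target line
    The chain's end lands on target whenever target is within reach.
    """
    axis = normalize(sub(target, root))
    # Clamp the reach so a valid triangle always exists.
    dist = norm(sub(target, root))
    dist = min(max(dist, abs(upper_len - lower_len) + 1e-9), upper_len + lower_len - 1e-9)
    # Law of cosines: angle at the root between the axis and the upper bone.
    cos_a = (upper_len**2 + dist**2 - lower_len**2) / (2 * upper_len * dist)
    cos_a = max(-1.0, min(1.0, cos_a))
    sin_a = math.sqrt(1.0 - cos_a * cos_a)
    # Bend direction: the part of (pole - root) perpendicular to the axis.
    to_pole = sub(pole, root)
    bend = normalize(sub(to_pole, scale(axis, dot(to_pole, axis))))
    return add(root, add(scale(axis, upper_len * cos_a), scale(bend, upper_len * sin_a)))
```

For example, `two_bone_ik((0, 0, 0), (0, 0, 1.5), 1.0, 0.8, (1, 0, 0.5))` returns an elbow exactly 1.0 from the shoulder and 0.8 from the hand, bent toward the pole. A full-body reconstruction would chain steps like this after first estimating hips and shoulders from the head and foot markers.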
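The single-draw-call trick generally comes down to data layout: instead of submitting each drawn stroke separately, all strokes are packed into one vertex buffer plus one index buffer of line segments, and the GPU draws them together. The details of NYU's Unity modifications were not described, so the plain-Python sketch below (with a hypothetical `batch_strokes` helper) only shows what that merged layout could look like.

```python
def batch_strokes(strokes):
    """Merge many polylines into one vertex list and one segment index list.

    strokes: list of polylines, each a list of (x, y, z) points.
    Returns (vertices, indices) where every consecutive index pair is one
    line segment, ready for a line-list primitive in a single draw call.
    """
    vertices = []
    indices = []
    for stroke in strokes:
        base = len(vertices)  # offset of this stroke in the shared buffer
        vertices.extend(stroke)
        # Each consecutive pair of points in the stroke becomes one segment.
        for i in range(len(stroke) - 1):
            indices.extend((base + i, base + i + 1))
    return vertices, indices
```

With 12,000 two-point strokes this yields 24,000 vertices and 24,000 indices, but still just one buffer pair and therefore one draw call, rather than 12,000 separate calls each paying CPU-side submission overhead.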

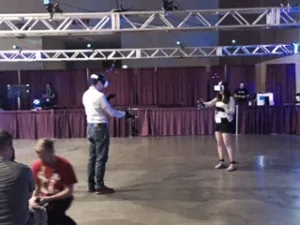

VR Headset with 3D Tracking. Source: Sechrist

The USC Institute for Creative Technologies (MxR Lab), part of the Dept. of Computer Science, also had an active nomadic technology demo at this year’s first VR Village. Associate Lab Director Dr. Evan Suma told us his demo focused on making small spaces appear bigger in VR. The “Infinite Walking in VR” demo uses the Oculus Rift with 3D tracking and its nine-degree-of-freedom sensing to “hack human perception, making curved paths appear straight,” he said. That raises the question: “How big a space do you really need to achieve the effect of infinite space in VR?” The group demonstrated its solution in a 35 x 30 ft (about 10.7 x 9.1 m) space at the show, but said it has shrunk the required area to a more manageable 10 x 15 ft (about 3 x 4.6 m). However, the “trade-off is always freedom of motion versus quality of the experience.”
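One standard way to make curved paths feel straight is a curvature gain: as the user walks, the virtual scene is rotated slightly, so the user unconsciously steers along a physical circle of radius r, with a gain of 1/r radians per meter walked. USC did not say which gains it used, so the sketch below simply does the room-size arithmetic for the two spaces quoted, under a hypothetical 0.5 m safety margin from the walls; all function names are illustrative.

```python
import math

FEET_TO_M = 0.3048


def curvature_gain(radius_m):
    """Rotation injected per meter walked (rad/m) to bend a straight virtual
    path onto a physical circle of radius radius_m."""
    return 1.0 / radius_m


def max_radius(width_m, depth_m, margin_m=0.5):
    """Largest walking circle that fits in a rectangular tracked area,
    keeping a (hypothetical) safety margin from the walls."""
    return min(width_m, depth_m) / 2.0 - margin_m


def required_side(gain_rad_per_m, margin_m=0.5):
    """Inverse question: square side needed for a given maximum gain."""
    return 2.0 / gain_rad_per_m + 2.0 * margin_m


def redirect(scene_yaw, step_m, radius_m, direction=1):
    """Per-frame update: rotate the virtual scene by gain * distance walked.
    direction (+1 or -1) picks which way the physical path curves."""
    return scene_yaw + direction * curvature_gain(radius_m) * step_m


for name, w_ft, d_ft in [("show floor", 35, 30), ("reduced", 10, 15)]:
    r = max_radius(w_ft * FEET_TO_M, d_ft * FEET_TO_M)
    print(f"{name}: radius {r:.2f} m, gain {math.degrees(curvature_gain(r)):.1f} deg/m")
```

Under these assumptions the show-floor space allows a circle of roughly 4 m radius and a gain of about 14 degrees per meter, while the 10 x 15 ft space forces roughly a 1 m radius and about 56 degrees per meter. Larger gains are easier to notice, which is one concrete reading of the trade-off Suma described.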


At the USC lab, the “infinite space effect” is achieved using stop-motion animation and 360-degree images delivered from every angle. Suma told us the lab employs light field rendering, image-based rendering and other complex visual techniques that go well beyond the basic polygon crunching seen in video games. He also said they have made much progress using pre-rendered images, so most of the heavy lifting in presenting the 3D space can be done in advance, making the real-time VR stream “very lightweight.”
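To see why pre-rendering makes the live stream light, consider a much simpler relative of the image-based techniques Suma mentioned: render 360-degree images offline at a set of capture points, then at runtime just pick the nearest captures to the viewer and blend them. Because each capture covers every viewing direction, only the viewer's position is needed to choose images. The lab's actual pipeline was not described; the capture layout and the inverse-distance weighting below are assumptions for illustration only.

```python
import math


def blend_prerendered_views(capture_points, viewer_pos):
    """Choose the two pre-rendered captures nearest the viewer and return
    [(capture_index, blend_weight), ...] with weights summing to 1.

    capture_points: list of (x, y, z) positions where 360-degree images
    were rendered offline (hypothetical layout).
    """
    if len(capture_points) == 1:
        return [(0, 1.0)]
    # Sort captures by distance to the viewer; ties break on index.
    dists = sorted((math.dist(p, viewer_pos), i) for i, p in enumerate(capture_points))
    (d0, i0), (d1, i1) = dists[0], dists[1]
    if d0 == 0.0:
        return [(i0, 1.0)]  # viewer stands exactly on a capture point
    # Inverse-distance weights: the closer capture contributes more.
    w0, w1 = 1.0 / d0, 1.0 / d1
    total = w0 + w1
    return [(i0, w0 / total), (i1, w1 / total)]
```

A viewer at `(0.5, 0, 0)` between captures at `(0, 0, 0)` and `(2, 0, 0)` gets weights 0.75 and 0.25. The per-frame cost is a distance sort and a two-image blend, while the expensive rendering all happened beforehand.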

So if you are so inclined, get over to SIGGRAPH at the LA Convention Center this week. You will see not only all things VR but also all the other computer animation wizardry the show has delivered for 42 consecutive years. – Steve Sechrist