Under-display cameras (UDCs) are designed to change what users expect of a smartphone display by removing the carved-out features, such as the notch, that set aside a separate space for the camera alongside the display. Is it really that much of an issue? Maybe not to users, who probably have few expectations beyond a decent selfie camera.

UDCs do allow for better product design and give the smartphone display a sleek, wraparound look. They may also prove to be a key feature, giving users the sense of a display that is optimized for maximum use and reach. However, UDCs face their own challenges, the major one being the image degradation that comes from placing the camera behind the display.

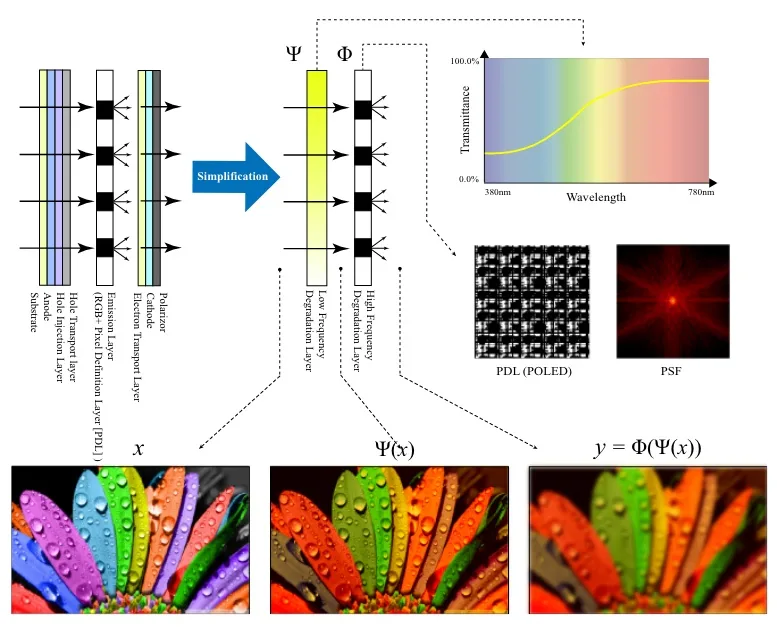

The first challenge faced by UDCs is diffraction artifacts. In an OLED screen, the pixel grid is the arrangement of pixels that forms the display, and the region of the grid above a UDC has to be specially designed so that the camera can capture images through the screen. The grid typically has periodic gaps between pixels, which act as apertures for the camera. However, these gaps diffract the incoming light, producing artifacts that degrade the quality of the images captured by the UDC. There are natural limits on how far the OLED display can be modified to increase transparency and reduce distortion of the light reaching the UDC. Modifications such as reducing pixel density or changing the sub-pixel layout can help, but they aren’t enough on their own.
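To make the diffraction argument concrete, here is a minimal sketch of how a periodic grid of openings spreads light. It assumes an idealized far-field (Fraunhofer) model in which the point spread function is the squared magnitude of the Fourier transform of the aperture. The grid dimensions (`size`, `pitch`, `opening`) and both function names are illustrative assumptions, not measurements or code from any real panel.

```python
import numpy as np

def grid_aperture(size=256, pitch=16, opening=6):
    """Toy binary mask: 1 where light passes between pixels, 0 where it is blocked.
    All dimensions are in arbitrary grid units and are purely illustrative."""
    mask = np.zeros((size, size))
    for y in range(0, size, pitch):
        for x in range(0, size, pitch):
            mask[y:y + opening, x:x + opening] = 1.0
    return mask

def psf_from_aperture(mask):
    """Far-field PSF of the aperture: |FFT(mask)|^2, normalized to unit energy."""
    field = np.fft.fftshift(np.fft.fft2(mask))
    psf = np.abs(field) ** 2
    return psf / psf.sum()

mask = grid_aperture()
psf = psf_from_aperture(mask)

center = psf.shape[0] // 2
lobe_spacing = mask.shape[0] // 16         # periodic mask -> lobes every size/pitch bins
central_energy = psf[center, center]       # fraction of light that stays in the main peak
first_lobe = psf[center, center + lobe_spacing]

print(f"open-area fraction:   {mask.mean():.4f}")
print(f"central peak energy:  {central_energy:.4f}")
print(f"first side lobe:      {first_lobe:.4f}")
```

In this toy model the energy left in the central peak equals the open-area fraction of the mask, and the remainder is thrown into regularly spaced side lobes. That is one way to see why opening up the grid helps but cannot remove the artifacts while the structure stays periodic.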

In the same vein, there is the question of color shifts. These shifts are primarily caused by the multiple thin-film layers in OLED displays, which can alter the light’s path and its spectral characteristics, reducing color accuracy and consistency in the captured images. Adjusting the materials and structure of those thin-film layers to minimize these alterations could help, in combination with changes to the design of the pixels and the pixel layout.
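On the software side, one common way to compensate for a fixed color shift is a 3x3 color correction matrix fitted from reference patches. The sketch below is only an illustration of that idea: the `shift` matrix is a made-up stand-in for the effect of the display stack, the patches are random values rather than a real color chart, and a real thin-film stack would not be this linear or noise-free.

```python
import numpy as np

# Hypothetical attenuation and crosstalk introduced by the display layers.
# These numbers are invented for illustration only.
shift = np.array([
    [0.80, 0.05, 0.00],
    [0.03, 0.70, 0.02],
    [0.00, 0.06, 0.55],
])

rng = np.random.default_rng(0)
reference = rng.uniform(0.0, 1.0, size=(24, 3))  # 24 reference patches, linear RGB
captured = reference @ shift.T                   # what the UDC records in this toy model

# Fit a correction matrix so that captured @ ccm approximates reference
# in the least-squares sense.
ccm, *_ = np.linalg.lstsq(captured, reference, rcond=None)
corrected = captured @ ccm

print("max error before correction:", np.abs(captured - reference).max())
print("max error after correction: ", np.abs(corrected - reference).max())
```

Because the toy shift is exactly linear and noise-free, the fit recovers the reference colors almost perfectly. With real captures the residual would be larger, which is part of why correction in post-processing complements, rather than replaces, work on the layers themselves.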

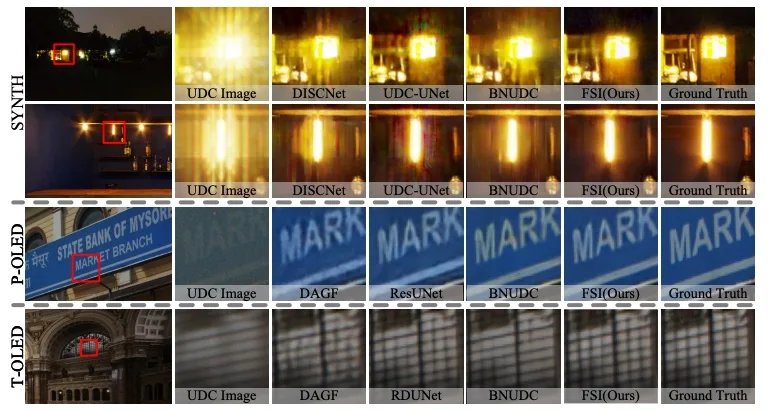

However, the likelihood is that any captured image will need some level of complex post-processing to make the necessary adjustments and deliver an image that matches the “ground truth,” in other words, one that is as close as possible to what was actually seen. This raises the specter of additional circuitry to restore images in both the frequency domain (how rapidly light and dark vary across the image) and the spatial domain (the pixel values of the picture itself), as well as some form of brute-force image restoration AI.
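As a point of reference for what frequency-domain restoration means, here is a minimal sketch of classical Wiener deconvolution. It is a textbook technique, not the method used in either paper referenced at the end of this article. It assumes the blur is known, is the same across the whole image, and wraps around at the edges. The Gaussian blur, the checkerboard test image, and the `noise_to_signal` value are all illustrative assumptions.

```python
import numpy as np

def wiener_deconvolve(blurred, psf, noise_to_signal=1e-3):
    """Restore an image in the frequency domain given a known PSF.
    `psf` must have the same shape as `blurred` and be centered in the array."""
    H = np.fft.fft2(np.fft.ifftshift(psf))      # transfer function of the blur
    G = np.fft.fft2(blurred)                    # spectrum of the degraded image
    W = np.conj(H) / (np.abs(H) ** 2 + noise_to_signal)
    return np.real(np.fft.ifft2(W * G))

size = 64
yy, xx = np.mgrid[:size, :size]

# Test image: a checkerboard with 8-pixel squares.
sharp = ((xx // 8 + yy // 8) % 2).astype(float)

# Stand-in blur: a centered Gaussian, normalized to unit sum.
r2 = (xx - size // 2) ** 2 + (yy - size // 2) ** 2
psf = np.exp(-r2 / (2 * 1.5 ** 2))
psf /= psf.sum()

# Simulate the degraded capture with circular convolution via the FFT.
blurred = np.real(np.fft.ifft2(np.fft.fft2(sharp) * np.fft.fft2(np.fft.ifftshift(psf))))

restored = wiener_deconvolve(blurred, psf)
err_blurred = np.mean((blurred - sharp) ** 2)
err_restored = np.mean((restored - sharp) ** 2)
print(f"MSE before restoration: {err_blurred:.5f}")
print(f"MSE after restoration:  {err_restored:.5f}")
```

A real UDC blur is far less forgiving than this Gaussian: it varies across the field, depends on wavelength, and comes with noise that a single regularization constant handles poorly. That gap is what pushes the work toward learned restoration and raises the processing and power questions.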

I have highlighted two approaches by researchers to the issues facing UDCs in the references below. They suggest that, in assessing future smartphone displays, developers will have to go beyond the architecture of the display itself and look at new ways of processing images in real time. That raises power issues, as well as the question of what the best trade-offs are going to be for any implementation.

None of this is to dismiss the challenges of physically creating transparent holes in an OLED for a UDC. The challenges of designing the pixel grids and component layers have been discussed before on Display Daily, and Visionox has done some good work explaining its approach to creating OLED panels for UDCs. However, there is still more work to be done on the post-processing of images, and a purely hardware solution for UDCs is likely some way off. We have a way to go in terms of materials development, thin-film design, and even manufacturing processes to make UDCs ubiquitous. For now, they are an inconspicuous hole in the display. At some point, they have to become a part of the display.

References

- Koh, J., Lee, J., & Yoon, S. (2022). BNUDC: A Two-Branched Deep Neural Network for Restoring Images from Under-Display Cameras. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 1940–1949. https://doi.org/10.1109/CVPR52688.2022.00199

- Liu, C., Wang, X., Li, S., Wang, Y., & Qian, X. (2023). FSI: Frequency and Spatial Interactive Learning for Image Restoration in Under-Display Cameras. 2023 IEEE/CVF International Conference on Computer Vision (ICCV), 12537–12546.