The morning of the first day of Display Summit 2017 was devoted to describing advanced displays.

Thomas Burnett of FoVI 3D started by describing the company's light field display system, which is based on integral imaging techniques. FoVI 3D has already developed several light field displays and now has contracts to develop next-generation solutions. The system uses arrays of OLED microdisplays, with fiber-optic tapers leading to an array of microlenses. The microlens array forms the image plane, and light field images can be rendered to appear above and below this plane.

In November, the company plans to release its LfD DK2 development kit, which will offer a monochrome yellow display with 160×160 hogels (or microlenses/microdisplays), a 60° field of view and a projection depth of 100-120 mm.

FoVI 3D is also developing a new light field rendering architecture in which a miniGPU sits under each microdisplay to render the light field. This would eliminate the need to render views sequentially and should greatly increase the frame rate.
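FoVI 3D did not detail its rendering pipeline. As a rough illustration of why per-hogel rendering parallelizes well, here is a sketch using a simplified 1D pinhole model of integral imaging. It assumes each lenslet acts as a pinhole with the microdisplay pixels one focal length behind it; the `Hogel` class, the function names and all dimensions are hypothetical. For a scene point above the image plane (positive z) or below it (negative z), each hogel independently picks the pixel whose ray passes through that point.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Hogel:
    """One lenslet plus the microdisplay pixels behind it (1D for simplicity)."""
    x_center_mm: float      # lenslet centre on the image plane
    focal_length_mm: float  # pixel plane sits this far behind the lenslet
    pixel_pitch_mm: float   # microdisplay pixel pitch
    pixels: int             # number of pixels across this hogel


def pixel_for_point(h: Hogel, x_mm: float, z_mm: float) -> Optional[int]:
    """Index of the pixel whose ray, through the lenslet centre, passes through
    the point (x_mm, z_mm). z > 0 is above the image plane, z < 0 below it.
    Returns None if that ray falls outside this hogel."""
    if z_mm == 0:
        return None  # points exactly on the image plane are not handled here
    # Similar triangles: offset on the pixel plane relative to the lenslet axis.
    offset_mm = h.focal_length_mm * (h.x_center_mm - x_mm) / z_mm
    index = round(offset_mm / h.pixel_pitch_mm + (h.pixels - 1) / 2)
    return index if 0 <= index < h.pixels else None


def render_point(hogels: List[Hogel], x_mm: float, z_mm: float) -> List[Optional[int]]:
    # Each hogel needs only the scene and its own geometry, so these calls are
    # independent of one another: work a per-hogel processor could do in parallel.
    return [pixel_for_point(h, x_mm, z_mm) for h in hogels]


hogels = [Hogel(x, 3.0, 0.1, 21) for x in (-2.0, -1.0, 0.0, 1.0, 2.0)]
print(render_point(hogels, 0.0, 30.0))   # → [8, 9, 10, 11, 12]
print(render_point(hogels, 0.0, -30.0))  # → [12, 11, 10, 9, 8]
```

With five hogels 1 mm apart, a point 30 mm above the plane lights pixels 8 through 12, and the same point 30 mm below lights them in reverse order, which is how a single array can place imagery on either side of the image plane.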

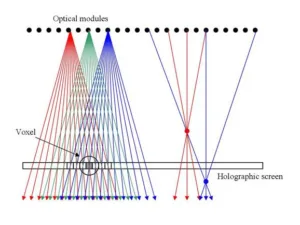

Tibor Balogh of Holografika described the company's horizontal-parallax-only system, which uses an array of projectors and a holographically defined screen. The screen confines the rays from each projected image to a narrow 1° horizontal width while giving them a wide vertical field of view. This allows rays from multiple projectors to converge at real light points in front of the screen. The system can be designed for either a wide or a narrow field of view.
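A back-of-the-envelope sketch of the wide-versus-narrow trade-off follows. It assumes, for illustration only, that neighbouring projectors are spaced so their angular separation seen from the screen matches the screen's 1° horizontal ray width, and that the projectors sit on an arc of a chosen radius. The function name and the 2 m radius are hypothetical. Under these assumptions the projector count grows linearly with the field of view.

```python
import math
from typing import Tuple


def projector_estimate(fov_deg: float, ray_width_deg: float = 1.0,
                       arc_radius_m: float = 2.0) -> Tuple[int, float]:
    """Rough projector count and spacing for a horizontal-parallax-only display.

    Assumes neighbouring projectors are separated, as seen from the screen,
    by the screen's horizontal ray width, so N projectors cover N * width
    degrees without gaps. Ignores extra projectors needed at the screen edges.
    """
    count = math.ceil(fov_deg / ray_width_deg)
    # Arc length between neighbouring projectors on a circle of radius arc_radius_m.
    spacing_m = arc_radius_m * math.radians(ray_width_deg)
    return count, spacing_m


for fov in (20.0, 50.0):
    n, s = projector_estimate(fov)
    print(f"{fov:.0f} deg FOV: {n} projectors, ~{s * 1000:.0f} mm apart")
```

In this simplified model a 50° design needs about 50 projectors roughly 35 mm apart on a 2 m arc, while a 20° design needs only 20.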

Balogh said Holografika has developed systems from 30” to 140” for a wide variety of use cases. He also revealed that the company had just installed a telepresence system in Korea that shows full-size 3D images of the person on the other end of the teleconference.

Jon Karafin of Light Field Lab said there are three key elements to evaluate in a light field display:

- Rays per degree – higher ray density is better

- View volume – controls the amount of freedom the viewer has to move in a given light field space

- 2D equivalent resolution – determined by the number of active rays that can be delivered in any 2D slice in space
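Karafin's talk did not give formulas for these metrics. As a simplified sketch of the first one, assume each pixel under a lenslet emits one ray direction and that the rays are spread evenly across the hogel's field of view; rays per degree is then pixels across the hogel divided by that field of view. The second function hints at why density matters: it estimates how far apart neighbouring rays from one hogel land at a given distance. Both functions and the example hogel are hypothetical.

```python
import math


def rays_per_degree(pixels_across_hogel: int, hogel_fov_deg: float) -> float:
    # Simplified model: one ray direction per pixel, spread evenly over the hogel's FOV.
    return pixels_across_hogel / hogel_fov_deg


def ray_gap_mm(rpd: float, distance_mm: float) -> float:
    # Lateral gap between adjacent rays from one hogel at a given distance from
    # the image plane; roughly, smaller gaps allow finer detail away from the plane.
    return distance_mm * math.tan(math.radians(1.0 / rpd))


rpd = rays_per_degree(120, 60.0)  # hypothetical hogel: 120 pixels over 60 degrees
print(rpd, round(ray_gap_mm(rpd, 500.0), 2))
```

That hypothetical hogel gives 2 rays per degree, with adjacent rays about 4.4 mm apart at 500 mm from the image plane.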

In 2018, the company plans to showcase its R150MP prototype, a 4” x 6” demonstrator with 150 million 2D equivalent pixels, which Karafin thinks will be the highest on record when it debuts.

Ultimately, the company wants to build a 16K x 10K consumer display using a tiled approach, with each tile offering 650 million 2D equivalent pixels. The plan is to offer this by 2020.

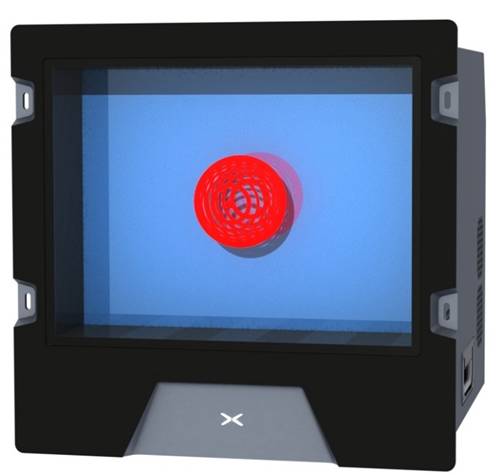

Ilmars Osmanis of LightSpace Technologies then described the company's volumetric display. It uses a DLP projector and a stack of electrically switchable diffusion screens to show slices of an image sequentially within a box, like a sliced loaf of bread. The drawback of this display modality is that only translucent images can be displayed. That means you can’t properly render the outside of a heart, but you can see all the structures within the heart at the same time, which can be an advantage in some types of applications.
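LightSpace did not describe its software pipeline, so the following is only a sketch of the slicing idea. It assumes equally spaced diffuser planes and assigns each voxel to the nearest plane; the function name and data layout are hypothetical. In operation, the projector would show plane i's image while diffuser i scatters and the others are transparent. Because the planes only add light, nothing in front can hide what lies behind, which is the translucency limitation described above.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Voxel = Tuple[float, float, float, float]  # (x, y, z_mm, intensity)


def slice_volume(voxels: Iterable[Voxel], num_planes: int,
                 depth_mm: float) -> Dict[int, Dict[Tuple[float, float], float]]:
    """Assign each voxel to the nearest of num_planes equally spaced diffuser
    planes spanning 0..depth_mm, and build one image per plane.

    Intensities that land on the same pixel of a plane are summed. Planes only
    add light; nothing occludes anything else, hence the translucent look.
    """
    if num_planes < 2:
        raise ValueError("need at least two planes")
    spacing = depth_mm / (num_planes - 1)
    planes = defaultdict(lambda: defaultdict(float))
    for x, y, z, intensity in voxels:
        index = min(num_planes - 1, max(0, round(z / spacing)))
        planes[index][(x, y)] += intensity
    # Plain dicts, one per plane; plane i would be projected while diffuser i scatters.
    return {i: dict(pixels) for i, pixels in planes.items()}


volume = [(0, 0, 0.0, 1.0), (0, 0, 12.0, 0.5), (0, 0, 14.0, 0.25), (3, 4, 190.0, 1.0)]
print(slice_volume(volume, num_planes=20, depth_mm=190.0))
```

With 20 planes over 190 mm (10 mm spacing), the voxels at 12 mm and 14 mm both land on plane 1 and their intensities simply add.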

Like all the displays above, this approach creates a real image, so it avoids the vergence-accommodation conflict inherent in stereoscopic 3D displays. Target applications include medical imaging, scientific data visualization and airport luggage scanning, among others.

At the event, the company showed its x1406 display, which looked quite good. It offers 16-20 depth planes with 1024×768 resolution per plane, in a 14” image with perhaps 6-8” of depth. An upgrade in development will offer 2560×1600 resolution per plane and should be ready in about a year.
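A quick voxel budget from the figures quoted for the x1406 can be worked out as below. It treats each depth plane as a full image and assumes (this was not stated in the talk) that the upgrade keeps 16-20 planes. Here $W \times H$ is the per-plane resolution, $D$ the depth of the volume and $\Delta z$ the spacing between adjacent planes.

$$
\begin{aligned}
N_{\text{voxels}} &= N_{\text{planes}} \times W \times H \\
\text{x1406:}\quad & 16 \times 1024 \times 768 = 12{,}582{,}912 \quad\text{to}\quad 20 \times 1024 \times 768 = 15{,}728{,}640 \\
\text{upgrade:}\quad & 16 \times 2560 \times 1600 = 65{,}536{,}000 \quad\text{to}\quad 20 \times 2560 \times 1600 = 81{,}920{,}000 \\
\Delta z &= \frac{D}{N_{\text{planes}} - 1}: \quad \frac{6\,\text{in}}{19} \approx 0.32\,\text{in} \quad\text{to}\quad \frac{8\,\text{in}}{15} \approx 0.53\,\text{in}
\end{aligned}
$$

On these assumptions the upgrade raises the voxel count roughly fivefold, while depth resolution is still set by the number of planes.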

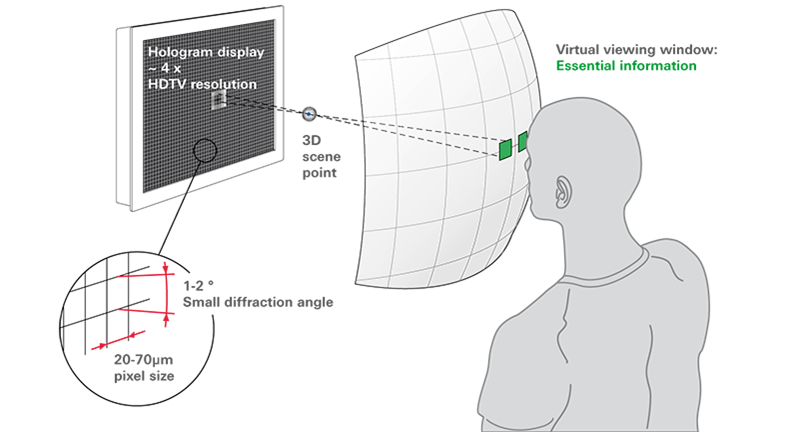

Tobias Schuster of SeeReal described the company's efforts to create a true real-time holographic display. He explained that generating a hologram over a 14” screen would require several tens of billions of pixels to deliver roughly HD resolution. That is not possible today, so SeeReal's idea is to render on the spatial light modulator only the part of the hologram (the sub-hologram) that contributes light rays to each eye. That reduces the requirement to around 10 million pixels, with pixel pitches on the order of 20 to 100 microns, which is doable today.
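The arithmetic behind this can be sketched under a few assumptions that are not from the talk: a 14” panel with a 16:10 aspect ratio (about 301.5 mm × 188.5 mm), a 532 nm green laser, and the standard diffraction limit for a pixelated modulator, sin θ = λ / (2p), where p is the pixel pitch. The function names are hypothetical. A pitch near 1 µm diffracts light over roughly ±15° but needs tens of billions of pixels; at 50 µm the count falls to tens of millions, while the diffraction angle shrinks to about ±0.3°, which is why the sub-hologram approach relies on tracking the viewer's eyes.

```python
import math


def slm_pixels(width_mm: float, height_mm: float, pitch_um: float) -> int:
    # Number of modulator pixels needed to cover the panel at the given pitch.
    pitch_mm = pitch_um / 1000.0
    return round(width_mm / pitch_mm) * round(height_mm / pitch_mm)


def max_diffraction_half_angle_deg(pitch_um: float, wavelength_nm: float) -> float:
    # Diffraction limit of a pixelated modulator: sin(theta) = lambda / (2 * pitch).
    return math.degrees(math.asin(wavelength_nm * 1e-9 / (2 * pitch_um * 1e-6)))


WIDTH_MM, HEIGHT_MM = 301.5, 188.5  # assumed 14-inch 16:10 panel
for pitch in (1.0, 50.0, 70.0):
    n = slm_pixels(WIDTH_MM, HEIGHT_MM, pitch)
    angle = max_diffraction_half_angle_deg(pitch, 532.0)
    print(f"{pitch:5.1f} um pitch: {n:,} pixels, about +/-{angle:.2f} deg")
```

At a pitch of roughly 70 µm the count comes out near 11.6 million, in line with the figure Schuster quoted.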

SeeReal has built a prototype, but it is a very complicated system. It includes a backlight unit with a collimated and expanded laser source; a large 14” spatial light modulator made of two LCDs with special liquid crystal materials; a field lens; and eye tracking combined with beam steering (using two variable phase gratings in liquid crystal layers), so the user can move their head to see new views of the scene.
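The beam-steering step can be illustrated with the first-order grating equation for normally incident light, sin θ = λ / Λ, where Λ is the grating period. The sketch computes, for one axis only, the period a switchable phase grating would need to deflect light toward an eye that has moved sideways. The 532 nm wavelength, the viewing geometry and the function name are illustrative assumptions, not details of SeeReal's prototype.

```python
import math


def grating_period_um(eye_offset_mm: float, viewing_distance_mm: float,
                      wavelength_nm: float = 532.0) -> float:
    """Period of a first-order phase grating that deflects normally incident
    light toward an eye displaced laterally by eye_offset_mm (one axis only)."""
    theta = math.atan2(abs(eye_offset_mm), viewing_distance_mm)
    if theta == 0.0:
        return math.inf  # eye on axis: no steering needed
    # Grating equation, first order: sin(theta) = lambda / period.
    return wavelength_nm * 1e-3 / math.sin(theta)


for offset in (25.0, 100.0, 200.0):
    print(f"{offset:.0f} mm off axis at 700 mm: period {grating_period_um(offset, 700.0):.2f} um")
```

An eye 100 mm off axis at 700 mm needs a period of about 3.76 µm; larger head movements need finer gratings.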

SeeReal is also looking at LCOS-based solutions for HUDs, HMDs and direct-view displays.

Finally, Tommy Thomas of Third Dimension Technologies, which is developing solutions similar to Holografika's, described their efforts to develop a standard for streaming light field data over networks. A full day before Display Summit was devoted to this topic alone (see www.smfold.org for more).

In the panel discussion, Karafin reiterated a point he had briefly mentioned in his talk: he thinks that light field displays in the integral imaging or plenoptic sense can’t be used for AR or VR. He noted that the Lytro cinema camera has a very large main lens because that lens defines the view volume of the light field capture. AR/VR is like running this in reverse, with the size of the eye’s pupil defining the view volume. Since the pupil is so small, you won’t create a very compelling light field image. A better approach might be a multi-planar display, although some are calling those light field displays too. – CC