What is HDR10+, you ask? It is the HDR10 format augmented with dynamic metadata: in this case, Samsung’s dynamic metadata format as standardized in SMPTE ST 2094-40. Three other methods for adding dynamic metadata to an HDR10 format are specified in other parts of the same SMPTE ST 2094 suite.

The four are:

- Dolby (Parametric Tone Mapping)

- Philips (Parameter-based Color Volume Reconstruction)

- Technicolor (Reference-based Color Volume Remapping)

- Samsung (Scene-based Color Volume Mapping)

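HDR10, which uses the SMPTE ST 2084 (PQ) transfer function, only requires static metadata to be included with the HDR content: the mastering display color volume described in SMPTE ST 2086, plus two content light level values. That means the following parameters should be attached to each piece of HDR content.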

- Mastering display chromaticity primaries

- White point chromaticity of the mastering display

- Maximum mastering display luminance

- Minimum mastering display luminance

- Maximum Content Light Level (Max CLL)

- Maximum Frame Average Light Level (Max FALL)
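To make the list above concrete, here is a minimal sketch of how these static parameters might be held together in code. The class name, field names, and the `exceeds_display` helper are hypothetical, and the values are illustrative (BT.2020 primaries, a D65 white point, and a made-up 1000 cd/m² grade), not taken from any real title or bitstream syntax.

```python
from dataclasses import dataclass
from typing import Tuple

Chromaticity = Tuple[float, float]  # CIE 1931 (x, y) coordinates


@dataclass(frozen=True)
class StaticHdrMetadata:
    """Hypothetical container for the static HDR10 parameters."""
    red_primary: Chromaticity
    green_primary: Chromaticity
    blue_primary: Chromaticity
    white_point: Chromaticity
    max_mastering_luminance: float  # cd/m²
    min_mastering_luminance: float  # cd/m²
    max_cll: float                  # brightest pixel in the content, cd/m²
    max_fall: float                 # highest frame-average level, cd/m²


# Illustrative values only: they do not describe any real piece of content.
example = StaticHdrMetadata(
    red_primary=(0.708, 0.292),
    green_primary=(0.170, 0.797),
    blue_primary=(0.131, 0.046),
    white_point=(0.3127, 0.3290),
    max_mastering_luminance=1000.0,
    min_mastering_luminance=0.005,
    max_cll=1000.0,
    max_fall=400.0,
)


def exceeds_display(meta: StaticHdrMetadata, display_peak: float) -> bool:
    """True when the content's brightest pixel is above the display's peak."""
    return meta.max_cll > display_peak
```

Because these values are fixed for the whole piece of content, a call such as `exceeds_display(example, 500.0)` gives one answer for every scene, bright or dark.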

Whenever the receiving display’s performance characteristics do not match those of the mastering display, tone mapping must be applied. Tone mapping involves mapping the luminance range of the source to the luminance range of the actual display. But this luminance mapping also affects the colors and the color saturation, so the algorithms can become sophisticated. Some algorithms, for example, will choose to maintain the highest luminance levels but may change some colors. Other approaches focus on maintaining color accuracy, but at the expense of peak luminance. Both can be valid approaches.
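The trade-off between luminance and color can be illustrated with a small sketch. Everything here is an assumption made for illustration: the knee-and-roll-off `tone_curve`, the 0.75 knee fraction, and both mapping functions are hypothetical and are not the algorithm used by any particular TV or standard. One function applies the curve to each channel separately (which keeps more light but changes the ratios between channels), the other scales all three channels by a common factor (which keeps the ratios but dims the pixel).

```python
from typing import Tuple

RGB = Tuple[float, float, float]  # linear-light channel values in cd/m²


def tone_curve(level: float, src_peak: float, dst_peak: float,
               knee_fraction: float = 0.75) -> float:
    """Leave levels below the knee alone; roll off smoothly above it."""
    if src_peak <= dst_peak:
        return level  # the display can show the full source range
    knee = knee_fraction * dst_peak
    if level <= knee:
        return level
    level = min(level, src_peak)
    t = (level - knee) / (src_peak - knee)   # 0 at the knee, 1 at src_peak
    headroom = dst_peak - knee
    a = headroom / (src_peak - dst_peak)     # gives slope 1 at the knee
    return knee + headroom * t * (1.0 + a) / (t + a)


def luminance(rgb: RGB) -> float:
    """Relative luminance using the BT.2020 weighting coefficients."""
    r, g, b = rgb
    return 0.2627 * r + 0.6780 * g + 0.0593 * b


def map_per_channel(rgb: RGB, src_peak: float, dst_peak: float) -> RGB:
    """Keeps more luminance, but the R:G:B ratios (the color) can shift."""
    r, g, b = rgb
    return (tone_curve(r, src_peak, dst_peak),
            tone_curve(g, src_peak, dst_peak),
            tone_curve(b, src_peak, dst_peak))


def map_preserving_ratios(rgb: RGB, src_peak: float, dst_peak: float) -> RGB:
    """Keeps the R:G:B ratios, at the cost of a dimmer result."""
    peak = max(rgb)
    if peak <= 0.0:
        return rgb
    scale = tone_curve(peak, src_peak, dst_peak) / peak
    r, g, b = rgb
    return (r * scale, g * scale, b * scale)
```

For a hypothetical pixel of (1000, 400, 100) mapped from a 1000 cd/m² source to a 500 cd/m² display, the per-channel version yields roughly (500, 397, 100), which is brighter overall but noticeably less saturated, while the ratio-preserving version yields (500, 200, 50), which keeps the original color but is dimmer.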

The problem with static metadata is that a single tone-mapping function is assigned to the entire piece of content. Darker scenes are treated the same way as brighter scenes. This means the highlights are reproduced better in bright scenes, but darker scenes appear too dim.

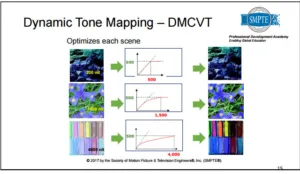

The dynamic tone mapping chart, from a recent SMPTE webinar, shows the peak luminance levels for three scenes from a piece of content being tone-mapped to a display with a peak luminance of 500 cd/m². In this case, three different tone mapping curves are used, each based upon the peak luminance level in its scene, resulting in a more faithful reproduction of the HDR content on a 500 cd/m² display.
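The difference between one curve for the whole piece of content and one curve per scene can be sketched in a few lines. The scene names, the scene peak values, and the simple `roll_off` curve below are all hypothetical; they are not the numbers from the chart, and the curve is not the one defined in ST 2094-40. Only the 500 cd/m² display peak comes from the example above.

```python
DISPLAY_PEAK = 500.0  # cd/m², the display in the example above

# Hypothetical per-scene peak luminance values in cd/m².
SCENE_PEAKS = {"night": 200.0, "interior": 1000.0, "sunlit": 4000.0}

# With static metadata, only the peak of the whole content is known.
CONTENT_PEAK = max(SCENE_PEAKS.values())


def roll_off(level: float, src_peak: float, dst_peak: float) -> float:
    """Compress 0..src_peak into 0..dst_peak with a smooth hyperbolic curve."""
    if src_peak <= dst_peak:
        return level  # the range already fits, so nothing needs compressing
    a = dst_peak / (src_peak - dst_peak)  # gives slope 1 near black
    x = min(level, src_peak) / src_peak
    return dst_peak * x * (1.0 + a) / (x + a)


def static_map(level: float) -> float:
    """One curve for everything, driven by the content-wide peak."""
    return roll_off(level, CONTENT_PEAK, DISPLAY_PEAK)


def dynamic_map(level: float, scene: str) -> float:
    """One curve per scene, driven by that scene's own peak."""
    return roll_off(level, SCENE_PEAKS[scene], DISPLAY_PEAK)
```

Under these assumptions, a 150 cd/m² pixel in the night scene drops to about 119 cd/m² with the static curve but stays at 150 cd/m² with the per-scene curve, since that scene already fits within the display's range. A 1000 cd/m² highlight in the interior scene lands at about 364 cd/m² with the static curve and at the full 500 cd/m² with the per-scene curve.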

Samsung’s HDR10+ approach requires that metadata be added to the HDR10 stream to instruct the TV how to tone map on a scene-by-scene basis. This metadata is generated by a colorist during the grading process. It is then encoded into the SEI (Supplemental Enhancement Information) part of the HEVC-encoded stream. The TV must then be capable of recognizing the Samsung HDR10+ signal and applying the dynamic tone mapping on a scene-by-scene basis. As you might have suspected, all of Samsung’s Q series (Q7, Q8 and Q9) HDR TVs include decoding of the HDR10+ signal. In the second half of this year, Samsung’s 2016 UHD TVs will gain HDR10+ support through a firmware update.

The new agreement with Amazon Prime means that when Amazon interrogates the TV to understand its capabilities, it will now recognize whether the TV is HDR10+ capable and deliver an appropriate stream for that TV.

I have seen demos of this HDR10+ capability at Samsung’s lab in New Jersey, where HDR10 content with static metadata is compared to an HDR10+ version with dynamic metadata. The dynamic metadata does indeed help to bring out details in darker scenes and even out the average picture level across the content. But I also noticed there were changes in some colors. What is unclear is whether this was intentional (i.e. the colorist was able to fine-tune those scenes the way he/she wanted) or whether it is an artifact of the tone mapping algorithm. Only your colorist knows for sure! – CC