The standards organization VESA has developed a compression codec called Display Stream Compression (DSC). Silicon to support encoding and decoding of the video has now been developed, which should let products enter the market by early next year.

To learn more, we talked with Montreal-based Hardent, which has developed an IP core for DSC encoding and decoding. The company recently announced a licensing deal with Korea-based Silicon Works (partly owned by LG Electronics), which will license the decoder IP and build it into an ASIC for mobile devices.

Qualcomm recently announced its new Snapdragon 820 mobile processor SoC, which includes a DSC encoder, so the ecosystem for a DSC rollout is starting to become real.

According to Alain Legault, vice president of IP products at Hardent, VESA began developing a compression codec about two and a half years ago. Six companies submitted algorithms for consideration in the new standard, and the proposal from Broadcom ultimately won approval.

Other video compression standards, such as H.264 and HEVC, are developed around a basic algorithm and structure, leaving companies free to create their own implementations of the codec. Implementers then need to pay a royalty to a licensing organization to build silicon.

VESA DSC is structured differently: the algorithm was fully developed by VESA members into a single finalized format (version 1.1 was issued in July 2014). That development, carried out over the last couple of years, included testing with end users through visual assessments. There is no royalty to use the codec, but you must be a VESA member to access it. The members agreed to this approach to help ensure interoperability between devices that use the codec.

Hardent has taken this algorithm and built it into an IP core that can be implemented in an ASIC or FPGA. Legault said that silicon fabs or fabless companies can develop the IP core themselves, or turn to companies such as Hardent that have invested considerable time and money to develop, test and validate the IP core so that it is ready for production.

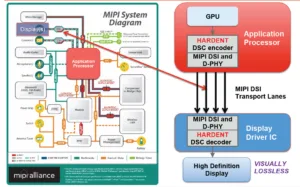

Given the Qualcomm announcement and the details of the Silicon Works decoder, it looks as though mobile applications will be the first to adopt DSC. According to Legault, the recommendation is to use DSC for light compression of the video, up to 3:1. The compression is done in the application processor, such as the Qualcomm 820 SoC, and the resulting bitstream is then carried over a physical-layer interface. This can be MIPI DSI 1.2 for communication within a device, or DisplayPort for a wired connection between devices (such as your mobile phone and a TV or monitor). Other physical interfaces are likely to follow.
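To make "up to 3:1" concrete, the sketch below turns a compression ratio into a target compressed bit depth in bits per pixel (bpp) and the resulting stream rate. It assumes 8-bit RGB or 4:4:4 source video (24 bpp) and counts active pixels only, ignoring blanking; the helper names are hypothetical and not part of any DSC API.

```python
def compressed_bpp(source_bpp: float, ratio: float) -> float:
    """Target bits per pixel after compressing by `ratio` (e.g. 3.0 for 3:1)."""
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return source_bpp / ratio


def stream_rate_gbps(width: int, height: int, fps: float, bpp: float) -> float:
    """Active-pixel data rate in Gbit/s (blanking intervals are ignored)."""
    return width * height * fps * bpp / 1e9


src_bpp = 24  # assumption: 8 bits per component, three components per pixel
dsc_bpp = compressed_bpp(src_bpp, 3.0)
raw = stream_rate_gbps(3840, 2160, 60, src_bpp)
packed = stream_rate_gbps(3840, 2160, 60, dsc_bpp)
print(f"{dsc_bpp:.1f} bpp: {raw:.2f} Gbit/s -> {packed:.2f} Gbit/s")
```

For 4K at 60 Hz this prints `8.0 bpp: 11.94 Gbit/s -> 3.98 Gbit/s`, i.e. the same frames need roughly a third of the interface bandwidth.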

While DisplayPort 1.2 and 1.3 can already carry 4K video, adding compression to the interface lowers the data rate. This helps mobile device developers reduce RAM requirements, power consumption and EMI, and save on costs. Compression will become essential on DisplayPort, however, when 8K video has to be transferred between devices (e.g. GPUs, monitors or even mobile devices).
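A rough link-budget check shows why compression matters more at 8K. This sketch uses the per-lane rates of DisplayPort 1.2 (HBR2, 5.4 Gbit/s) and 1.3 (HBR3, 8.1 Gbit/s) over four lanes with 8b/10b line coding. As a simplifying assumption it counts only active pixels at 24 bpp, so real requirements including blanking are somewhat higher; the function names are illustrative.

```python
# Four-lane DisplayPort payload capacity after 8b/10b line coding (80% efficiency).
LANES = 4
LINK_RATES_GBPS = {"DP 1.2 (HBR2)": 5.4, "DP 1.3 (HBR3)": 8.1}


def payload_gbps(lane_rate_gbps: float, lanes: int = LANES) -> float:
    return lane_rate_gbps * lanes * 8 / 10


def video_gbps(width: int, height: int, fps: float, bpp: float) -> float:
    # Active pixels only; horizontal and vertical blanking are ignored here.
    return width * height * fps * bpp / 1e9


def fits(width: int, height: int, fps: float, bpp: float, link: str) -> bool:
    return video_gbps(width, height, fps, bpp) <= payload_gbps(LINK_RATES_GBPS[link])


for name, (w, h) in {"4K": (3840, 2160), "8K": (7680, 4320)}.items():
    for ratio in (1, 3):
        bpp = 24 / ratio
        need = video_gbps(w, h, 60, bpp)
        ok = [link for link in LINK_RATES_GBPS if fits(w, h, 60, bpp, link)]
        print(f"{name}60 at {ratio}:1 needs {need:.2f} Gbit/s; fits: {ok or 'none'}")
```

Under these simplifications, uncompressed 8K60 (about 47.8 Gbit/s) exceeds even the 25.92 Gbit/s payload of DP 1.3, while the 3:1 stream (about 15.9 Gbit/s) fits.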

Legault claims that at a 3:1 compression ratio, the restored image is visually identical to the original. I then asked how the image would degrade at higher compression ratios. Legault said that you start to see more diffuse noise in the image. This contrasts with the mosquito noise that appears in over-compressed MPEG or JPEG images and the ringing on hard edges seen with wavelet-based codecs such as TICO. “This diffuse noise is harder to see than the artifacts from other compression schemes,” noted Legault. “We have validated this visually lossless nature by showing over 250K images to users.”

Most importantly, encoding and decoding can be done with very low latency, about two video lines, which is something most other codecs can’t claim.
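To put "about two video lines" in perspective, the sketch below converts a latency measured in lines into time. It assumes the line period is the frame period divided by the total line count including blanking; the 2250-line total used for 3840 x 2160 at 60 Hz is the standard CTA-861 timing and serves only as an example.

```python
def line_period_us(fps: float, total_lines: int) -> float:
    """Duration of one scan line (blanking lines included) in microseconds."""
    return 1e6 / (fps * total_lines)


def latency_us(lines: float, fps: float, total_lines: int) -> float:
    """Latency in microseconds for a codec that buffers `lines` scan lines."""
    return lines * line_period_us(fps, total_lines)


frame_ms = 1e3 / 60
two_lines = latency_us(2, fps=60, total_lines=2250)  # 3840x2160p60 timing
print(f"2 lines = {two_lines:.2f} us vs one frame = {frame_ms:.2f} ms")
```

This prints `2 lines = 14.81 us vs one frame = 16.67 ms`; a codec that had to buffer a whole frame would add over a thousand times more delay.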

Legault also noted that Hardent has now developed an FPGA version of the encoder and decoder using a Xilinx device, which customers in the broadcast and ProAV segments are currently evaluating. Hardent also has a licensee in the automotive space.

The current implementation supports 8-, 10- and 12-bit video in 4:4:4 YCbCr or RGB format. An extension to 16-bit video, along with support for 4:2:2 and 4:2:0 chroma-subsampled video, is in development and may be completed by the end of the year.
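The bit depth and chroma format set the uncompressed bit rate that DSC starts from. This sketch computes bits per pixel before compression; the 16-bit and subsampled entries are included only because the text lists them as in development, and the table and function names are illustrative.

```python
# Average samples per pixel for each chroma format:
# 4:4:4 carries three components for every pixel, 4:2:2 halves chroma
# horizontally, and 4:2:0 halves chroma both horizontally and vertically.
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def uncompressed_bpp(bits_per_component: int, chroma: str) -> float:
    return bits_per_component * SAMPLES_PER_PIXEL[chroma]


for bpc in (8, 10, 12, 16):
    row = ", ".join(f"{c}: {uncompressed_bpp(bpc, c):g}" for c in SAMPLES_PER_PIXEL)
    print(f"{bpc}-bit -> {row} bpp")
```

At a fixed 3:1 ratio, for example, 10-bit 4:4:4 video (30 bpp) would be carried at 10 bpp.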

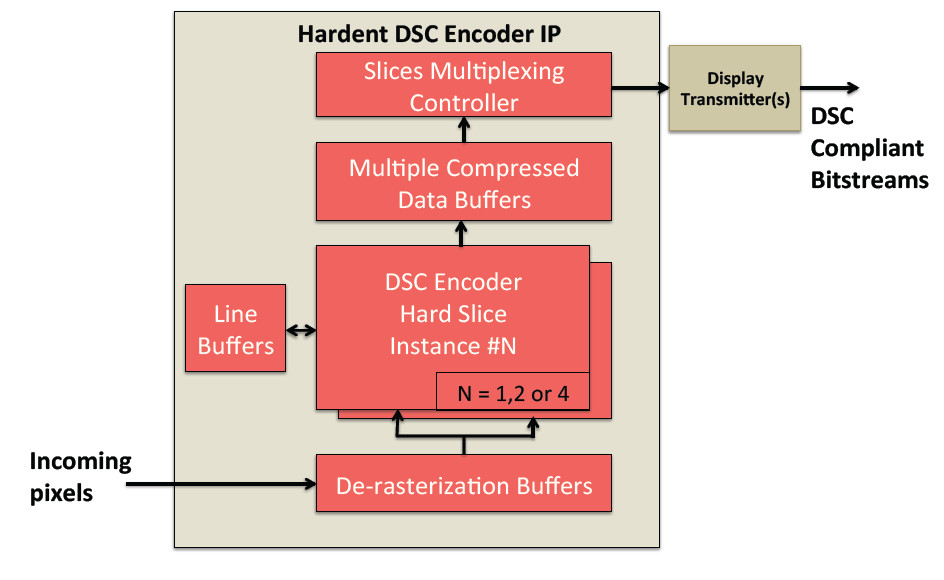

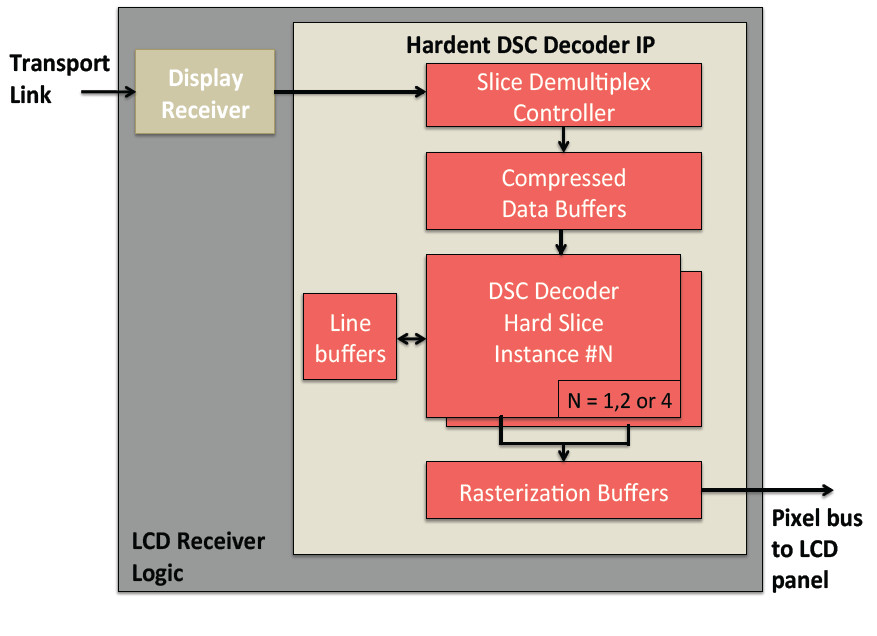

Legault also noted that the IP core implementation is scalable. It can be ported across the design rules of different fabs, and it allows an image to be processed in parallel. Images are processed in “slices” of about 100 lines each, a size that is adjustable, as shown in the block diagrams for the Hardent encoder and decoder above. For example, a 4K image can be divided into four slices, with a separate processor assigned to each slice and all four running in parallel.
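The slicing idea can be sketched as a simple partition: cut each frame into a grid of slices and give each slice column to its own encoder engine so the columns are processed in parallel. The 108-line slice height (2160 lines split into 20 rows, close to the roughly 100 lines mentioned), the `Slice` type and `slice_grid` helper are illustrative assumptions, and the code does not enforce DSC's actual constraints on slice dimensions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slice:
    x: int        # left edge in pixels
    y: int        # top edge in lines
    width: int
    height: int
    engine: int   # index of the parallel encoder assigned to this slice


def slice_grid(width: int, height: int, slices_per_line: int, slice_height: int) -> list[Slice]:
    """Split a frame into slices and assign one engine per slice column.

    Hypothetical helper: requires width to divide evenly by slices_per_line;
    the last row may be shorter if height is not a multiple of slice_height.
    """
    if width % slices_per_line:
        raise ValueError("width must divide evenly into slices_per_line")
    slice_width = width // slices_per_line
    grid = []
    for y in range(0, height, slice_height):
        h = min(slice_height, height - y)
        for col in range(slices_per_line):
            grid.append(Slice(col * slice_width, y, slice_width, h, engine=col))
    return grid


slices = slice_grid(3840, 2160, slices_per_line=4, slice_height=108)
print(len(slices), slices[0], slices[-1])
```

For a 4K frame this yields 80 slices (20 rows of four, each 960 pixels wide), with every engine handling one column; moving to 8K would mainly mean more columns or more engines.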

Extending this concept to the processing of 8K will be particularly helpful. Here, Hardent expects DSC to be implemented in GPU cards for encoding and in monitors for decoding. – Chris Chinnock

Analyst Comment

I spent a lot of time looking at the Hardent DSC implementation at CES this year and couldn’t spot any artefacts in the demo content (DisplayPort and DisplayStream Compression are Bullish; subscription required). We have heard that VESA is also looking at an additional version of DSC with slightly higher compression, possibly up to 4:1 or 5:1. – BR

This article was independently written and prepared, but was moved from behind the paywall with sponsorship from the company.