I’m back in the weeds of production and HDR today. I have been following a series of discussions in ‘The Broadcast Bridge’ about whether the traditional gamma curve is still needed. Last week I spotted an interesting editorial that takes some of the points and moves the discussion on. Thanks to Tony Orme for allowing us to reproduce his article here.

(For those who don’t know: the grey scale of a video signal has traditionally been represented by integer code values, e.g. 0 to 255 for 8-bit video. Each integer value corresponds to a level of luminance, which is either relative or, in the case of the PQ curve, absolute. Codecs used for distribution are built around this concept, so distribution has to stick to these values. Production content, however, is only lightly compressed or not compressed at all, so a different approach might be possible there.)
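As an illustration of how a code value maps to an absolute luminance, here is a minimal sketch of the PQ EOTF and its inverse, using the constants published in SMPTE ST 2084. The helper `code_to_nits` and its full-range normalization (code / 1023 for 10 bits) are assumptions made for illustration; broadcast signals normally use narrow-range coding.

```python
# Constants from SMPTE ST 2084 (PQ).
M1 = 2610 / 16384          # 0.1593017578125
M2 = 2523 / 4096 * 128     # 78.84375
C1 = 3424 / 4096           # 0.8359375
C2 = 2413 / 4096 * 32      # 18.8515625
C3 = 2392 / 4096 * 32      # 18.6875
PEAK_NITS = 10000.0        # PQ is defined up to 10,000 cd/m^2


def pq_eotf(signal: float) -> float:
    """Map a normalized PQ signal (0..1) to absolute luminance in nits."""
    p = signal ** (1.0 / M2)
    y = max(p - C1, 0.0) / (C2 - C3 * p)
    return PEAK_NITS * y ** (1.0 / M1)


def pq_inverse_eotf(nits: float) -> float:
    """Map absolute luminance in nits back to a normalized PQ signal (0..1)."""
    y = (nits / PEAK_NITS) ** M1
    return ((C1 + C2 * y) / (1.0 + C3 * y)) ** M2


def code_to_nits(code: int, bits: int = 10) -> float:
    """Hypothetical helper: full-range integer code value -> nits."""
    return pq_eotf(code / (2 ** bits - 1))


if __name__ == "__main__":
    for code in (0, 256, 512, 768, 1023):
        print(code, round(code_to_nits(code), 3))
```

Because the curve is defined in absolute terms, the top code value always means 10,000 nits, whatever display the signal is sent to.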

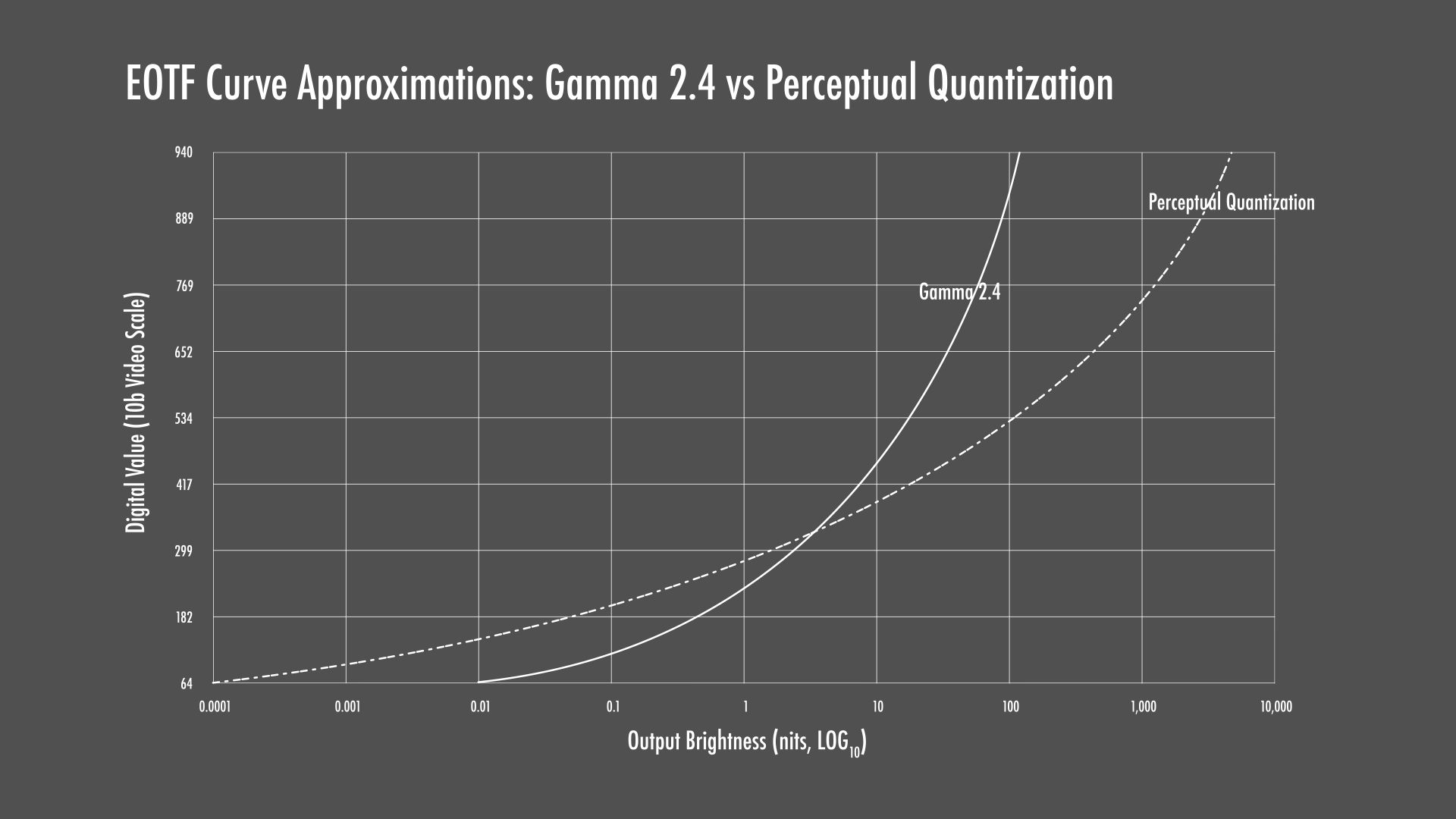

The PQ curve maps code values to absolute levels of luminance. Image: Mystery Box

Here’s Tony’s article.

Moving to IP is giving us new opportunities, as the essence media no longer has to be tied to the underlying transport stream. As more broadcasters take advantage of HDR, should we be looking to floating-point video samples to better represent the images?

In Part 9 of John Watkinson’s ten-part series “Is Gamma Still Needed?”, he raises the prospect of using floating point representation for video samples as an alternative to gamma type correction.

There’s much talk in the industry at the moment about incredibly bright displays, with technology demonstrations showing peak brightness of 10,000 nits. To me, this seems to send the wrong message about HDR. I realize that marketing departments want bigger numbers because they look more impressive in advertising literature, but I fear this may be doing HDR a disservice.

To me, HDR is about contrast, not necessarily about brightness. A display that is too bright could even reduce the contrast ratio of the image, as the excessively bright light reflects off the walls, bounces back onto the screen and causes flare. (see Can a Display Be Too Bright? – ed.) This is probably not much of an issue in ideal QC viewing environments, with black velvet curtains covering the walls and ceiling to absorb any reflections. But how many people watching at home have anything resembling an ideal viewing environment? The average home viewer would turn up the brightness, thereby increasing the light reflected from the walls and reducing the contrast ratio of the screen, negating many of the benefits of watching in HDR.
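The flare argument can be put in numbers with a simple model: reflected room light adds roughly the same luminance to the brightest and darkest parts of the picture, so the effective contrast becomes (peak + flare) / (black + flare). The sketch below assumes, purely for illustration, that flare is a fixed fraction of the average picture level; `room_factor` and all the nit values are hypothetical, not measurements.

```python
def effective_contrast(peak_nits: float, black_nits: float, flare_nits: float) -> float:
    """Contrast ratio after reflected room light is added to every pixel."""
    return (peak_nits + flare_nits) / (black_nits + flare_nits)


def flare_from_room(average_nits: float, room_factor: float = 0.01) -> float:
    """Hypothetical model: light returned to the screen is a fixed fraction
    of the screen's average output (room_factor is an assumed value)."""
    return average_nits * room_factor


if __name__ == "__main__":
    peak, black = 1000.0, 0.05  # assumed panel capabilities
    for average in (50.0, 200.0, 800.0):
        flare = flare_from_room(average)
        print(f"average {average:>5} nits -> contrast "
              f"{effective_contrast(peak, black, flare):,.0f}:1")
```

Under these assumptions, raising the average level from 50 to 800 nits cuts the effective contrast by more than an order of magnitude, even though the panel's own peak and black levels never change.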

Looking to the audio world, we rarely turn a 100W loudspeaker amplifier up to its highest setting. If we did, we’d probably deafen ourselves. We would normally listen at about 5 to 10W and leave the remaining 90 to 95W as headroom to better represent the transients and avoid clipping distortion. The same is true in video for HDR. We shouldn’t be aiming to drive the whole screen to its highest brightness, but should keep the average level comfortable to view and use the remaining nits for the transients.
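The headroom in the audio analogy is easier to compare with video when it is expressed logarithmically: decibels for power, and stops (doublings) for light. A minimal sketch; the 200-nit average and 1,000-nit peak used for the video case are assumed values for illustration only.

```python
import math


def headroom_db(peak_watts: float, average_watts: float) -> float:
    """Power headroom in decibels: 10 * log10(peak / average)."""
    return 10.0 * math.log10(peak_watts / average_watts)


def headroom_stops(peak_nits: float, average_nits: float) -> float:
    """Luminance headroom in photographic stops (factors of two)."""
    return math.log2(peak_nits / average_nits)


if __name__ == "__main__":
    # Audio: listening at 5 to 10 W on a 100 W amplifier, as in the text.
    for listening in (5.0, 10.0):
        print(f"{listening:.0f} W of 100 W -> "
              f"{headroom_db(100.0, listening):.1f} dB headroom")
    # Video: assumed 200-nit average picture level on a 1,000-nit display.
    print(f"200 of 1000 nits -> {headroom_stops(1000.0, 200.0):.2f} stops headroom")
```

The 5 to 10W listening level in the text corresponds to roughly 10 to 13 dB of headroom.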

Instead of trying to compress the transients into a gamma-type curve using integer samples, as HLG and HDR10 do, why not simply represent the video samples linearly as floating-point numbers? We would achieve massive dynamic range, resulting in better contrast and a more immersive experience for viewers.
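One way to quantify the dynamic range on offer is to count how many stops (doublings of linear light) each sample format can span. The rough sketch below takes code value 1 as the floor for linear integers and the smallest normal value as the floor for IEEE 754 floats; both floors are simplifying assumptions that ignore subnormals and real-world noise.

```python
import math

# IEEE 754 binary16 (half) and binary32 (single) limits.
HALF_MAX = 65504.0
HALF_MIN_NORMAL = 2.0 ** -14
FLOAT32_MAX = 3.4028234663852886e38
FLOAT32_MIN_NORMAL = 2.0 ** -126


def integer_linear_stops(bits: int) -> float:
    """Stops between code value 1 and full scale for linear integer samples."""
    return math.log2(2 ** bits - 1)


def float_stops(max_value: float, min_normal: float) -> float:
    """Stops between the smallest normal and largest finite float value."""
    return math.log2(max_value / min_normal)


if __name__ == "__main__":
    for bits in (8, 10, 12):
        print(f"{bits}-bit linear integer: {integer_linear_stops(bits):.1f} stops")
    print(f"half float: {float_stops(HALF_MAX, HALF_MIN_NORMAL):.1f} stops")
    print(f"single float: {float_stops(FLOAT32_MAX, FLOAT32_MIN_NORMAL):.1f} stops")
```

Even 16-bit half floats span about 30 stops, against roughly 10 for 10-bit linear integers.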

I agree that floating-point video samples would not work well with SDI, if at all. But with the adoption of IP, we can easily carry floating-point numbers, which would deliver massively improved quality in our workflows, as we would no longer need to be concerned with the limitations of gamma and the truncation errors associated with integer conversions. Linear floating-point representation is always going to be more accurate when processed in software.
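The truncation-error point can be illustrated by quantizing the same linear-light values in two ways: to 10-bit linear integers, and to IEEE half-precision floats (using the standard library's `struct` "e" format). The test levels are arbitrary. Because the integer step size is fixed, relative error grows rapidly in the shadows, whereas a half float's relative rounding error stays at or below 2^-11 across its normal range.

```python
import struct


def quantize_int(linear: float, bits: int = 10) -> float:
    """Round a linear value in 0..1 to the nearest integer code, then back."""
    full_scale = 2 ** bits - 1
    return round(linear * full_scale) / full_scale


def quantize_half(linear: float) -> float:
    """Round a value to IEEE 754 half precision and back."""
    return struct.unpack("<e", struct.pack("<e", linear))[0]


def relative_error(original: float, quantized: float) -> float:
    return abs(quantized - original) / original


if __name__ == "__main__":
    # Linear light levels from near-black to bright (arbitrary test points).
    for level in (0.0003, 0.003, 0.03, 0.3):
        int_err = relative_error(level, quantize_int(level))
        half_err = relative_error(level, quantize_half(level))
        print(f"level {level:<7} 10-bit int error {int_err:8.2%}  "
              f"half float error {half_err:8.4%}")
```

At the darkest level, the 10-bit integer rounds to zero and the shadow detail is lost entirely, while the half float still holds it to within a fraction of a percent.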

(I skipped over this to avoid the article getting too bloated, but Charles Poynton made the point in his talk at the HPA event (Poynton Defines “Scene Referred” Video) that an image leaves the camera after an OETF conversion, is converted back to linear light in post-production so that processing can be performed in a linear space, and is then mapped again on output. – ed.)
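The encode, linearize, process, re-encode chain described in the note can be sketched with the HLG OETF from ITU-R BT.2100 and its inverse. Linearizing with the inverse OETF (scene-referred) is an assumption here; a display-referred workflow would apply the EOTF instead. The 50/50 mix of a black pixel and a peak-white pixel is a hypothetical example chosen to show why the linear step matters.

```python
import math

# HLG OETF constants from ITU-R BT.2100.
A = 0.17883277
B = 1.0 - 4.0 * A                 # 0.28466892
C = 0.5 - A * math.log(4.0 * A)   # 0.55991073


def hlg_oetf(linear: float) -> float:
    """Scene-linear light (0..1) -> HLG signal value (0..1)."""
    if linear <= 1.0 / 12.0:
        return math.sqrt(3.0 * linear)
    return A * math.log(12.0 * linear - B) + C


def hlg_inverse_oetf(signal: float) -> float:
    """HLG signal value (0..1) -> scene-linear light (0..1)."""
    if signal <= 0.5:
        return signal * signal / 3.0
    return (math.exp((signal - C) / A) + B) / 12.0


def mix_in_linear(sig_a: float, sig_b: float) -> float:
    """Linearize, average the light, then re-encode for output."""
    linear = (hlg_inverse_oetf(sig_a) + hlg_inverse_oetf(sig_b)) / 2.0
    return hlg_oetf(linear)


def mix_in_signal(sig_a: float, sig_b: float) -> float:
    """Naive average of the encoded values (no linearization)."""
    return (sig_a + sig_b) / 2.0


if __name__ == "__main__":
    black, white = hlg_oetf(0.0), hlg_oetf(1.0)
    print("mixed in linear light:", round(mix_in_linear(black, white), 4))
    print("mixed in signal domain:", round(mix_in_signal(black, white), 4))
```

Averaging the light gives a signal of about 0.87, whereas averaging the encoded values gives 0.5, which decodes to only 1/12 of peak scene light. The mix comes out far too dark.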

Television has been saddled with the requirement for backwards compatibility since the first transmissions in the 1930s. IP and 4K are allowing us to escape these shackles, improve our workflows and deliver better pictures and sound to our viewers. I think we should be doing more to take advantage of this. (TO)

Tony Orme is editor of The Broadcast Bridge and has worked at the BBC, Sky and ITV and has recently focused on moving from SDI to IP-focused media centres. He is a SMPTE member, a lecturer at The University of Surrey, and currently chair of the Thames Valley Branch of the Royal Television Society.

Thanks for permission to reproduce this article, which was originally published in The Broadcast Bridge.