For virtual reality capture, there are two basic techniques:

- Inside-out capture features an array of cameras on a single rig looking outward. This is the most common type of VR capture.

- Outside-in capture features dozens of cameras arrayed around a space to capture images of it from many different angles. This is sometimes called volume capture.

Volume capture has been used in movies before: think of the scenes in The Matrix where the camera spins around whizzing bullets to show them from multiple angles. It is also popular at major sporting events, where it lets the big play be viewed from a number of viewpoints circling the action. Both techniques can also be used to capture light field data sets.

There are only a few companies that have outside-in capture equipment and facilities. Those I am aware of include Microsoft, 8i, Fraunhofer HHI, ETC/Otoy, and Digital Domain (I’d add HypeVR, working with Intel – Man. Ed.). In this article, we will summarize developments at NAB from some of these companies.

In the NAB Super Session titled “Next Generation Image Making – Taking Content Creation to New Places,” Steve Sullivan, General Manager, Holographic Image at Microsoft, gave a talk about the company’s volumetric capture. As shown in the image below, the facility includes 106 synchronized cameras with IR and RGB capture capability. The rig captures 10 Gbytes per second, which limits record time to only about 4 minutes.
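To put those figures in perspective, here is a rough back-of-the-envelope sketch of what they imply. It rests on assumptions that were not stated in the talk: decimal units (1 TB = 1000 GB) and a data rate split evenly across all 106 cameras, which is unlikely to be exactly true since the IR and RGB cameras probably differ. The function names are made up for illustration.

```python
# Figures quoted in the talk
DATA_RATE_GB_PER_S = 10   # aggregate capture rate
RECORD_TIME_MIN = 4       # approximate maximum record time
NUM_CAMERAS = 106         # synchronized IR + RGB cameras


def implied_storage_tb(rate_gb_per_s, minutes):
    """Data written during one full-length take, in decimal terabytes."""
    return rate_gb_per_s * minutes * 60 / 1000


def per_camera_rate_mb_per_s(rate_gb_per_s, num_cameras):
    """Average per-camera rate in MB/s, ASSUMING an even split across cameras."""
    return rate_gb_per_s * 1000 / num_cameras


storage_tb = implied_storage_tb(DATA_RATE_GB_PER_S, RECORD_TIME_MIN)
camera_mb_s = per_camera_rate_mb_per_s(DATA_RATE_GB_PER_S, NUM_CAMERAS)

print(f"One {RECORD_TIME_MIN}-minute take: about {storage_tb:.1f} TB")
print(f"Average per camera: about {camera_mb_s:.0f} MB/s")
```

In other words, a single full-length take comes to roughly 2.4 TB of raw data, which suggests why record time is so short.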

Captured images are processed to create a 3D model of the action, which can be imported into a game engine where it is textured with the video components. Microsoft calls the result a video hologram, as one can walk all around the image using a VR headset.

Sullivan said Microsoft is focusing development on four application areas: entertainment, education, commerce and memories. He thinks memories may become the ‘killer app’ as the ability to capture and display family moments with this technology goes mainstream. He highlighted a shoot with Star Trek actor George Takei, whose actions were captured and inserted into other contexts for entertainment purposes. They also did a shoot with astronaut Buzz Aldrin doing a moon landing reenactment.

Sullivan also noted that they can now combine inside-out capture within an outside-in capture to create a very compelling hybrid space to explore.

Andrew Shulkind, a director of photography, was included on the panel to provide the DP’s perspective. He noted that volume capture is used a lot in the visual effects pipeline today for movies, but current capture techniques limit the relight capabilities available in post, so improvements are needed. Using flat lighting limits options later. However, if you light with specialized light to optimize the capture, but plan on changing it in post, your options are even more limited.

Jon Karafin of Light Field Lab is proposing a new format for light field data that uses a sparse array of cameras along with the material properties of the objects and actors being captured. “This can result in a 100X reduction in bandwidth compared to a dense array capture.”

A company called 4DReplay was showing off a volumetric capture solution using a semi-circle of DSLR cameras. It looked as though about 40 cameras were used. In the demos, small groups of people would gather around a basketball hoop and ‘ham it up’ for the cameras. Only 4 to 5 seconds can be captured, but the view can then be moved around through all the camera viewpoints. Processing took less than a minute before the group could see their volumetric capture. You can see a video here.

4DReplay used an array of DSLRs to capture volumetric images.

Fraunhofer HHI was demonstrating its outside-in volumetric capture technique in its booth at NAB. It has several setups, including a 12-camera 180° setup for VR and another with just four cameras for TV. A 360° setup is planned for this summer. HHI is developing some of the tool sets to support the capture and processing of volumetric images and has already worked with others such as 8i, we were told. The technology is being used for the first time in a joint test production with UFA GmbH for the immersive film “Gateway To Infinity,” a volumetric virtual reality experience. Some test content was shown in a VR headset, in which the actors were captured with this technique and inserted into a CGI world using the Unreal game engine. It looked pretty good.

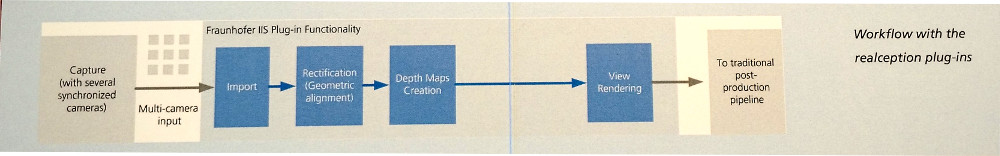

Sister research organization Fraunhofer IIS was demonstrating a light field post production tool it calls Realception, which is a plug-in for the Nuke post production suite. The idea is to use light field data to render the VR headset view based on head position. This will enable the creation of 6 DoF live action content for virtual and mixed reality.
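As a rough illustration of what rendering a view from head position can mean, here is a minimal sketch of one textbook light field idea: blending the captured views nearest to the viewer. This is not Realception’s method, which was not described in detail. The function name, the regular camera grid and the idea of expressing head position in grid coordinates are all assumptions made for this example.

```python
import math


def blend_weights(u, v, cols=3, rows=3):
    """Bilinear blend weights for the grid cameras nearest to a head position.

    (u, v) is the head position expressed in camera-grid units, so (0, 0) is
    the first camera and (cols - 1, rows - 1) the last. The grid layout is a
    HYPOTHETICAL simplification and needs at least 2 cameras per axis.
    Returns {(col, row): weight}; the weights sum to 1.
    """
    # Clamp the head position to the area covered by the cameras.
    u = min(max(u, 0.0), cols - 1)
    v = min(max(v, 0.0), rows - 1)

    # Lower-left camera of the grid cell that contains the head position.
    c0 = min(int(math.floor(u)), cols - 2)
    r0 = min(int(math.floor(v)), rows - 2)
    fu, fv = u - c0, v - r0

    weights = {
        (c0, r0): (1 - fu) * (1 - fv),
        (c0 + 1, r0): fu * (1 - fv),
        (c0, r0 + 1): (1 - fu) * fv,
        (c0 + 1, r0 + 1): fu * fv,
    }
    # Drop cameras that contribute nothing to this view.
    return {cam: w for cam, w in weights.items() if w > 0.0}


# Head halfway between the four cameras in the lower-left cell of the grid.
for cam, weight in blend_weights(0.5, 0.5).items():
    print(cam, weight)
```

A head position that lines up exactly with a camera returns that single view with a weight of 1, while positions in between mix up to four neighbors. A production tool would also use depth maps to warp each view before blending, which this sketch leaves out.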

Fraunhofer IIS used this statue to show volumetric capture

To illustrate the technology, IIS did a volumetric capture of a small statue of a woman using a 3×3 camera array moving from left to right around the object. The Realception plug-in can then process all these images to rectify, align and color correct them and to generate depth maps. Because not every view of the statue was captured, IIS needed a way to generate the views that were hidden. It does this by creating a light field representation of the statue that includes material properties, allowing unseen textures to be recreated when the data is imported into the Unreal game engine. The engine can then render the VR view based on the head position. – CC